Contents

- KUMA services

- Services tools

- Service resource sets

- Creating a storage

- Creating a correlator

- Creating an event router

- Creating a collector

- Predefined collectors

- Creating an agent

- Creating a set of resources for an agent

- Managing connections for an agent

- Creating an agent service in the KUMA web interface

- Installing an agent in a KUMA network infrastructure

- Automatically created agents

- Update agents

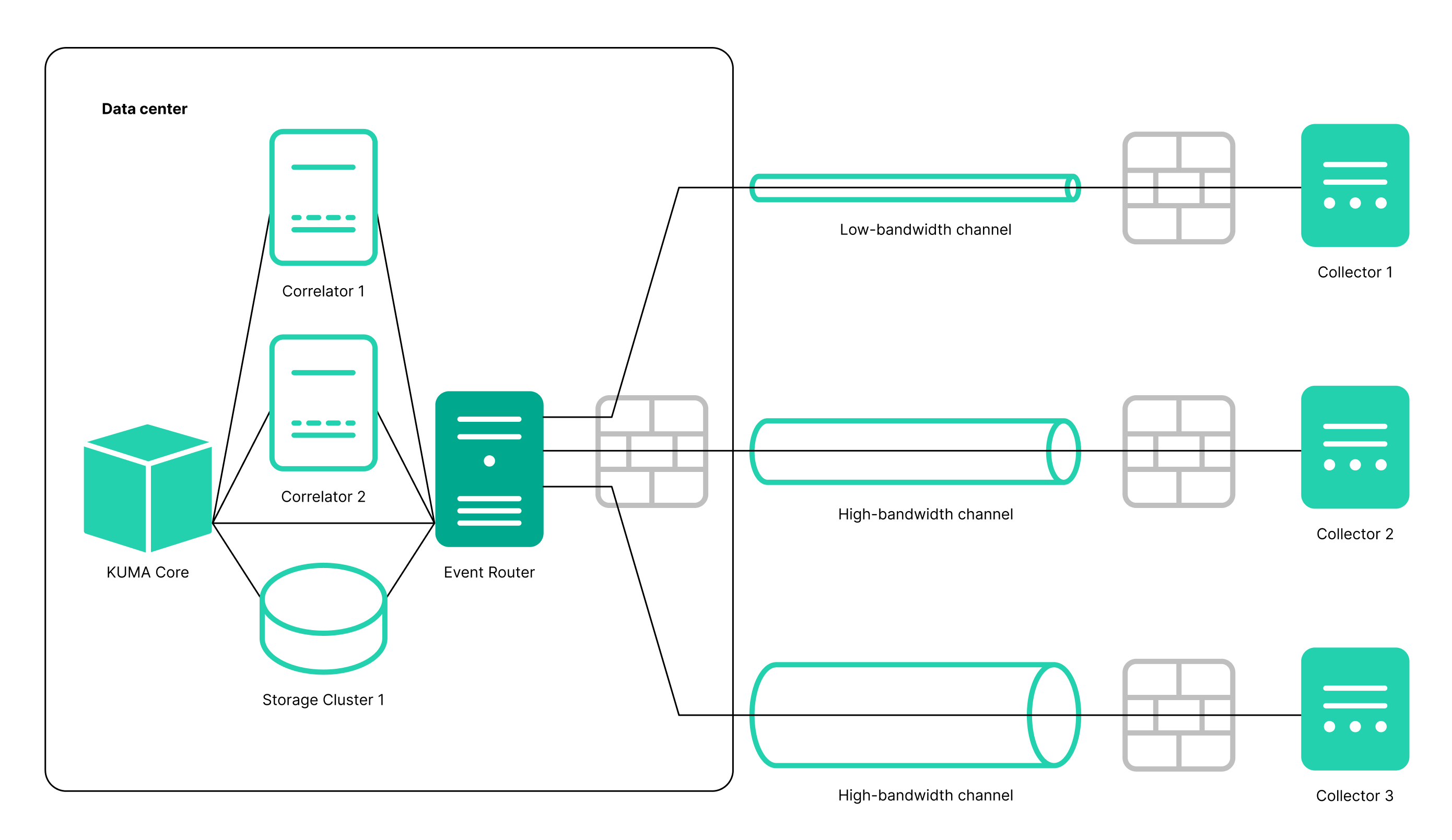

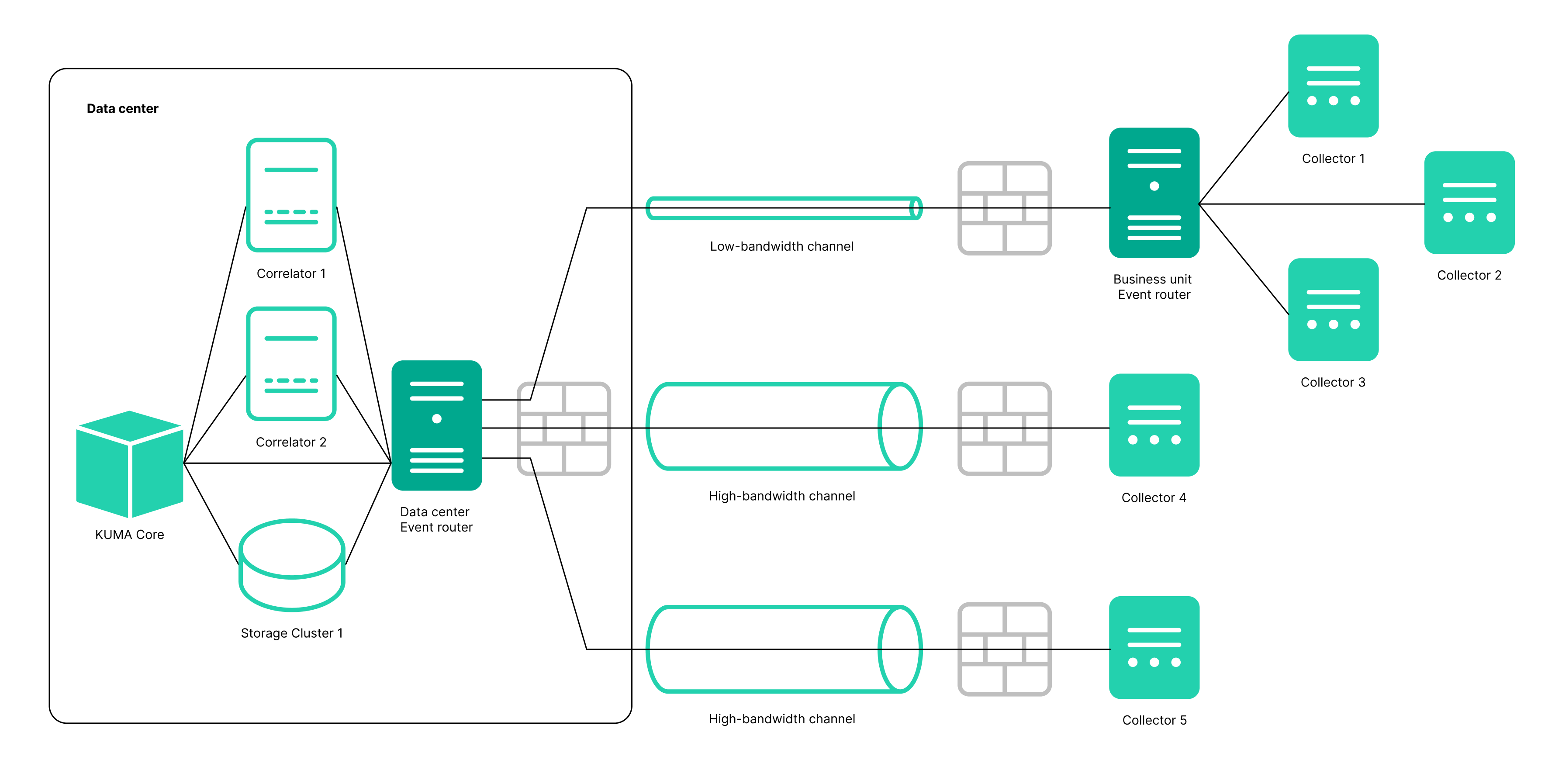

- Transferring events from isolated network segments to KUMA

- Transferring events from Windows machines to KUMA

- AI services

KUMA services

Services are the main components of KUMA that help the system to manage events: services allow you to receive events from event sources and subsequently bring them to a common form that is convenient for finding correlation, as well as for storage and manual analysis. Each service consists of two parts that work together:

- One part of the service is created in the KUMA web interface based on a resource set for services.

- The second part of the service is installed in the network infrastructure where the KUMA system is deployed as one of its components. The server part of a service can consist of multiple instances: for example, services of the same agent or storage can be installed on multiple devices at once.

On the server side, KUMA services are located in the /opt/kaspersky/kuma directory.

When you install KUMA in high availability mode, only the KUMA Core is installed in the cluster. Collectors, correlators, and storages are hosted on hosts outside of the Kubernetes cluster.

Parts of services are connected to each other via the service ID.

Service types:

- Storages are used to save events.

- Correlators are used to analyze events and search for defined patterns.

- Collectors are used to receive events and convert them to KUMA format.

- Agents are used to receive events on remote devices and forward them to KUMA collectors.

In the KUMA web interface, services are displayed in the Resources → Active services section in table format. The table of services can be updated using the Refresh button and sorted by columns by clicking on the active headers. You can also configure the columns displayed in the table. To do so, click the gear button in the upper-right corner to display a drop-down list. In that drop-down list, select check boxes next to the names of the columns that you want to display in the table. You can leave as few as one column, of your choice, displayed.

The maximum table size is not limited. If you want to select all services, scroll to the end of the table and select the Select all check box, which selects all available services in the table.

Table columns:

- Status—service status:

- Green means the service is running and accessible from the Core server.

- Red means the service is not running or is not accessible from the Core server.

- Yellow is the status that applies to all services except the agent. The yellow status means that the service is running, but there are errors in the service log, or there are alerts for the service from Victoria Metrics. You can view the error message by hovering the mouse cursor over the status of the service in the Active services section.

- Purple is the status that is applied to running services whose configuration file in the database has changed, but that have no other errors. If a service has an incorrect configuration file and also has errors, for example, from Victoria Metrics, the status of the service is yellow.

- Gray is the status of a service that belonged to a deleted tenant and is still running. Such a service is displayed with the gray status on the Active services page. Services with the gray status are kept when you delete the tenant to let you copy the ID and remove services on your servers. Only the General administrator can delete services with the gray status. When a tenant is deleted, the services of that tenant are assigned to the Main tenant.

- Type—type of service: agent, collector, correlator, storage, event router.

- Name—name of the service. Clicking on the name of the service opens its settings.

- Version—service version.

- Tenant—the name of the tenant that owns the service.

- FQDN—fully qualified domain name of the service server.

- IP address—IP address of the server where the service is installed.

- API port—Remote Procedure Call port number.

- Uptime—Uptime of the service.

- Created—the date and time when the service was created.

- UUID—Unique ID of the service.

By default, this column is not displayed in the table. You can add it to the table by clicking the gear icon in the upper-right part of the table of services and selecting the check box next to the name of the UUID column in the drop-down list.

You can sort the data in the table in ascending or descending order, and filter it by the Status parameter and by the service type in the Type column. To filter active services, right-click to open the context menu and select one or more statuses and a type.

You can use the buttons in the upper part of the Services window to perform the following group actions:

- Add service

You can create new services based on existing service resource sets. We do not recommend creating services outside the main tenant without first carefully planning the inter-tenant interactions of various services and users.

- Refresh

You can refresh the list of active services.

- Update configuration

The Update configuration button is not available if the KUMA Core service is among the services selected for group actions or if any of the selected services has the gray status. To make the Update configuration button available for group actions, clear the check boxes next to the KUMA Core service and the services with the gray status.

- Restart

To perform an action with an individual service, right-click the service to display its context menu. The following actions are available:

- Reset certificate

- Delete

- Download log

If you want to receive detailed information, enable the Debug mode in the service settings.

- Copy service ID

You need this ID to install, restart, stop, or delete the service.

- Go to Events

- Go to active lists

- Go to context tables

- Go to partitions

To change a service, select a service under Resources → Active services. This opens a window with a resource set based on which the service was created. You can edit the settings of the resource set and save your changes. To apply the saved changes, restart the service.

If, when changing the settings of a collector resource set, you change or delete conversions in a normalizer connected to it, the edits will not be saved, and the normalizer itself may be corrupted. If you need to modify conversions in a normalizer that is already part of a service, the changes must be made directly to the normalizer under Resources → Normalizers in the web interface.

Services tools

This section describes the tools for working with services available in the Resources → Active services section of the KUMA web interface.

Getting service identifier

The service identifier is used to bind parts of the service residing within KUMA and installed in the network infrastructure into a single complex. An identifier is assigned to a service when it is created in KUMA, and is then used when installing the service to the server.

To get the identifier of a service:

- Log in to the KUMA web interface and open Resources → Active services.

- Select the check box next to the service whose ID you want to obtain, and click Copy ID.

The identifier of the service will be copied to the clipboard. For instance, this ID can be used to install the service on a server.

Stopping, starting, checking status of the service

While managing KUMA, you may need to perform the following operations.

- Temporarily stop the service. For example, when restoring the Core from backup, or to edit service settings related to the operating system.

- Start the service.

- Check the status of the service.

The table below lists commands that may be useful while managing KUMA.

Commands for stopping, starting, and checking the status of a service

| Service | Stop service | Start service | Check the status of the service |
|---|---|---|---|
| Core | | | |
| Services with an ID: | | | |
| Services without an ID: | | | |
| Windows agents | To stop an agent service: 1. Copy the agent ID in the KUMA web interface. 2. Connect to the host on which you want to stop the KUMA agent service. 3. Run PowerShell as an account that has administrative privileges. 4. Run the following command in PowerShell: | To start an agent service: 1. Copy the agent ID in the KUMA web interface. 2. Connect to the host on which you want to start the KUMA agent service. 3. Run PowerShell as an account that has administrative privileges. 4. Run the following command in PowerShell: | To view the status of an agent service: 1. In Windows, go to the Start → Services menu, and in the list of services, double-click the relevant KUMA agent. 2. In the window that opens, view the status of the agent in the Service status field. |

Restarting the service

To restart the service:

- Log in to the KUMA web interface and open Resources → Active services.

- Select the check box next to the service and select the necessary option:

- Update configuration—perform a hot update of a running service configuration. For example, you can change the field mapping settings or the destination point settings this way.

- Restart—stop a service and start it again. This option is used to modify the port number or connector type.

Restarting KUMA agents:

- KUMA Windows Agent can be restarted as described above only if it is running on a remote computer. If the service on the remote computer is inactive, you will receive an error when trying to restart from KUMA. In that case you must restart KUMA Windows Agent service on the remote Windows machine. For information on restarting Windows services, refer to the documentation specific to the operating system version of your remote Windows computer.

- KUMA Agent for Linux stops when this option is used. To start the agent again, you must execute the command that was used to start it.

- Reset certificate—remove certificates that the service uses for internal communication. This option may not be used to renew the Core certificate. To renew KUMA Core certificates, they must be reissued.

Special considerations for deleting Windows agent certificates:

- If the agent has the green status and you select Reset certificate, KUMA deletes the current certificate and creates a new one, and the agent continues working with the new certificate.

- If the agent has the red status and you select Reset certificate, KUMA generates an error that the agent is not running. In the %PROGRAMDATA%\Kaspersky Lab\KUMA\agent\<agent ID>\certificates folder, manually delete the internal.cert and internal.key files and start the agent manually. When the agent starts, a new certificate is created automatically.

Special considerations for deleting Linux agent certificates:

- Regardless of the agent status, apply the Reset certificate option in the web interface to delete the certificate in the databases.

- In the agent installation directory, /opt/kaspersky/agent/<Agent ID>/certificates, manually delete the internal.cert and internal.key files.

- Since the Reset certificate option stops the agent, to continue its operation, start the agent manually. When the agent starts, a new certificate is created automatically.

Deleting the service

Before deleting the service, get its ID. The ID is required to remove the service from the server.

To remove a service in the KUMA web interface:

- Log in to the KUMA web interface and open Resources → Active services.

- Select the check box next to the service you want to delete, and click Delete.

A confirmation window opens.

- Click OK.

The service has been deleted from KUMA.

To remove a service from the server, run the following command:

sudo /opt/kaspersky/kuma/kuma <collector/correlator/storage> --id <service ID> --uninstall

The service has been deleted from the server.
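If the removal is scripted, the command line above can be assembled programmatically. The following is a minimal sketch, not part of KUMA: the helper name `build_uninstall_command` is hypothetical, the list of service kinds is taken from the command template above, and the ID in the usage example is a made-up placeholder.

```python
import shlex
from typing import List

# Service kinds and binary path are taken from the command template in the text.
SERVICE_KINDS = {"collector", "correlator", "storage"}
KUMA_BINARY = "/opt/kaspersky/kuma/kuma"


def build_uninstall_command(kind: str, service_id: str) -> List[str]:
    """Return the argv that removes a service from the server (hypothetical helper)."""
    if kind not in SERVICE_KINDS:
        raise ValueError(f"unsupported service kind: {kind!r}")
    if not service_id.strip():
        raise ValueError("service ID must not be empty")
    return ["sudo", KUMA_BINARY, kind, "--id", service_id, "--uninstall"]


if __name__ == "__main__":
    # Placeholder ID for illustration only; copy the real ID from the web interface.
    argv = build_uninstall_command("collector", "00000000-0000-0000-0000-000000000000")
    print(shlex.join(argv))
```

Returning an argument list rather than a single string lets the caller pass it to a process launcher without additional shell quoting.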

Partitions window

If the storage service was created and installed, you can view its partitions in the Partitions table.

To open the Partitions table:

- Log in to the KUMA web interface and open Resources → Active services.

- Select the check box next to the relevant storage and click Go to partitions.

The Partitions table opens.

The table has the following columns:

- Tenant—the name of the tenant that owns the stored data.

- Created—partition creation date.

- Space—the name of the space.

- Size—the size of the space.

- Events—the number of stored events.

- Transfer to cold storage—the date when data will be migrated from the ClickHouse clusters to cold storage disks.

- Expires—the date when the partition expires. After this date, the partition and the events it contains are no longer available.

You can delete partitions.

To delete a partition:

- Open the Partitions table (see above).

- Open the drop-down list to the left of the required partition.

- Select Delete.

A confirmation window opens.

- Click OK.

The partition has been deleted. Audit event partitions cannot be deleted.

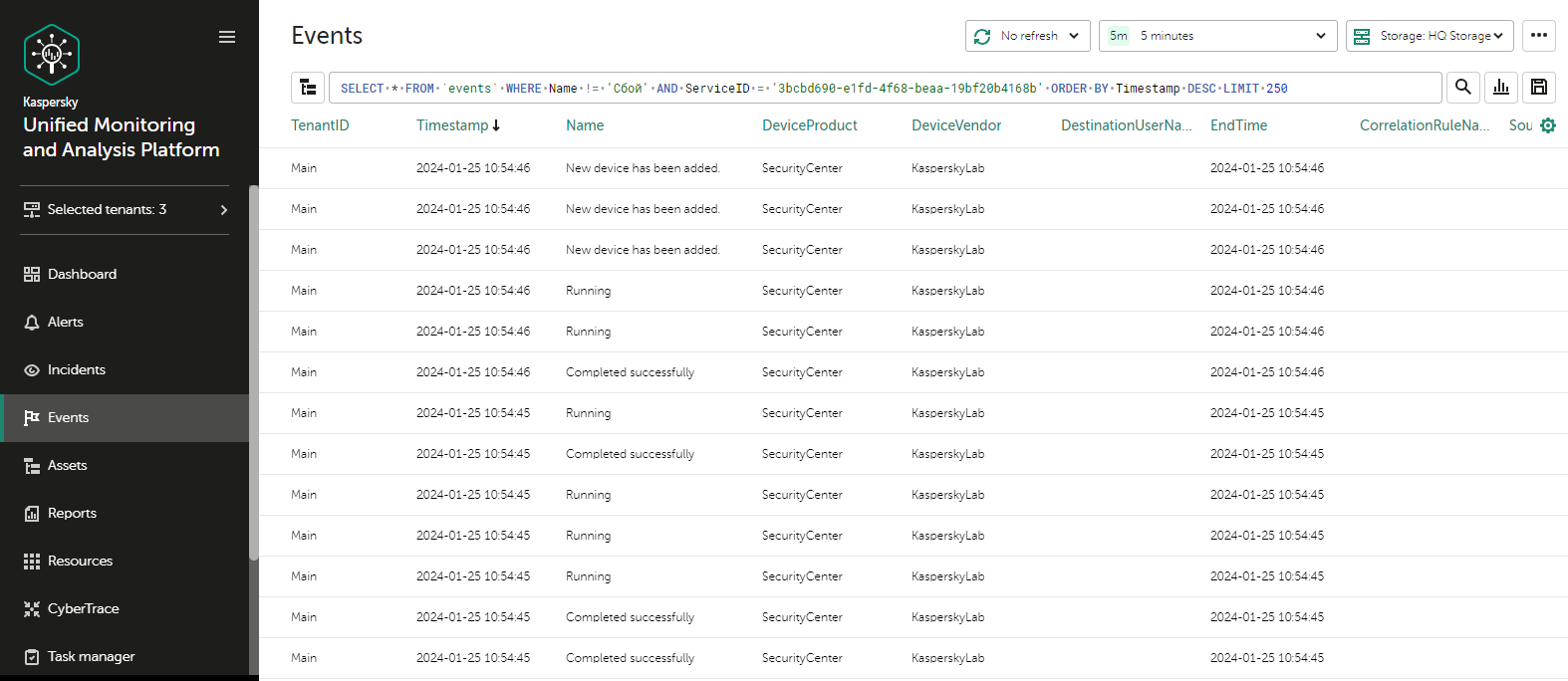

Page topSearching for related events

You can search for events processed by the Correlator or the Collector services.

To search for events related to the Correlator or the Collector service:

- Log in to the KUMA web interface and open Resources → Active services.

- Select the check box next to the required correlator or collector and click Go to Events.

This opens a new browser tab with the KUMA Events section open.

- To find events, click the icon.

A table with events selected by the search expression ServiceID = <ID of the selected service> is displayed.

Event search results

When searching for events, you may encounter the following shard unavailability error:

Code: 279. DB::NetException: All connection tries failed. Log: \\n\\nTimeout exceeded while connecting to socket (host.example.com:port, connection timeout 1000 ms)\\nTimeout exceeded while connecting to socket (host.example.com:port, connection timeout 1000 ms)\\nTimeout exceeded while connecting to socket (host.example.com:port, connection timeout 1000 ms)\\n\\n: While executing Remote. (ALL_CONNECTION_TRIES_FAILED) (version 23.8.8.207)\\n\"}",

In this case, you need to override the ClickHouse configuration in storage settings.

To override the ClickHouse configuration:

- In the KUMA web interface, in the Resources → Storages section, click the storage resource that you want to edit.

This opens the Edit storage window.

- To skip unavailable shards when searching, insert the following lines into the ClickHouse configuration override field:

<profiles>

<default>

<skip_unavailable_shards>1</skip_unavailable_shards>

</default>

</profiles>

- To apply the ClickHouse configuration, click Save.

- Restart the storage services that depend on this resource.

This resolves the shard unavailability error, and you can proceed to search for events processed by a particular correlator or collector.
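Before pasting the override into the web interface, it can be useful to confirm that the fragment is well-formed XML and actually enables the setting. The sketch below is an illustration only: the function name is hypothetical, and it checks nothing beyond the structure shown in the steps above.

```python
import xml.etree.ElementTree as ET

# The fragment from the procedure above.
OVERRIDE = """
<profiles>
    <default>
        <skip_unavailable_shards>1</skip_unavailable_shards>
    </default>
</profiles>
"""


def skip_unavailable_shards_enabled(override_xml: str) -> bool:
    """Return True if the fragment sets skip_unavailable_shards to 1 in the default profile.

    Raises xml.etree.ElementTree.ParseError if the fragment is not well-formed.
    """
    root = ET.fromstring(override_xml.strip())
    if root.tag != "profiles":
        return False
    value = root.findtext("default/skip_unavailable_shards")
    return value is not None and value.strip() == "1"
```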

Service resource sets

A service resource set is a type of resource, a KUMA component that holds the settings based on which KUMA services are created and operate. Resource sets for services are collections of resources.

Any resources added to a resource set must be owned by the same tenant that owns the created resource set. An exception is the shared tenant, whose owned resources can be used in the sets of resources of other tenants.

Resource sets for services are displayed in the Resources → <Resource set type for the service> section of the KUMA web interface. Available types:

- Collectors

- Correlators

- Storages

- Agents

When you select the required type, a table opens with the available sets of resources for services of this type. The resource table contains the following columns:

- Name—the name of a resource set. Can be used for searching and sorting.

- Updated—the date and time of the last update of the resource set. Can be used for sorting.

- Created by—the name of the user who created the resource set.

- Description—the description of the resource set.

Creating a storage

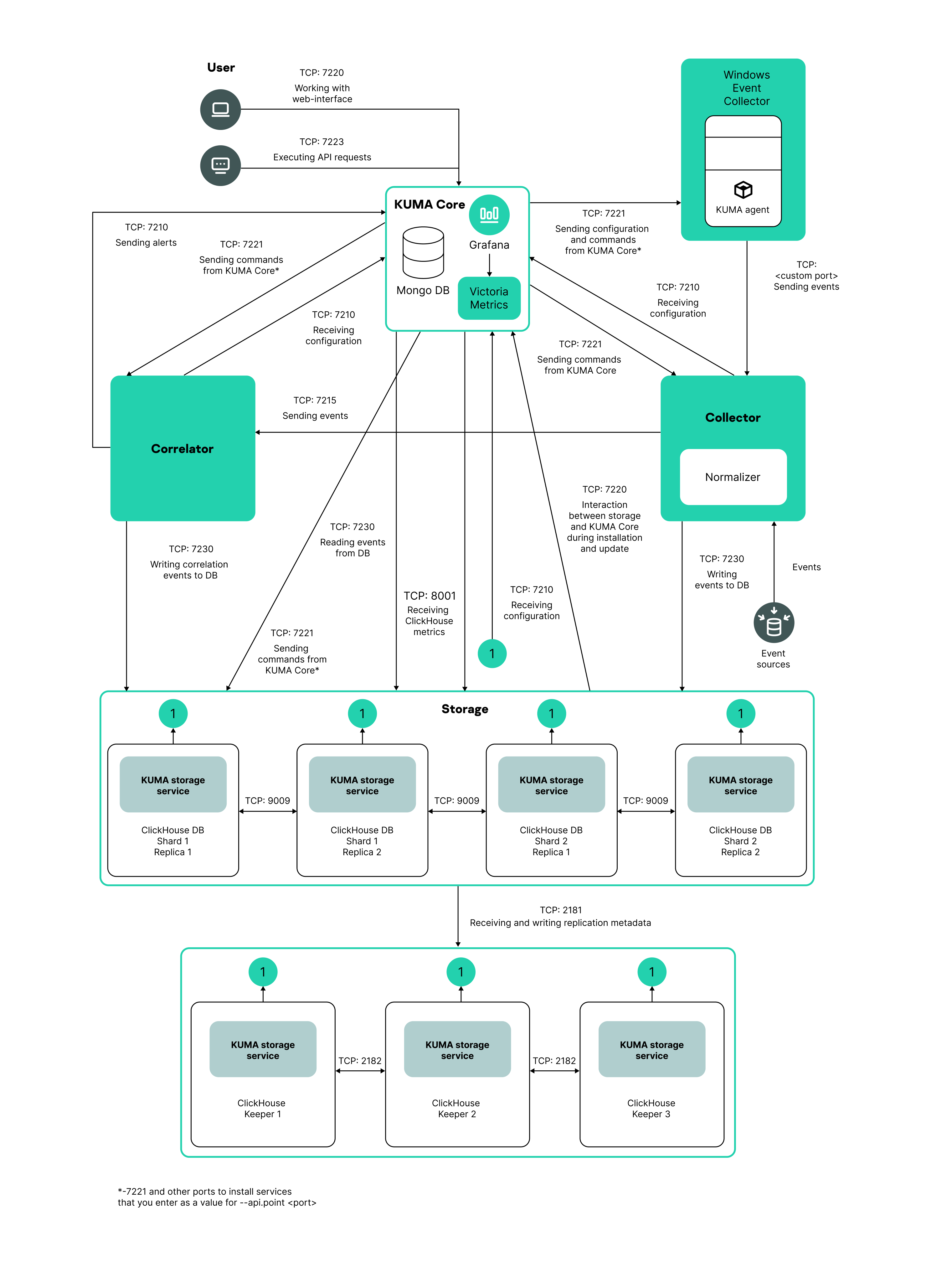

A storage consists of two parts: one part is created inside the KUMA web interface, and the other part is installed on network infrastructure servers intended for storing events. The server part of a KUMA storage consists of ClickHouse nodes collected into a cluster. ClickHouse clusters can be supplemented with cold storage disks.

For each ClickHouse cluster, a separate storage must be installed.

Prior to storage creation, carefully plan the cluster structure and deploy the necessary network infrastructure. When choosing a ClickHouse cluster configuration, consider the specific event storage requirements of your organization.

We recommend using the ext4 file system.

A storage is created in several steps:

- Creating a resource set for a storage in the KUMA web interface

- Creating a storage service in the KUMA web interface

- Installing storage nodes in the network infrastructure

When creating storage cluster nodes, verify the network connectivity of the system and open the ports used by the components.

If the storage settings are changed, the service must be restarted.

ClickHouse cluster structure

A ClickHouse cluster is a logical group of devices that possess all accumulated normalized KUMA events. It consists of one or more logical shards.

A shard is a logical group of devices that possess a specific portion of all normalized events accumulated in the cluster. It consists of one or more replicas. Increasing the number of shards lets you do the following:

- Accumulate more events by increasing the total number of servers and disk space.

- Absorb a larger stream of events by distributing the load associated with an influx of new events.

- Reduce the time taken to search for events by distributing search zones among multiple devices.

A replica is a device that is a member of a logical shard and possesses a single copy of that shard's data. If multiple replicas exist, it means multiple copies exist (the data is replicated). Increasing the number of replicas lets you do the following:

- Improve high availability.

- Distribute the total load related to data searches among multiple machines (although it's best to increase the number of shards for this purpose).

A keeper is a device that participates in coordination of data replication at the whole cluster level. At least one device per cluster must have this role. The recommended number of the devices with this role is 3. The number of devices involved in coordinating replication must be an odd number. The keeper and replica roles can be combined in one machine.

ClickHouse cluster node settings

Prior to storage creation, carefully plan the cluster structure and deploy the necessary network infrastructure. When choosing a ClickHouse cluster configuration, consider the specific event storage requirements of your organization.

When creating ClickHouse cluster nodes, verify the network connectivity of the system and open the ports used by the components.

For each node of the ClickHouse cluster, you need to specify the following settings:

- Fully qualified domain name (FQDN)—a unique address to access the node. Specify the entire FQDN, for example, kuma-storage.example.com.

- Shard, replica, and keeper IDs—the combination of these settings determines the position of the node in the ClickHouse cluster structure and the node role.

Node roles

The roles of the nodes depend on the specified settings:

- shard, replica, keeper—the node participates in the accumulation and search of normalized KUMA events and helps coordinate data replication at the cluster-wide level.

- shard, replica—the node participates in the accumulation and search of normalized KUMA events.

- keeper—the node does not accumulate normalized events, but helps coordinate data replication at the cluster-wide level. Dedicated keepers must be specified at the beginning of the list in the Resources → Storages → <Storage> → Basic settings → ClickHouse cluster nodes section.

ID requirements:

- If multiple shards are created in the same cluster, the shard IDs must be unique within this cluster.

- If multiple replicas are created in the same shard, the replica IDs must be unique within this shard.

- The keeper IDs must be unique within the cluster.

Example of ClickHouse cluster node IDs:

- shard 1, replica 1, keeper 1;

- shard 1, replica 2;

- shard 2, replica 1;

- shard 2, replica 2, keeper 3;

- shard 2, replica 3;

- keeper 2.
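The ID requirements above can be checked mechanically before the nodes are entered in the web interface. The following is a minimal sketch under stated assumptions: the `Node` type and `validate_nodes` function are hypothetical, the host names in the usage example are invented, and the rule that shard and replica IDs are always set together is inferred from the list of roles rather than stated explicitly. Shards are logical groups, so shard ID uniqueness cannot be verified per node and is not checked here. The odd-number rule for keepers comes from the description of the keeper role.

```python
from collections import Counter
from typing import List, NamedTuple, Optional


class Node(NamedTuple):
    """One ClickHouse cluster node (hypothetical structure for illustration)."""
    fqdn: str
    shard: Optional[int] = None
    replica: Optional[int] = None
    keeper: Optional[int] = None


def validate_nodes(nodes: List[Node]) -> List[str]:
    """Return a list of problems; an empty list means the IDs are consistent."""
    problems = []

    for node in nodes:
        # Assumption: a node either has both shard and replica IDs or neither.
        if (node.shard is None) != (node.replica is None):
            problems.append(f"{node.fqdn}: shard and replica IDs must be set together")
        if node.shard is None and node.replica is None and node.keeper is None:
            problems.append(f"{node.fqdn}: no role assigned")

    # Replica IDs must be unique within a shard.
    pairs = Counter(
        (n.shard, n.replica) for n in nodes if n.shard is not None and n.replica is not None
    )
    for (shard, replica), count in pairs.items():
        if count > 1:
            problems.append(f"shard {shard}: replica ID {replica} is used {count} times")

    # Keeper IDs must be unique within the cluster.
    keepers = Counter(n.keeper for n in nodes if n.keeper is not None)
    for keeper, count in keepers.items():
        if count > 1:
            problems.append(f"keeper ID {keeper} is used {count} times")

    # At least one keeper is required, and the number of keepers must be odd.
    keeper_nodes = sum(keepers.values())
    if keeper_nodes == 0:
        problems.append("at least one node must have the keeper role")
    elif keeper_nodes % 2 == 0:
        problems.append(f"number of keepers must be odd, got {keeper_nodes}")

    return problems


# The example cluster from the list above, with invented host names.
EXAMPLE = [
    Node("node1.example.com", shard=1, replica=1, keeper=1),
    Node("node2.example.com", shard=1, replica=2),
    Node("node3.example.com", shard=2, replica=1),
    Node("node4.example.com", shard=2, replica=2, keeper=3),
    Node("node5.example.com", shard=2, replica=3),
    Node("node6.example.com", keeper=2),
]
```

Calling `validate_nodes(EXAMPLE)` returns an empty list, because the example has unique replica IDs within each shard and three keepers with distinct IDs.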

Cold storage of events

In KUMA, you can configure the migration of legacy data from a ClickHouse cluster to the cold storage. Cold storage can be implemented using the local disks mounted in the operating system or the Hadoop Distributed File System (HDFS). Cold storage is enabled when at least one cold storage disk is specified. If multiple storages are used, a cold storage disk or an HDFS disk must be mounted at the path specified in the storage configuration on each node with data. If a cold storage disk is not configured and the server runs out of disk space in hot storage, the storage service is stopped. If both hot storage and cold storage are configured, and space runs out on the cold storage disk, the KUMA storage service is stopped. We recommend avoiding such situations by adding custom event storage conditions in hot storage.

Cold storage disks can be added or removed. If you have added multiple cold storage disks, data is written to them in a round-robin manner. If data to be written to disk would take up more space than is available on that disk, this data and all subsequent data is written round-robin to the next cold storage disks. If you added only two cold storage disks, the data is written to the drive that has free space left.

After changing the cold storage settings, the storage service must be restarted. If the service does not start, the reason is specified in the storage log.

If the cold storage disk specified in the storage settings has become unavailable (for example, out of order), this may lead to errors in the operation of the storage service. In this case, recreate a disk with the same path (for local disks) or the same address (for HDFS disks) and then delete it from the storage settings.

Rules for moving the data to the cold storage disks

You can configure the storage conditions for events in hot storage of the ClickHouse cluster by setting a limit based on retention time or on maximum storage size. When cold storage is used, every 15 minutes and after each Core restart, KUMA checks if the specified storage conditions are satisfied:

- KUMA gets the partitions for the storage being checked and groups the partitions by cold storage disks and spaces.

- For each space, KUMA checks whether the specified storage condition is satisfied.

- If the condition is satisfied (for example, if the space contains events that exceed their retention time, or if the size of the storage has reached or exceeded the limit specified in the condition), KUMA transfers all partitions with the oldest date to cold storage disks or deletes these partitions if no cold storage disk is configured or if it is configured incorrectly. This action is repeated while the configured storage condition remains satisfied in the space; for example, if after deleting partitions for a date, the storage size still exceeds the maximum size specified in the condition.

KUMA generates audit events when data transfer starts and ends, or when data is removed.

- If retention time is configured in KUMA, whenever partitions are transferred to cold storage disks, it is checked whether the configured conditions are satisfied on the disk. If events are found on the disk that have been stored for longer than the Event retention time, which is counted from the moment the events were received in KUMA, the solution deletes these events or all partitions for the oldest date.

KUMA generates audit events when it deletes data.

If the ClickHouse cluster disks are 95% full, the biggest partitions are automatically moved to the cold storage disks. This can happen more often than once per hour.

During data transfer, the storage service remains operational, and its status stays green in the Resources → Active services section of the KUMA web interface. When you hover over the status icon, a message is displayed about the data transfer. When a cold storage disk is removed, the storage service has the yellow status.
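The repeated check described in the rules above can be illustrated with a short simulation. This is a simplified sketch, not KUMA's implementation: the `Partition` type and the `enforce_size_condition` function are hypothetical, only a size-based condition for a single space is modeled, and audit events, incorrectly configured disks, and the 95% rule are left out.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import List, Tuple


@dataclass(frozen=True)
class Partition:
    """A hot storage partition in one space (hypothetical structure)."""
    day: date
    size_gb: int


def enforce_size_condition(
    partitions: List[Partition], max_size_gb: int, cold_storage_configured: bool
) -> Tuple[List[Partition], List[Partition], List[Partition]]:
    """Return (kept, moved_to_cold, deleted) after applying a size-based condition."""
    by_day = defaultdict(list)
    for partition in partitions:
        by_day[partition.day].append(partition)

    remaining_days = sorted(by_day)
    total = sum(p.size_gb for p in partitions)
    moved, deleted = [], []

    # Repeat while the condition is still satisfied: size has reached or exceeded the limit.
    while remaining_days and total >= max_size_gb:
        oldest = remaining_days.pop(0)
        batch = by_day[oldest]  # all partitions with the oldest date
        total -= sum(p.size_gb for p in batch)
        # Without a cold storage disk, the partitions are deleted instead of moved.
        (moved if cold_storage_configured else deleted).extend(batch)

    kept = [p for day in remaining_days for p in by_day[day]]
    return kept, moved, deleted
```

For example, with three daily partitions of 40 GB each and a 100 GB limit, one pass removes the oldest day and leaves 80 GB in hot storage, after which the condition is no longer satisfied.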

Special considerations for storing and accessing events

- When using HDFS disks for cold storage, protect your data in one of the following ways:

- Configure a separate physical interface in the VLAN, where only HDFS disks and the ClickHouse cluster are located.

- Configure network segmentation and traffic filtering rules that exclude direct access to the HDFS disk or interception of traffic to the disk from ClickHouse.

- Events located in the ClickHouse cluster and on the cold storage disks are equally available in the KUMA web interface. For example, when you search for events or view events related to alerts.

- You can disable the storage of events or audit events on cold storage disks. To do so, specify the following in storage settings:

- If you do not want to store events on cold storage disks, do one of the following:

- If in the Storage condition options field, you have a gigabyte or percentage based storage condition selected, in the Event retention time field, specify 0.

- If in the Storage condition options field, you have a storage condition in days, in the Event retention time field, specify the same number of days as in the Storage condition options field.

- If you do not want to store audit events on cold storage disks, in the Audit cold retention period field, specify 0 (days).

Special considerations for using HDFS disks

- Before connecting HDFS disks, create directories for each node of the ClickHouse cluster on them in the following format: <HDFS disk host>/<shard ID>/<replica ID>. For example, if a cluster consists of two nodes containing two replicas of the same shard, the following directories must be created:

- hdfs://hdfs-example-1:9000/clickhouse/1/1/

- hdfs://hdfs-example-1:9000/clickhouse/1/2/

Events from the ClickHouse cluster nodes are migrated to the directories with names containing the IDs of their shard and replica. If you change these node settings without creating a corresponding directory on the HDFS disk, events may be lost during migration.

- HDFS disks added to storage operate in the JBOD mode. This means that if one of the disks fails, access to the storage will be lost. When using HDFS, take high availability into account and configure RAID, as well as storage of data from different replicas on different devices.

- The speed of event recording to HDFS is usually lower than the speed of event recording to local disks. The speed of accessing events in HDFS, as a rule, is significantly lower than the speed of accessing events on local disks. When using local disks and HDFS disks at the same time, the data is written to them in turn.

- HDFS is used only as distributed file data storage of ClickHouse. Compression mechanisms of ClickHouse, not HDFS, are used to compress data.

- The ClickHouse server must have write access to the corresponding HDFS storage.
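The directory naming rule for HDFS disks can be expressed as a small helper that lists the paths to create for a given set of nodes. This is a sketch only: the function name `hdfs_directories` is hypothetical, and it produces the path strings without creating anything on the HDFS disk.

```python
from typing import Iterable, List, Tuple


def hdfs_directories(base_url: str, nodes: Iterable[Tuple[int, int]]) -> List[str]:
    """Return one directory per (shard ID, replica ID) pair under the HDFS base address."""
    base = base_url.rstrip("/")
    # Sorting and de-duplicating makes the output stable regardless of input order.
    return [f"{base}/{shard}/{replica}/" for shard, replica in sorted(set(nodes))]


# Two replicas of the same shard, as in the example from the text.
for path in hdfs_directories("hdfs://hdfs-example-1:9000/clickhouse", [(1, 1), (1, 2)]):
    print(path)
```

Generating the list from the same shard and replica IDs that are entered in the storage settings helps keep the directories and the node settings in step, which matters because events may be lost during migration if a matching directory is missing.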

Removing cold storage disks

Before physically disconnecting cold storage disks, remove these disks from the storage settings.

To remove a disk from the storage settings:

- In the KUMA web interface, under Resources → Storages, select the relevant storage.

This opens the storage.

- In the window, in the Disks for cold storage section, in the required disk's group of settings, click Delete disk.

Data from the removed disk is automatically migrated to other cold storage disks or, if there are no such disks, to the ClickHouse cluster. While data is being migrated, the status icon of the storage turns yellow and an hourglass icon is displayed. Audit events are generated when data transfer starts and ends.

- After event migration is complete, the disk is automatically removed from the storage settings. It can now be safely disconnected.

Removed disks can still contain events. If you want to delete them, you can manually delete the data partitions using the DROP PARTITION command.

If the cold storage disk specified in the storage settings has become unavailable (for example, out of order), this may lead to errors in the operation of the storage service. In this case, create a disk with the same path (for local disks) or the same address (for HDFS disks) and then delete it from the storage settings.

Detaching, archiving, and attaching partitions

If you want to optimize disk space and speed up queries in KUMA, you can detach data partitions in ClickHouse, archive partitions, or move partitions to a drive. If necessary, you can later reattach the partitions you need and perform data processing.

Detaching partitions

To detach partitions:

- Determine the shard whose replicas you want to detach the partition from.

- Get the partition ID using the following command:

sudo /opt/kaspersky/kuma/clickhouse/bin/client.sh -d kuma --multiline --query "SELECT partition, name FROM system.parts;" | grep 20231130

In this example, the command returns the partition ID for November 30, 2023.

- On each replica of the shard, detach the partition using the following command and specifying the partition ID:

sudo /opt/kaspersky/kuma/clickhouse/bin/client.sh -d kuma --multiline --query "ALTER TABLE events_local_v2 DETACH PARTITION ID '<partition ID>'"

As a result, the partition is detached on all replicas of the shard. Now you can move the data directory to a drive or archive the partition.
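Because the same command has to be run on every replica, it may be convenient to generate it rather than retype it. The sketch below only builds the argument list from the template shown above; the helper name `partition_command` is hypothetical, the character check on the partition ID is a conservative assumption rather than a documented format, and the ID in the usage example is a placeholder. The same template with ATTACH is used when reattaching a partition.

```python
import re
import shlex
from typing import List

# Client path, database, and table name are taken from the commands in the text.
CLIENT = "/opt/kaspersky/kuma/clickhouse/bin/client.sh"
TABLE = "events_local_v2"


def partition_command(action: str, partition_id: str) -> List[str]:
    """Return the argv for detaching or attaching a partition by ID (hypothetical helper)."""
    if action not in ("DETACH", "ATTACH"):
        raise ValueError(f"unsupported action: {action!r}")
    # Assumption: IDs consist of letters, digits, underscores, and hyphens only.
    # Rejecting anything else keeps quotes out of the generated query.
    if not re.fullmatch(r"[0-9A-Za-z_-]+", partition_id):
        raise ValueError(f"unexpected characters in partition ID: {partition_id!r}")
    query = f"ALTER TABLE {TABLE} {action} PARTITION ID '{partition_id}'"
    return ["sudo", CLIENT, "-d", "kuma", "--multiline", "--query", query]


if __name__ == "__main__":
    # Placeholder ID for illustration; use the ID returned by the query on system.parts.
    print(shlex.join(partition_command("DETACH", "20231130")))
```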

Archiving partitions

To archive detached partitions:

- Find the detached partition in the disk subsystem of the server:

sudo find /opt/kaspersky/kuma/clickhouse/data/ -name <ID of the detached partition>\*

- Change to the 'detached' directory that contains the detached partition and, while in that directory, create the archive. Because cd is a shell builtin and cannot be run through sudo, run both commands in a single root shell:

sudo sh -c "cd <path to the 'detached' directory containing the detached partition> && zip -9 -r detached.zip *"

For example:

sudo sh -c "cd /opt/kaspersky/kuma/clickhouse/data/store/d5b/d5bdd8d8-e1eb-4968-95bd-d8d8e1eb3968/detached/ && zip -9 -r detached.zip *"

The partition is archived.

Attaching partitions

To attach archived partitions to KUMA:

- Increase the Retention period value.

KUMA deletes data based on the date specified in the Timestamp field, which records the time when the event is received, and based on the Retention period value that you set for the storage.

Before restoring archived data, make sure that the Retention period value overlaps the date in the Timestamp field. If this is not the case, the archived data will be deleted within 1 hour.

- Place the partition archive in the 'detached' directory of your storage and extract the archive:

sudo unzip detached.zip -d <path to the 'detached' directory>

For example:

sudo unzip detached.zip -d /opt/kaspersky/kuma/clickhouse/data/store/d5b/d5bdd8d8-e1eb-4968-95bd-d8d8e1eb3968/detached/

- Run the command to attach the partition:

sudo /opt/kaspersky/kuma/clickhouse/bin/client.sh -d kuma --multiline --query "ALTER TABLE events_local_v2 ATTACH PARTITION ID '<partition ID>'"

Repeat the steps of extracting the archive and attaching the partition on each replica of the shard.

As a result, the archived partition is attached and its events are again available for search.
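Before attaching an archived partition, it can help to check whether its events would still fall inside the retention window. The following Python sketch illustrates that check under stated assumptions: the function name survives_retention and its parameters are hypothetical and are not part of KUMA, and the comparison is a simplified model of the rule described above (the event Timestamp plus the Retention period must be later than the current time).

```python
from datetime import datetime, timedelta, timezone


def survives_retention(event_timestamp: datetime,
                       retention_days: int,
                       now: datetime) -> bool:
    """Return True if an event is still inside the retention window.

    Simplified model (assumption): an event is kept while
    event_timestamp + retention_days is later than `now`.
    """
    return event_timestamp + timedelta(days=retention_days) > now


# Illustrative values only.
now = datetime(2024, 6, 1, tzinfo=timezone.utc)
oldest_event = datetime(2023, 1, 15, tzinfo=timezone.utc)

# With a 365-day retention period the archived events are already expired,
# so the Retention period must be increased before attaching the partition.
print(survives_retention(oldest_event, 365, now))  # False
print(survives_retention(oldest_event, 600, now))  # True
```

If the check returns False for the oldest event in the archive, increase the Retention period first; otherwise the attached data is subject to deletion as described above.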

Creating a set of resources for a storage

In the KUMA web interface, a storage service is created based on the resource set for the storage.

To create a resource set for a storage in the KUMA web interface:

- In the KUMA web interface, under Resources → Storages, click Add storage.

This opens the Create storage window.

- On the Basic settings tab, in the Storage name field, enter a unique name for the service you are creating. The name must contain 1 to 128 Unicode characters.

- In the Tenant drop-down list, select the tenant that will own the storage.

- In the Tags drop-down list, select the tags for the resource set that you are creating.

The list includes all available tags created in the tenant of the resource and in the Shared tenant. You can find a tag in the list by typing its name in the field. If the tag you entered does not exist, you can press Enter or click Add to create it.

- You can optionally add up to 256 Unicode characters describing the service in the Description field.

- In the Storage condition options field, select an event storage condition in the ClickHouse cluster for the storage, which, when satisfied, will cause events to be transferred to cold storage disks or deleted if cold storage is not configured or is configured incorrectly. The condition is applied to the default space and to events from deleted spaces.

By default, ClickHouse moves events to cold storage disks or deletes them if more than 97% of the storage is full. KUMA also applies an additional 365 days storage condition when creating a storage. You can configure custom storage conditions for more stable performance of the storage.

To set the storage condition, do one of the following:

- If you want to limit the storage period for events, select Days from the drop-down list, and in the field, specify the maximum event storage period (in days) in the ClickHouse hot storage cluster.

After the specified period, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value is 1. The default value is 365.

- If you want to limit the maximum storage size, select GB from the drop-down list, and in the field, specify the maximum storage size in gigabytes.

When the storage reaches the specified size, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value and default value is 1.

- If you want to limit the storage size to a percentage of disk space that is available to the storage (according to VictoriaMetrics), select Percentage from the drop-down list, and in the field, specify the maximum storage size as a percentage of the available disk space. In this case, the condition can also be triggered when the disk space available to the storage is decreased.

When the storage reaches the specified percentage of disk space available to it, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. Possible values: 1 to 95. The default value is 80. If you want to use percentages for all storage spaces, the sum total of percentages in the conditions of all spaces may not exceed 95, but we recommend specifying a limit of at most 90% for the entire storage or for individual spaces.

We do not recommend specifying small percentage values because this increases the probability of data loss in the storage.

For [OOTB] Storage, the default event storage period is 2 days. If you want to use this storage, you can change its event storage condition if necessary.

- If you want to use an additional storage condition, click Add storage condition and specify an additional storage condition as described in step 6.

The maximum number of conditions is two, and you can combine only conditions of the following types:

- Days and storage size in GB

- Days and storage size as a percentage

If you want to delete a storage condition, click the X icon next to this condition.

- In the Audit retention period field, specify the period, in days, to store audit events. The minimum and default value is 365.

- If cold storage is required, specify the event storage term:

- Event retention time specifies the total KUMA event storage duration in days, counting from the moment when the event is received. When the specified period expires, events are automatically deleted from the cold storage disk. The default value is 0.

The event retention time is calculated as the sum of the event retention time in the ClickHouse hot storage cluster until the condition specified in the Storage condition options setting is triggered, and the event retention time on the cold storage disk. After one of storage conditions is triggered, the data partition for the earliest date is moved to the cold storage disk, and there it remains until the event retention time in KUMA expires.

Depending on the specified storage condition, the resulting retention time is as follows:

- If you specified a storage condition in days, the Event retention time must be strictly greater than the number of days specified in the storage condition. You can calculate the cold retention period for events as the Event retention time minus the number of days specified in the Storage condition options setting.

If you do not want to store events on the cold storage disk, you can specify the same number of days in the Event retention time field as in the storage condition.

- If you specified the storage condition in terms of disk size (absolute or percentage), the minimum value of the Event retention time is 1. The cold storage duration for events is calculated as Event retention time minus the number of days from the receipt of the event to triggering of the condition and the disk partition filling up, but until the condition is triggered, calculating an exact duration is impossible. In this case, we recommend specifying a relatively large value for Event retention time to avoid events being deleted.

If you do not want to store events on the cold storage disk, you can set Event retention time to 0.

- Audit cold retention period—the number of days to store audit events. The minimum value is 0.

The Event retention time and Audit cold retention period settings become available only after at least one cold storage disk has been added.

- If you want to change ClickHouse settings, in the ClickHouse configuration override field, paste the lines with settings from the ClickHouse configuration XML file /opt/kaspersky/kuma/clickhouse/cfg/config.xml. Specifying the root elements <yandex>, </yandex> is not required. Settings passed in this field are used instead of the default settings.

Example:

<merge_tree>

<parts_to_delay_insert>600</parts_to_delay_insert>

<parts_to_throw_insert>1100</parts_to_throw_insert>

</merge_tree>

- Use the Debug toggle switch to specify whether resource logging must be enabled. If you want to only log errors for all KUMA components, disable debugging. If you want to get detailed information in the logs, enable debugging.

- If necessary, in the ClickHouse cluster nodes section, add ClickHouse cluster nodes to the storage.

There can be multiple nodes. You can add nodes by clicking the Add node button or remove nodes by clicking the X icon of the relevant node.

Available settings:

- In the FQDN field, enter the fully qualified domain name of the node that you want to add. For example, kuma-storage-cluster1-server1.example.com.

- In the Shard ID, Replica ID, and Keeper ID fields, specify the role of the node in the ClickHouse cluster. The shard and keeper IDs must be unique within the cluster, and the replica ID must be unique within the shard. The following example shows how to populate the ClickHouse cluster nodes section for a storage with dedicated keepers in a distributed installation. You can adapt this example according to your needs.

Example:

ClickHouse cluster nodes

| FQDN | Shard ID | Replica ID | Keeper ID |
|---|---|---|---|
| kuma-storage-cluster1-server1.example.com | 0 | 0 | 1 |
| kuma-storage-cluster1server2.example.com | 0 | 0 | 2 |
| kuma-storage-cluster1server3.example.com | 0 | 0 | 3 |
| kuma-storage-cluster1server4.example.com | 1 | 1 | 0 |
| kuma-storage-cluster1server5.example.com | 1 | 2 | 0 |
| kuma-storage-cluster1server6.example.com | 2 | 1 | 0 |
| kuma-storage-cluster1server7.example.com | 2 | 2 | 0 |

- If necessary, in the Spaces section, add spaces to the storage to distribute the stored events.

There can be multiple spaces. You can add spaces by clicking the Add space button or remove spaces by clicking the X icon of the relevant space.

Available settings:

- In the Name field, specify a name for the space containing 1 to 128 Unicode characters.

- In the Storage condition options field, select an event storage condition in the ClickHouse cluster for the space, which, when satisfied, will cause events to be transferred to cold storage disks or deleted if cold storage is not configured or is configured incorrectly. KUMA applies the 365 days storage condition when a space is added.

To set the storage condition for a space, do one of the following:

- If you want to limit the storage period for events, select Days from the drop-down list, and in the field, specify the maximum event storage period (in days) in the ClickHouse hot storage cluster.

After the specified period, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value is 1. The default value is 365.

- If you want to limit the maximum storage space size, select GB from the drop-down list, and in the field, specify the maximum space size in gigabytes.

When the space reaches the specified size, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value and default value is 1.

- If you want to limit the space size to a percentage of disk space that is available to the storage (according to VictoriaMetrics), select Percentage from the drop-down list, and in the field, specify the maximum space size as a percentage of the size of the disk available to the storage. In this case, the condition can also be triggered when the disk space available to the storage is decreased.

When the space reaches the specified percentage of disk space available to the storage, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. Possible values: 1 to 95. The default value is 80. If you want to use percentages for all storage spaces, the sum total of percentages in the conditions of all spaces may not exceed 95, but we recommend specifying a limit of at most 90% for the entire storage or for individual spaces.

We do not recommend specifying small percentage values because this increases the probability of data loss in the storage.

When using size as the storage condition, you must ensure that the total size of all spaces specified in the storage conditions does not exceed the physical size of the storage, otherwise an error will be displayed when starting the service.

In storage conditions with a size limitation, use the same units of measure for all spaces of a storage (only gigabytes or only percentage values). Otherwise, if the condition is specified as a percentage for one space, and in gigabytes for another space, the storage may overflow due to mismatch of values, leading to data loss.

- If you want to make a space inactive if it is outdated and no longer relevant, select the Read only check box.

This prevents events from going into that space. To make the space active again, clear the Read only check box. This check box is cleared by default.

- If necessary, in the Event retention time field, specify the total KUMA event storage duration in days, counting from the moment when the event is received. When the specified period expires, events are automatically deleted from the cold storage disk. The default value is 0.

The event retention time is calculated as the sum of the event retention time in the ClickHouse hot storage cluster until the condition specified in the Storage condition options setting is triggered, and the event retention time on the cold storage disk. After one of storage conditions is triggered, the data partition for the earliest date is moved to the cold storage disk, and there it remains until the event retention time in KUMA expires.

Depending on the specified storage condition, the resulting retention time is as follows:

- If you specified a storage condition in days, the Event retention time must be strictly greater than the number of days specified in the storage condition. The cold storage duration for events is calculated as the Event retention time minus the number of days specified in the Storage condition options setting.

If you do not want to store events from this space on the cold storage disk, you can specify the same number of days in the Event retention time field as in the storage condition.

- If you specified the storage condition in terms of disk size (absolute or percentage), the minimum value of the Event retention time is 1. The cold storage duration for events is calculated as Event retention time minus the number of days from the receipt of the event to triggering of the condition and the disk partition filling up, but until the condition is triggered, calculating an exact duration is impossible. In this case, we recommend specifying a relatively large value for Event retention time to avoid events being deleted.

If you do not want to store events from this space on the cold storage disk, you can set Event retention time to 0.

The Event retention time setting becomes available only after adding at least one cold storage disk.

- In the Filter settings section, you can specify conditions to identify events that will be put into this space. In the Filter drop-down list, select an existing filter, or select Create new to create a new filter.

After the service is created, you can view and delete spaces in the storage resource settings.

There is no need to create a separate space for audit events. Events of this type (Type=4) are automatically placed in a separate Audit space with a storage term of at least 365 days. This space cannot be edited or deleted from the KUMA web interface.

- If necessary, in the Disks for cold storage section, add to the storage the disks where you want to transfer events from the ClickHouse cluster for long-term storage.

There can be multiple disks. You can add disks by clicking the Add disk button and remove them by clicking the Delete disk button.

Available settings:

- In the FQDN drop-down list, select the type of domain name of the disk you are connecting:

- Local—for the disks mounted in the operating system as directories.

- HDFS—for the disks of the Hadoop Distributed File System.

- In the Name field, specify the disk name. The name must contain 1 to 128 Unicode characters.

- If you select the Local domain name type for the disk, specify the absolute directory path of the mounted local disk in the Path field. The path must begin and end with a "/" character.

- If you select the HDFS domain name type for the disk, specify the path to HDFS in the Host field. For example, hdfs://hdfs1:9000/clickhouse/.

- Go to the Advanced settings tab and fill in the following fields:

- In the Buffer size field, enter the buffer size in bytes at which events must be sent to the database. The default value is 128 MB. No maximum value is configured. If the virtual machine has less free RAM than the specified Buffer size, KUMA sets the limit to 128 MB.

If the expected traffic to the storage service is greater than 1 Gbps, we recommend changing the Buffer size setting (in bytes) to the expected value and setting the Buffer flush interval to 2.

The value of the Buffer size setting can be determined empirically. If the Insert QPS metric of the storage is greater than 1 and you see a queue of requests building up, follow the recommendations for calculating and adjusting the Buffer size setting in the Insert QPS section of the Viewing KUMA metrics article.

- In the Maximum buffer size field, specify the maximum buffer size in bytes. The default value is 256 MB.

By default, events are written to the database once per second. If the EPS rises and events are registered more often than once per second, the buffer size is automatically increased. The buffer is increased up to three times the Buffer size value, but cannot exceed the Maximum buffer size value. When the load goes back to normal, the buffer size stays the same; there is no automatic reduction of buffer size. If the Maximum buffer size is less than or equal to the Buffer size, the buffer size does not change.

Restarting the storage service resets the increased buffer size to the value in the Buffer size field.

- In the Buffer flush interval field, enter the time in seconds for which KUMA waits for the buffer to fill up. If the buffer is not full, but the specified time has passed, KUMA sends events to the database. The default value is 1 second.

- In the Required memory size field, specify the minimum amount of free RAM in bytes. The default value is 128 MB.

If the node on which the storage is installed has less free RAM than this value, the database insert request returns an error, and the events are recorded later. Limiting the memory size prevents memory from filling up if, for any reason, the recording of events in the database is blocked and data begins to accumulate in memory.

- In the Disk buffer size limit field, enter a value in bytes. The disk buffer is used to temporarily store events that could not be sent for further processing or storage. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. The default value is 10 GB.

- Use the Disk buffer toggle switch to enable or disable the disk buffer. By default, the disk buffer is enabled.

- Use the Write to local database table toggle switch to enable or disable writing to the local database table. Writing is disabled by default.

If enabled, data is written only on the host on which the storage is located. We recommend using this functionality only if you have configured balancing on the collector and/or correlator: at step 6 (Routing), in the Advanced settings section, the URL selection policy field must be set to Round robin.

If you disable writing, the data is distributed across the shards of the cluster.

- If necessary, use the Debug toggle switch to enable logging of service operations.

- You can use the Create dump periodically toggle switch at the request of Technical Support to generate resource (CPU, RAM, etc.) utilization reports in the form of dumps.

- In the Dump settings field, you can specify the settings to be used when creating dumps. For instructions on filling out this field, please contact Technical Support.

The set of resources for the storage is created and is displayed under Resources → Storages. Now you can create a storage service.
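Several settings in this procedure follow simple arithmetic rules: the cold storage duration for a condition in days, the limit on the sum of percentage conditions across spaces, and the upper bound of automatic buffer growth. The Python sketch below restates those rules for planning purposes. It is an illustration under stated assumptions, not KUMA code: all function names are hypothetical, and treating 1 MB as 1024 * 1024 bytes is an assumption, because the procedure does not say which unit definition KUMA uses.

```python
MB = 1024 * 1024  # assumption: binary megabytes


def cold_retention_days(event_retention_days: int, hot_days: int) -> int:
    """Cold storage duration when the storage condition is given in days.

    Event retention time minus the days in the storage condition.
    Equal values mean that events are not kept on the cold storage disk.
    """
    if event_retention_days < hot_days:
        raise ValueError("Event retention time is less than the storage condition")
    return event_retention_days - hot_days


def percentages_valid(space_percentages: list[int]) -> bool:
    """Each percentage condition is 1..95 and their sum does not exceed 95."""
    return (all(1 <= p <= 95 for p in space_percentages)
            and sum(space_percentages) <= 95)


def max_effective_buffer(buffer_size: int, maximum_buffer_size: int) -> int:
    """Upper bound of automatic buffer growth, in bytes.

    The buffer grows up to three times Buffer size but never beyond
    Maximum buffer size; it does not grow at all if Maximum buffer size
    is less than or equal to Buffer size.
    """
    if maximum_buffer_size <= buffer_size:
        return buffer_size
    return min(3 * buffer_size, maximum_buffer_size)


print(cold_retention_days(400, 365))                    # 35
print(percentages_valid([40, 30, 20]))                  # True
print(percentages_valid([50, 50]))                      # False
print(max_effective_buffer(128 * MB, 256 * MB) // MB)   # 256
print(max_effective_buffer(128 * MB, 1024 * MB) // MB)  # 384
```

With the default values (Buffer size 128 MB, Maximum buffer size 256 MB), the cap is set by Maximum buffer size rather than by the threefold growth limit.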

Creating a storage service in the KUMA web interface

After a resource set is created for a storage, you can proceed to create a storage service in KUMA.

To create a storage service in the KUMA web interface:

- In the KUMA web interface, under Resources → Active services, click Add service.

- In the opened Choose a service window, select the resource set that you just created for the storage and click Create service.

The storage service is created in the KUMA web interface and is displayed under Resources → Active services. Now storage services must be installed on each node of the ClickHouse cluster by using the service ID.

Installing a storage in the KUMA network infrastructure

To install a storage:

- Log in to the server where you want to install the service.

- Execute the following command:

sudo /opt/kaspersky/kuma/kuma storage --core https://<KUMA Core server FQDN>:<port used by KUMA Core for internal communication (port 7210 by default)> --id <service ID copied from the KUMA web interface> --install

Example:

sudo /opt/kaspersky/kuma/kuma storage --core https://kuma.example.com:7210 --id XXXXX --install

When deploying several KUMA services on the same host, during the installation process you must specify unique ports for each component using the --api.port <port> parameter. The following setting values are used by default: --api.port 7221.

- Repeat steps 1–2 for each storage node.

Only one storage service can be installed on a host.

The storage is installed.
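When the same command has to be prepared for several storage nodes, it can be convenient to generate it instead of typing it. The following Python sketch assembles the installation command from the parameters described above. The function storage_install_command is hypothetical and not part of KUMA; it only builds a string and uses the flags shown in this section (--core, --id, --api.port, --install). Placing --api.port before --install is an assumption about acceptable flag order.

```python
import shlex
from typing import Optional


def storage_install_command(core_fqdn: str,
                            service_id: str,
                            core_port: int = 7210,
                            api_port: Optional[int] = None) -> str:
    """Build the storage installation command line as a string."""
    args = [
        "sudo", "/opt/kaspersky/kuma/kuma", "storage",
        "--core", f"https://{core_fqdn}:{core_port}",
        "--id", service_id,
    ]
    # Only needed when several KUMA services share one host.
    if api_port is not None:
        args += ["--api.port", str(api_port)]
    args.append("--install")
    # shlex.join quotes any argument that is unsafe for the shell.
    return shlex.join(args)


print(storage_install_command("kuma.example.com", "XXXXX"))
print(storage_install_command("kuma.example.com", "XXXXX", api_port=7231))
```

The first call reproduces the example command from this section; the second adds a non-default API port (7231 is an arbitrary illustrative value).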

Creating a correlator

A correlator consists of two parts: one part is created inside the KUMA web interface, and the other part is installed on the network infrastructure server intended for processing events.

Actions in the KUMA web interface

A correlator is created in the KUMA web interface by using the Installation Wizard, which combines the necessary resources into a resource set for the correlator. Upon completion of the Wizard, the service is automatically created based on this resource set.

To create a correlator in the KUMA web interface:

Start the Correlator Installation Wizard:

- In the KUMA web interface, under Resources, click Create correlator.

- In the KUMA web interface, under Resources → Correlators, click Add correlator.

As a result of completing the steps of the Wizard, a correlator service is created in the KUMA web interface.

A resource set for a correlator includes the following resources:

- Correlation rules

- Enrichment rules (if required)

- Response rules (if required)

- Destinations (normally one for sending events to a storage)

These resources can be prepared in advance, or you can create them while the Installation Wizard is running.

Actions on the KUMA correlator server

If you are installing the correlator on a server that you intend to use for event processing, you need to run the command displayed at the last step of the Installation Wizard on the server. When installing, you must specify the identifier automatically assigned to the service in the KUMA web interface, as well as the port used for communication.

Testing the installation

After creating a correlator, it is recommended to make sure that it is working correctly.

Starting the Correlator Installation Wizard

To start the Correlator Installation Wizard:

- In the KUMA web interface, under Resources, click Add correlator.

- In the KUMA web interface, under Resources → Correlators, click Add correlator.

Follow the instructions of the Wizard.

Aside from the first and last steps of the Wizard, the steps of the Wizard can be performed in any order. You can switch between steps by using the Next and Previous buttons, as well as by clicking the names of the steps in the left side of the window.

After the Wizard completes, a resource set for the correlator is created in the KUMA web interface under Resources → Correlators, and a correlator service is added under Resources → Active services.

Step 1. General correlator settings

This is a required step of the Installation Wizard. At this step, you specify the main settings of the correlator: the correlator name and the tenant that will own it.

To specify the general settings of the correlator:

- On the Basic settings tab, fill in the following fields:

- In the Name field, enter a unique name for the service you are creating. The name must contain 1 to 128 Unicode characters.

- In the Tenant drop-down list, select the tenant that will own the correlator. The tenant selection determines what resources will be available when the correlator is created.

- If you return to this window from a subsequent step of the Installation Wizard and select another tenant, you will have to manually edit all the resources that you have added to the service. Only resources from the selected tenant and shared tenant can be added to the service.

- If required, specify the number of processes that the service can run concurrently in the Workers field. By default, the number of worker processes is the same as the number of vCPUs on the server where the service is installed.

- You can optionally add up to 256 Unicode characters describing the service in the Description field.

- On the Advanced settings tab, fill in the following fields:

- If necessary, use the Debug toggle switch to enable logging of service operations.

- You can use the Create dump periodically toggle switch at the request of Technical Support to generate resource (CPU, RAM, etc.) utilization reports in the form of dumps.

- In the Dump settings field, you can specify the settings to be used when creating dumps. The specifics of filling in this field must be provided by Technical Support.

General settings of the correlator are specified. Proceed to the next step of the Installation Wizard.

Step 2. Global variables

If tracking values in event fields, active lists, or dictionaries is not enough to cover some specific security scenarios, you can use global and local variables. You can use them to take various actions on the values received by the correlators by implementing complex logic for threat detection. Variables can be assigned a specific function and then queried from correlation rules as if they were ordinary event fields, with the triggered function result received in response.

To add a global variable in the correlator, click the Add variable button and specify the following parameters:

- In the Variable window, enter the name of the variable.

- In the Value window, enter the variable function.

When entering functions, you can use autocomplete, which shows a list of hints with possible function names, their brief descriptions, and usage examples. You can select a function from the list and insert it together with its list of arguments into the input field.

To display the list of all hints in the field, press Ctrl+Space. Press Enter to select a function from the list. Press Tab to go to the next argument in the list of arguments of the selected function.

The global variable is added. It can be queried from correlation rules by adding the $ character in front of the variable name. There can be multiple variables. Added variables can be edited or deleted by using the corresponding icon.

Proceed to the next step of the Installation Wizard.

Step 3. Correlation

This is an optional but recommended step of the Installation Wizard. On the Correlation tab of the Installation Wizard, select or create correlation rules. These resources define the sequences of events that indicate security-related incidents. When these sequences are detected, the correlator creates a correlation event and an alert.

If you have added global variables to the correlator, all added correlation rules can query them.

Correlation rules that are added to the resource set for the correlator are displayed in the table with the following columns:

- Correlation rules—name of the correlation rule resource.

- Type—type of correlation rule: standard, simple, operational. The table can be filtered based on the values of this column by clicking the column header and selecting the relevant values.

- Actions—list of actions that will be performed by the correlator when the correlation rule is triggered. These actions are indicated in the correlation rule settings. The table can be filtered based on the values of this column by clicking the column header and selecting the relevant values.

Available values:

- Output—correlation events created by this correlation rule are transmitted to other correlator resources: enrichment, response rule, and then to other KUMA services.

- Edit active list—the correlation rule changes the active lists.

- Loop to correlator—the correlation event is sent to the same correlation rule for reprocessing.

- Categorization—the correlation rule changes asset categories.

- Event enrichment—the correlation rule is configured to enrich correlation events.

- Do not create alert—when a correlation event is created as a result of a correlation rule triggering, no alert is created for that. If you do not want to create an alert when a correlation rule is triggered, but you still want to send a correlation event to the storage, select the Output and No alert check boxes. If you select only the No alert check box, a correlation event is not saved in the storage.

- Shared resource—the correlation rule or the resources used in the correlation rule are located in a shared tenant.

You can use the Search field to search for a correlation rule. Added correlation rules can be removed from the resource set by selecting the relevant rules and clicking Delete.

Selecting a correlation rule opens a window with its settings, which can be edited and then saved by clicking Save. If you click Delete in this window, the correlation rule is unlinked from the resource set.

Use the Move up and Move down buttons to change the position of the selected correlation rules in the table. This affects their execution sequence when events are processed. Using the Move operational to top button, you can move correlation rules of the operational type to the beginning of the correlation rules list.

To link the existing correlation rules to the resource set for the correlator:

- Click Link.

The resource selection window opens.

- Select the relevant correlation rules and click OK.

The correlation rules will be linked to the resource set for the correlator and will be displayed in the rules table.

To create a new correlation rule in a resource set for a correlator:

- Click Add.

The correlation rule creation window opens.

- Specify the correlation rule settings and click Save.

The correlation rule will be created and linked to the resource set for the correlator. It is displayed in the correlation rules table and in the list of resources under Resources → Correlation rules.

Proceed to the next step of the Installation Wizard.

Step 4. Enrichment

This is an optional step of the Installation Wizard. On the Enrichment tab of the Installation Wizard, you can select or create enrichment rules and indicate which data from which sources you want to add to correlation events that the correlator creates. There can be more than one enrichment rule. You can add them by clicking the Add button and remove them by clicking the remove button (displayed as an icon).

To add an existing enrichment rule to a resource set:

- Click Add.

This opens the enrichment rule settings block.

- In the Enrichment rule drop-down list, select the relevant resource.

The enrichment rule is added to the resource set for the correlator.

To create a new enrichment rule in a resource set:

- Click Add.

This opens the enrichment rule settings block.

- In the Enrichment rule drop-down list, select Create new.

- In the Source kind drop-down list, select the source of data for enrichment and define its corresponding settings:

- Use the Debug toggle switch to indicate whether or not to enable logging of service operations. Logging is disabled by default.

- In the Filter section, you can specify conditions to identify events that will be processed using the enrichment rule. You can select an existing filter from the drop-down list or create a new filter.

The new enrichment rule is added to the resource set for the correlator.

Proceed to the next step of the Installation Wizard.

Step 5. Response

This is an optional step of the Installation Wizard. On the Response tab of the Installation Wizard, you can select or create response rules and indicate which actions must be performed when the correlation rules are triggered. There can be multiple response rules. You can add them by clicking the Add button and remove them by clicking the remove button (displayed as an icon).

To add an existing response rule to a resource set:

- Click Add.

The response rule settings window opens.

- In the Response rule drop-down list, select the relevant resource.

The response rule is added to the resource set for the correlator.

To create a new response rule in a resource set:

- Click Add.

The response rule settings window opens.

- In the Response rule drop-down list, select Create new.

- In the Type drop-down list, select the type of response rule and define its corresponding settings:

- KSC response—response rules for automatically launching the tasks on Kaspersky Security Center assets. For example, you can configure automatic startup of a virus scan or database update.

Tasks are automatically started when KUMA is integrated with Kaspersky Security Center. Tasks are run only on assets that were imported from Kaspersky Security Center.

- Run script—response rules for automatically running a script. For example, you can create a script containing commands to be executed on the KUMA server when selected events are detected.

The script file is stored on the server where the correlator service using the response resource is installed: /opt/kaspersky/kuma/correlator/<Correlator ID>/scripts.

The kuma user of this server requires the permissions to run the script.

- KEDR response—response rules for automatically creating prevention rules, starting network isolation, or starting the application on Kaspersky Endpoint Detection and Response and Kaspersky Security Center assets.

Automatic response actions are carried out when KUMA is integrated with Kaspersky Endpoint Detection and Response.

- Response via KICS/KATA—response rules for automatically starting tasks on KICS for Networks assets. For example, you can change the asset status in KICS for Networks.

Tasks are automatically started when KUMA is integrated with KICS for Networks.

- Response via Active Directory—response rules for changing the permissions of Active Directory users. For example, you can block a user.

Tasks are started if integration with Active Directory is configured.

- In the Workers field, specify the number of processes that the service can run simultaneously.

By default, the number of workers is the same as the number of virtual processors on the server where the service is installed.

This field is optional.

- In the Filter section, you can specify conditions to identify events that will be processed using the response rule. You can select an existing filter from the drop-down list or create a new filter.

The new response rule is added to the resource set for the correlator.
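For Run script rules, the permission requirement can be checked before the rule is used. The following is a minimal sketch, assuming it is executed under the same account that runs the correlator (the kuma user). The script name `respond.sh` and the temporary directory standing in for the scripts folder are illustrative only and are not part of KUMA.

```python
import os
import stat
import tempfile
from pathlib import Path


def script_problems(script: Path) -> list[str]:
    """List reasons why the current user could not run `script`."""
    problems = []
    if not script.is_file():
        problems.append("not a regular file")
    elif not os.access(script, os.X_OK):
        problems.append("no execute permission for the current user")
    return problems


# A temporary directory stands in for the correlator's scripts folder.
with tempfile.TemporaryDirectory() as scripts_dir:
    script = Path(scripts_dir) / "respond.sh"  # hypothetical script name
    script.write_text("#!/bin/sh\necho handled\n")
    script.chmod(stat.S_IRUSR | stat.S_IWUSR)  # rw-------: cannot be run
    before = script_problems(script)
    script.chmod(stat.S_IRUSR | stat.S_IWUSR | stat.S_IXUSR)  # rwx------
    after = script_problems(script)

print("before chmod +x:", before)
print("after chmod +x:", after)
```

On a real server, the same function could be pointed at a file under /opt/kaspersky/kuma/correlator/<Correlator ID>/scripts.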

Proceed to the next step of the Installation Wizard.

Step 6. Routing

This is an optional step of the Installation Wizard. On the Routing tab of the Installation Wizard, you can select or create destinations with settings indicating the forwarding destination of events created by the correlator. Events from a correlator are usually redirected to storage so that they can be saved and later viewed if necessary. Events can be sent to other locations as needed. There can be more than one destination point.

To add an existing destination to a resource set for a correlator:

- In the Add destination drop-down list, select the type of destination resource you want to add:

- Select Storage if you want to configure forwarding of processed events to the storage.

- Select Correlator if you want to configure forwarding of processed events to a correlator.

- Select Other if you want to send events to other locations.

This type of resource includes correlator and storage services that were created in previous versions of the application.

The Add destination window opens, where you can specify the event forwarding parameters.

- In the Destination drop-down list, select the necessary destination.

The window name changes to Edit destination, and it displays the settings of the selected resource. The resource can be opened for editing in a new browser tab using the dedicated button (displayed as an icon).

- Click Save.

The selected destination is displayed on the Installation Wizard tab. A destination resource can be removed from the resource set by selecting it and clicking Delete in the opened window.

To add a new destination to a resource set for a correlator:

- In the Add destination drop-down list, select the type of destination resource you want to add:

- Select Storage if you want to configure forwarding of processed events to the storage.

- Select Correlator if you want to configure forwarding of processed events to a correlator.

- Select Other if you want to send events to other locations.

This type of resource includes correlator and storage services that were created in previous versions of the application.

The Add destination window opens, where you can specify the event forwarding parameters.

- Specify the settings on the Basic settings tab:

- In the Destination drop-down list, select Create new.

- In the Name field, enter a unique name for the destination resource. The name must contain 1 to 128 Unicode characters.

- Use the Disabled toggle button to specify whether events will be sent to this destination. By default, sending events is enabled.

- Select the Type for the destination resource:

- Select storage if you want to configure forwarding of processed events to the storage.

- Select correlator if you want to configure forwarding of processed events to a correlator.

- Select nats-jetstream, tcp, http, kafka, or file if you want to configure sending events to other locations.

- Specify the URL to which events should be sent in the hostname:<API port> format.

You can specify multiple destination addresses using the URL button for all types except nats-jetstream and file.

- For the nats-jetstream and kafka types, use the Topic field to specify which topic the data should be written to. The topic must contain Unicode characters. The Kafka topic is limited to 255 characters.

- If necessary, specify the settings on the Advanced settings tab. The available settings vary based on the selected destination resource type:

- Compression is a drop-down list where you can enable Snappy compression. By default, compression is disabled.

- Proxy is a drop-down list for proxy server selection.

- The Buffer size field is used to set buffer size (in bytes) for the destination. The default value is 1 MB, and the maximum value is 64 MB.

- Timeout field is used to set the timeout (in seconds) for another service or component response. The default value is 30.

- Disk buffer size limit field is used to specify the size of the disk buffer in bytes. The default size is 10 GB.

- Cluster ID is the ID of the NATS cluster.

- TLS mode is a drop-down list where you can specify the conditions for using TLS encryption:

- Disabled (default)—do not use TLS encryption.

- Enabled—encryption is enabled, but without verification.

- With verification—use encryption with verification that the certificate was signed with the KUMA root certificate. The root certificate and key of KUMA are created automatically during application installation and are stored on the KUMA Core server in /opt/kaspersky/kuma/core/certificates/.

When using TLS, it is impossible to specify an IP address as a URL.

- URL selection policy is a drop-down list in which you can select a method for determining which URL to send events to if several URLs have been specified:

- Any. Events are sent to one of the available URLs as long as this URL receives events. If the connection is broken (for example, the receiving node is disconnected) a different URL will be selected as the events destination.

- Prefer first. Events are sent to the first URL in the list of added addresses. If it becomes unavailable, events are sent to the next available node in sequence. When the first URL becomes available again, events start to be sent to it again.

- Balanced means that packets with events are evenly distributed among the available URLs from the list. Because packets are sent either on a destination buffer overflow or on the flush timer, this URL selection policy does not guarantee an equal distribution of events to destinations.

- Delimiter is used to specify the character delimiting the events. By default, \n is used.

- Path—the file path if the file destination type is selected.

- Buffer flush interval—this field is used to set the time interval (in seconds) at which the data is sent to the destination. The default value is 100.

- Workers—this field is used to set the number of services processing the queue. By default, this value is equal to the number of vCPUs of the KUMA Core server.

- You can set health checks using the Health check path and Health check timeout fields. You can also disable health checks by selecting the Health Check Disabled check box.

- Debug—a toggle switch that lets you specify whether resource logging must be enabled. By default, this toggle switch is in the Disabled position.

- The Disk buffer disabled drop-down list is used to enable or disable the use of a disk buffer. By default, the disk buffer is disabled.

- In the Filter section, you can specify the conditions to define events that will be processed by this resource. You can select an existing filter from the drop-down list or create a new filter.

- Click Save.

The created destination is displayed on the Installation Wizard tab. A destination resource can be removed from the resource set by selecting it and clicking Delete in the opened window.
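The limits stated in this procedure (name length, URL format, Kafka topic length, buffer size, and the TLS restriction on IP addresses) can be summarized as a small validation sketch. This is not a KUMA API: the `Destination` class, the field names, the host names, and the port number are hypothetical, and the sketch assumes that 1 MB means 1024 × 1024 bytes.

```python
import ipaddress
from dataclasses import dataclass, field

DEFAULT_BUFFER_BYTES = 1024 * 1024     # documented default: 1 MB (binary MB assumed)
MAX_BUFFER_BYTES = 64 * 1024 * 1024    # documented maximum: 64 MB
SINGLE_URL_TYPES = {"nats-jetstream", "file"}


@dataclass
class Destination:  # hypothetical model of the form fields
    name: str
    kind: str
    urls: list[str] = field(default_factory=list)
    topic: str = ""
    tls: bool = False
    buffer_bytes: int = DEFAULT_BUFFER_BYTES


def _is_ip(host: str) -> bool:
    try:
        ipaddress.ip_address(host.strip("[]"))
        return True
    except ValueError:
        return False


def validate(dest: Destination) -> list[str]:
    """Return a list of violated constraints (empty if none)."""
    errors = []
    if not 1 <= len(dest.name) <= 128:
        errors.append("name must contain 1 to 128 characters")
    if dest.kind in SINGLE_URL_TYPES and len(dest.urls) > 1:
        errors.append(f"{dest.kind} accepts a single URL")
    for url in dest.urls:
        host, sep, port = url.rpartition(":")
        if not sep or not host or not port.isdigit():
            errors.append(f"{url!r} is not in hostname:port format")
        elif dest.tls and _is_ip(host):
            errors.append(f"{url!r}: an IP address cannot be used with TLS")
    if dest.kind == "kafka" and len(dest.topic) > 255:
        errors.append("Kafka topic is limited to 255 characters")
    if not 0 < dest.buffer_bytes <= MAX_BUFFER_BYTES:
        errors.append("buffer size must not exceed 64 MB")
    return errors


ok = Destination("to-storage", "storage",
                 ["storage-1.example.com:7230", "storage-2.example.com:7230"],
                 tls=True)
bad = Destination("", "kafka", ["10.0.0.5:9092"], topic="t" * 256,
                  tls=True, buffer_bytes=128 * 1024 * 1024)
print(validate(ok))
for problem in validate(bad):
    print(problem)
```

The second destination violates four constraints at once: an empty name, an IP address combined with TLS, a topic that is too long, and a buffer above the maximum.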

Proceed to the next step of the Installation Wizard.
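The three URL selection policies described for destinations on this tab (Any, Prefer first, Balanced) differ in how they react when a URL becomes unavailable and later recovers. The sketch below models that difference under simplifying assumptions: Any is modeled as staying on the current URL while it is reachable, and Balanced is modeled as a round-robin over the reachable URLs. The `UrlSelector` class, the policy labels, and the addresses are hypothetical and do not reflect KUMA internals.

```python
from itertools import count


class UrlSelector:
    """Illustrative model of the three URL selection policies."""

    def __init__(self, urls, policy):
        self.urls = list(urls)
        self.policy = policy       # "any", "prefer-first" or "balanced"
        self._current = None       # sticky choice used by "any"
        self._counter = count()    # batch counter used by "balanced"

    def pick(self, available):
        """Return the URL for the next batch, given the reachable URLs."""
        candidates = [u for u in self.urls if u in available]
        if not candidates:
            return None
        if self.policy == "prefer-first":
            # Always the earliest reachable URL in the configured list.
            return candidates[0]
        if self.policy == "balanced":
            # Round-robin over reachable URLs (assumed distribution method).
            return candidates[next(self._counter) % len(candidates)]
        # "any": keep the current URL for as long as it is reachable.
        if self._current not in candidates:
            self._current = candidates[0]
        return self._current


urls = ["storage-1:7230", "storage-2:7230", "storage-3:7230"]
everything = set(urls)
without_first = everything - {urls[0]}

for policy in ("prefer-first", "any"):
    selector = UrlSelector(urls, policy)
    picks = [selector.pick(everything),
             selector.pick(without_first),   # first URL goes down
             selector.pick(everything)]      # first URL comes back
    print(policy, picks)

balanced = UrlSelector(urls, "balanced")
print("balanced", [balanced.pick(everything) for _ in range(4)])
```

In this model, Prefer first returns to the first URL as soon as it is reachable again, whereas Any stays on the URL it switched to.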

Step 7. Setup validation

This is the required, final step of the Installation Wizard. At this step, KUMA creates a service resource set, and the services are created automatically based on this set:

- The resource set for the correlator is displayed under Resources → Correlators. It can be used to create new correlator services. When this resource set changes, all services that operate based on this resource set will start using the new parameters after the services restart. To do so, you can use the Save and restart services and Save and update service configurations buttons.