Installing and removing KUMA

To install KUMA, you need the distribution kit:

- kuma-ansible-installer-<build number>.tar.gz contains all necessary files for installing KUMA without the support for high availability configurations.

- kuma-ansible-installer-ha-<build number>.tar.gz contains all necessary files for installing KUMA in a high availability configuration.

To complete the installation, you need the install.sh installer file and an inventory file that describes your infrastructure. You can create an inventory file based on a template. Each distribution contains an install.sh installer file and the following inventory file templates:

- single.inventory.yml.template

- distributed.inventory.yml.template

- expand.inventory.yml.template

- k0s.inventory.yml.template

KUMA keeps its files in the /opt directory, so we recommend making /opt a separate partition and allocating 16 GB for the operating system and the remainder of the disk space for the /opt partition.

KUMA is installed in the same way on all hosts using the installer and your prepared inventory file in which you describe your configuration. We recommend taking time to think through the setup before you proceed.

The following installation options are available:

- Installation on a single server

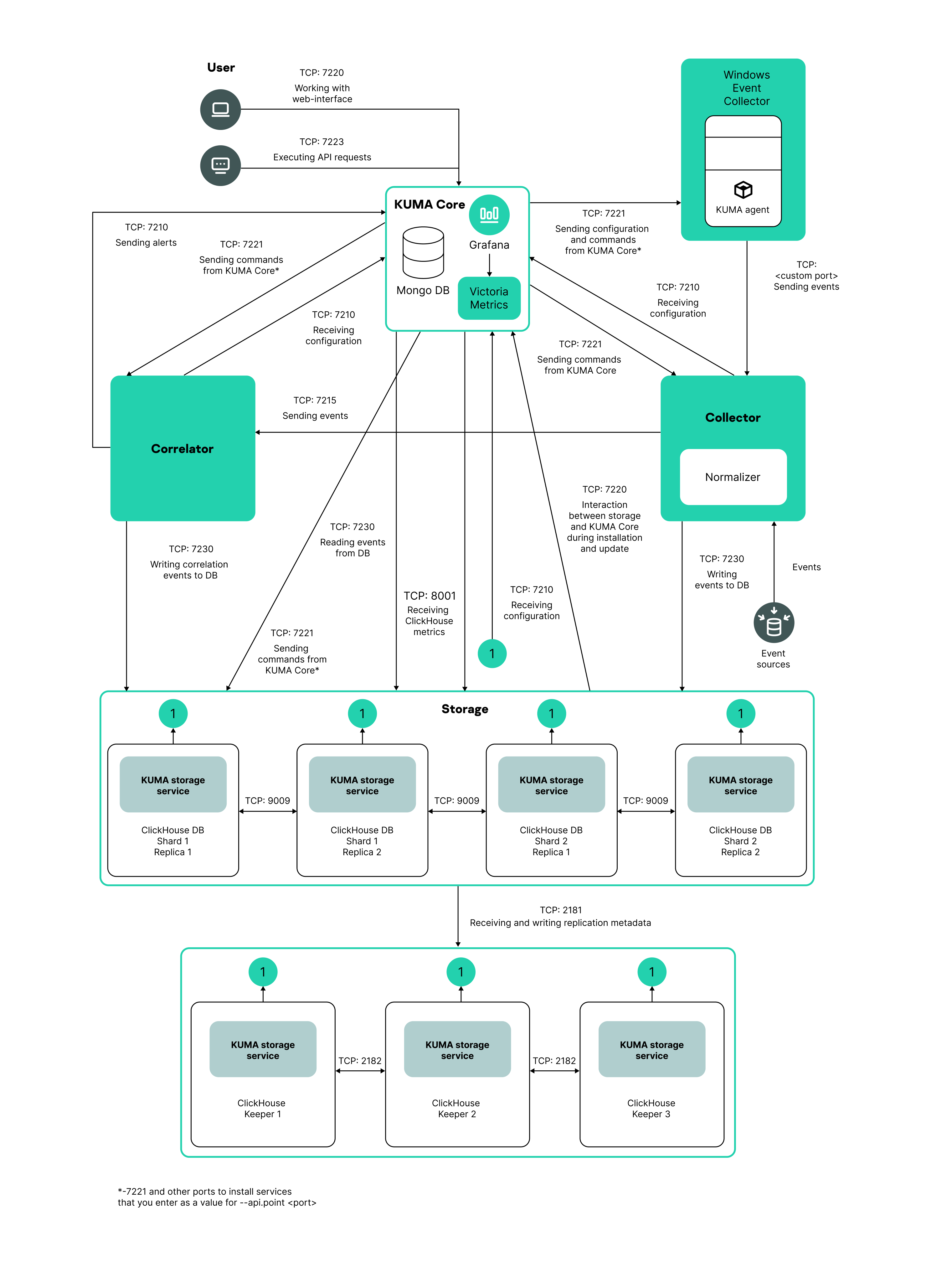

Single-server installation diagram

Example inventory file for installation on a single server

all:
  vars:
    deploy_to_k8s: false
    need_transfer: false
    generate_etc_hosts: false
    deploy_example_services: true
    no_firewall_actions: false
kuma:
  vars:
    ansible_connection: ssh
    ansible_user: root
  children:
    kuma_core:
      hosts:
        kuma1.example.com:
          mongo_log_archives_number: 14
          mongo_log_frequency_rotation: daily
          mongo_log_file_size: 1G
    kuma_collector:
      hosts:
        kuma1.example.com:
    kuma_correlator:
      hosts:
        kuma1.example.com:
    kuma_storage:
      hosts:
        kuma1.example.com:
          shard: 1
          replica: 1
          keeper: 1

You can install all KUMA components on the same server: specify the same server in the single.inventory.yml inventory file for all components. An "all-in-one" installation can handle a small stream of events, up to 10,000 EPS. If you plan to use many dashboard layouts and process a lot of search queries, a single server might not be sufficient. In that case, we recommend the distributed installation.

- Distributed installation

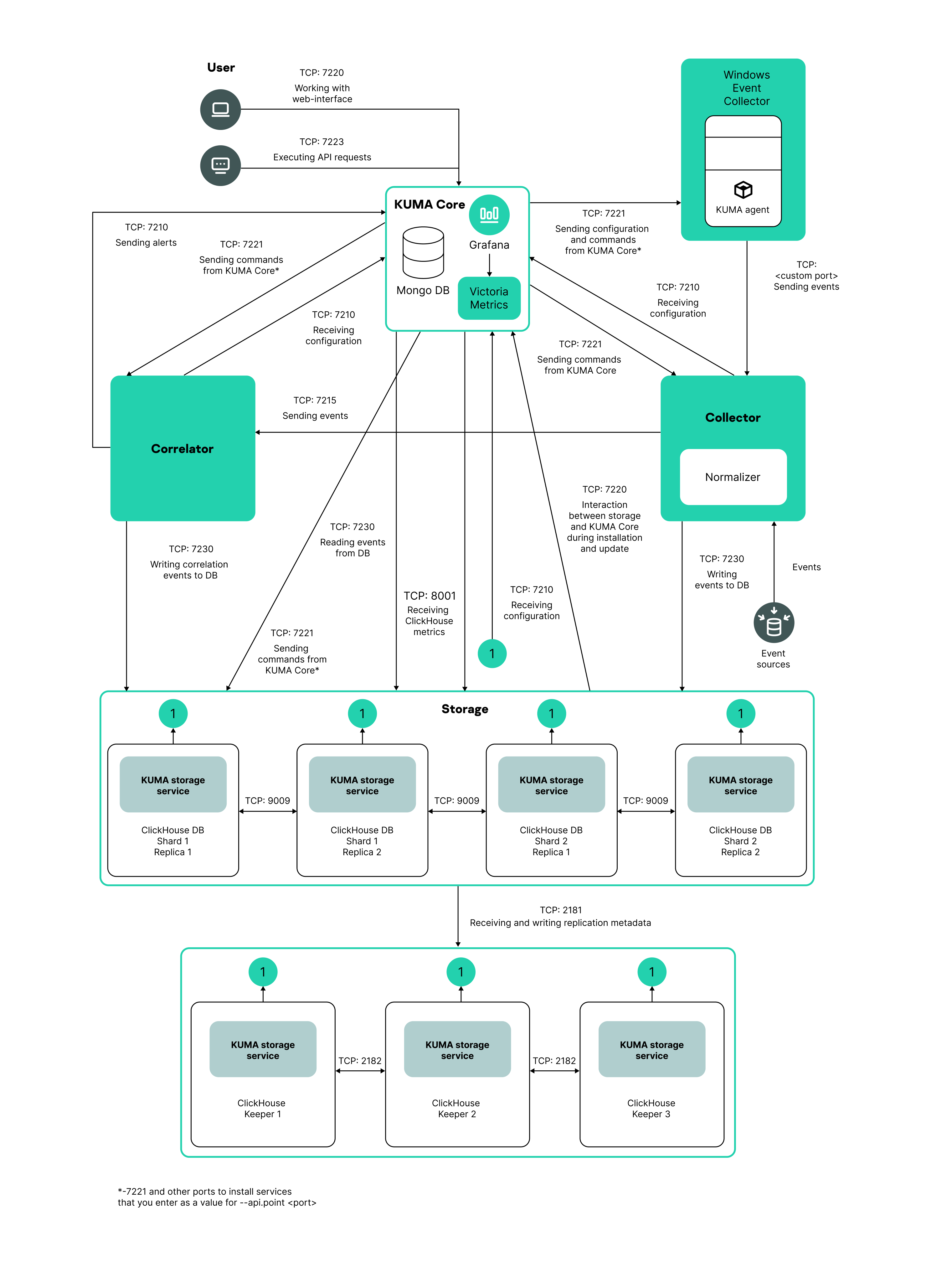

Distributed installation diagram

Example inventory file for distributed installation

all:
  vars:
    deploy_to_k8s: false
    need_transfer: false
    generate_etc_hosts: false
    deploy_example_services: false
    no_firewall_actions: false
kuma:
  vars:
    ansible_connection: ssh
    ansible_user: root
  children:
    kuma_core:
      hosts:
        kuma-core-1.example.com:
          ip: 0.0.0.0
          mongo_log_archives_number: 14
          mongo_log_frequency_rotation: daily
          mongo_log_file_size: 1G
    kuma_collector:
      hosts:
        kuma-collector-1.example.com:
          ip: 0.0.0.0
    kuma_correlator:
      hosts:
        kuma-correlator-1.example.com:
          ip: 0.0.0.0
    kuma_storage:
      hosts:
        kuma-storage-cluster1-server1.example.com:
          ip: 0.0.0.0
          shard: 1
          replica: 1
          keeper: 0
        kuma-storage-cluster1-server2.example.com:
          ip: 0.0.0.0
          shard: 1
          replica: 2
          keeper: 0
        kuma-storage-cluster1-server3.example.com:
          ip: 0.0.0.0
          shard: 2
          replica: 1
          keeper: 0
        kuma-storage-cluster1-server4.example.com:
          ip: 0.0.0.0
          shard: 2
          replica: 2
          keeper: 0
        kuma-storage-cluster1-server5.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 1
        kuma-storage-cluster1-server6.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 2
        kuma-storage-cluster1-server7.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 3

You can install KUMA services on different servers. Describe the configuration for a distributed installation in the distributed.inventory.yml inventory file.

- Distributed installation in a high availability configuration

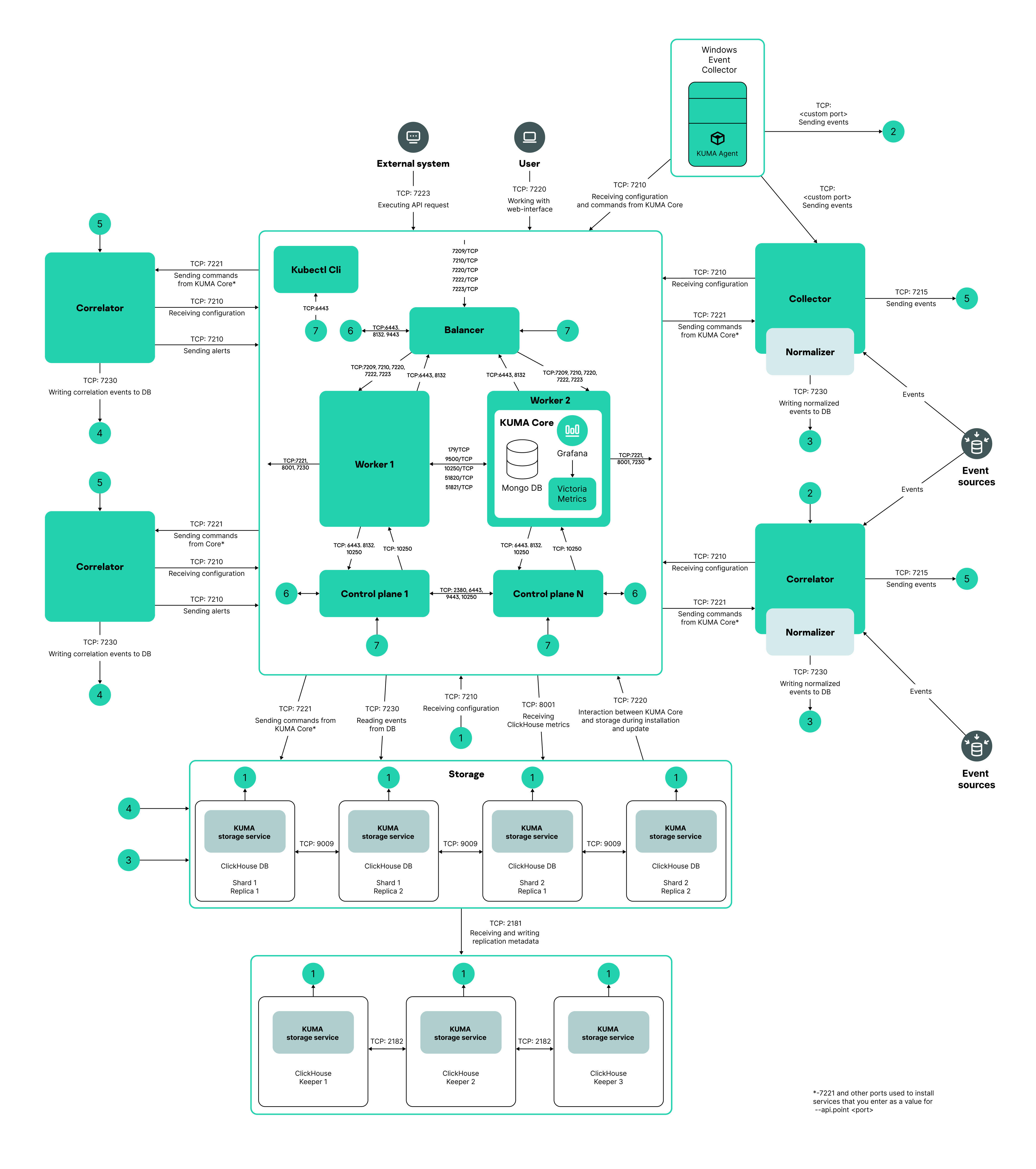

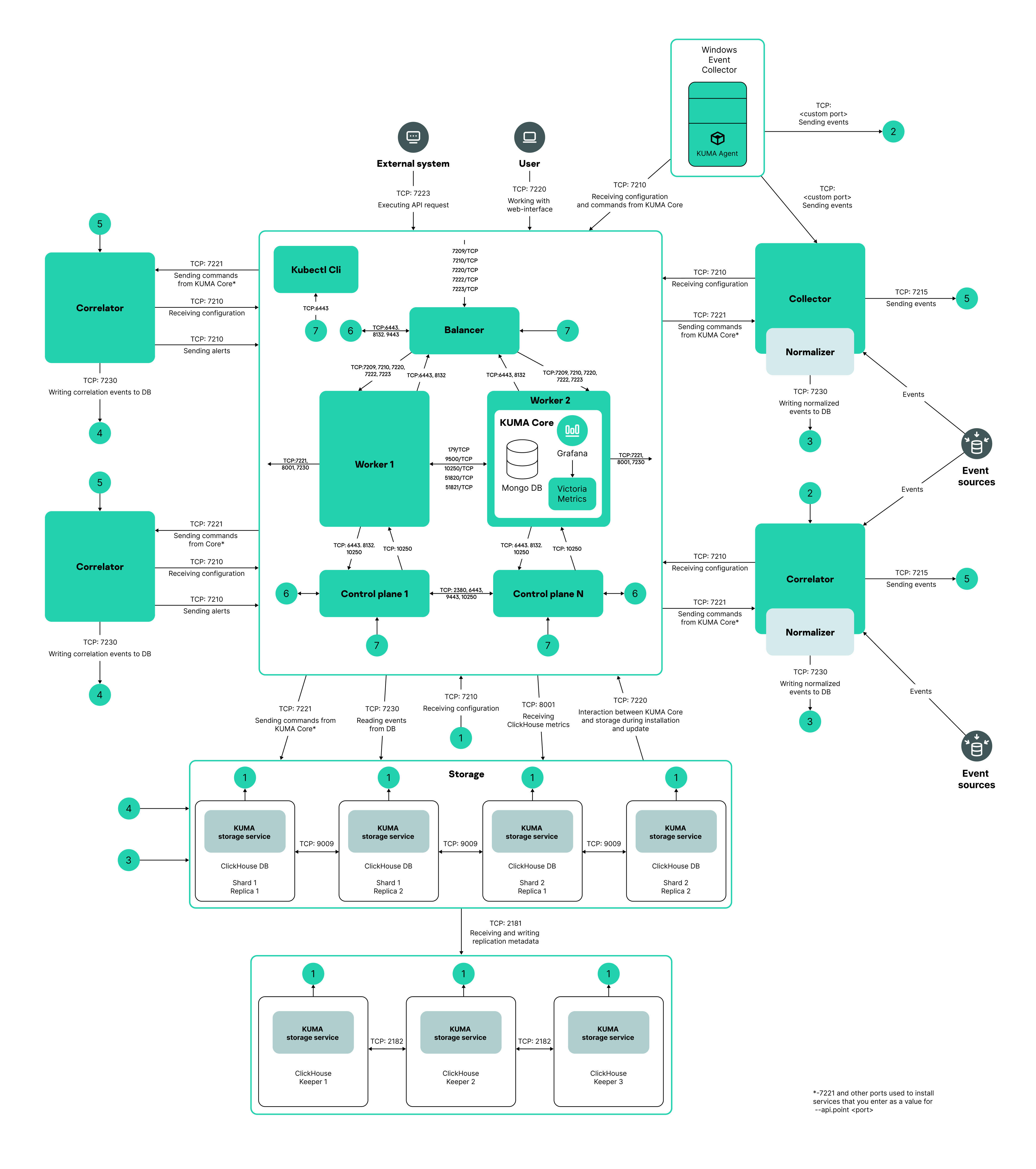

Diagram of distributed installation in a high availability configuration

Example inventory file for distributed installation in a high availability configuration

all:
  vars:
    deploy_to_k8s: true
    need_transfer: true
    generate_etc_hosts: false
    airgap: true
    deploy_example_services: false
    no_firewall_actions: false
kuma:
  vars:
    ansible_connection: ssh
    ansible_user: root
  children:
    kuma_core:
      hosts:
        kuma-core-1.example.com:
          mongo_log_archives_number: 14
          mongo_log_frequency_rotation: daily
          mongo_log_file_size: 1G
    kuma_collector:
      hosts:
        kuma-collector-1.example.com:
          ip: 0.0.0.0
        kuma-collector-2.example.com:
          ip: 0.0.0.0
    kuma_correlator:
      hosts:
        kuma-correlator-1.example.com:
          ip: 0.0.0.0
        kuma-correlator-2.example.com:
          ip: 0.0.0.0
    kuma_storage:
      hosts:
        kuma-storage-cluster1-server1.example.com:
          ip: 0.0.0.0
          shard: 1
          replica: 1
          keeper: 0
        kuma-storage-cluster1-server2.example.com:
          ip: 0.0.0.0
          shard: 1
          replica: 2
          keeper: 0
        kuma-storage-cluster1-server3.example.com:
          ip: 0.0.0.0
          shard: 2
          replica: 1
          keeper: 0
        kuma-storage-cluster1-server4.example.com:
          ip: 0.0.0.0
          shard: 2
          replica: 2
          keeper: 0
        kuma-storage-cluster1-server5.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 1
        kuma-storage-cluster1-server6.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 2
        kuma-storage-cluster1-server7.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 3
kuma_k0s:
  vars:
    ansible_connection: ssh
    ansible_user: root
  children:
    kuma_lb:
      hosts:
        kuma_lb.example.com:
          kuma_managed_lb: true
    kuma_control_plane_master:
      hosts:
        kuma_cpm.example.com:
          ansible_host: 10.0.1.10
    kuma_control_plane_master_worker:
    kuma_control_plane:
      hosts:
        kuma_cp1.example.com:
          ansible_host: 10.0.1.11
        kuma_cp2.example.com:
          ansible_host: 10.0.1.12
    kuma_control_plane_worker:
    kuma_worker:
      hosts:
        kuma-core-1.example.com:
          ansible_host: 10.0.1.13
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"
        kuma_worker2.example.com:
          ansible_host: 10.0.1.14
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"

You can install the KUMA Core on a Kubernetes cluster for high availability. Describe the configuration in the k0s.inventory.yml inventory file.

[Topic 217904]

Program installation requirements

General application installation requirements

You can install the application on the following operating systems:

- Oracle Linux

- Astra Linux

- Ubuntu

- RED OS

Supported operating system versions are listed in the Hardware and software requirements section.

Supported configurations are Server and Server with GUI support. In the Server with GUI support configuration, you do not need to install additional packages for reporting.

RED OS 8 is supported without high availability (HA). When using RED OS 8 in the Server with GUI support configuration, you need to install the iscsi-initiator-utils package, and then run the following commands:

systemctl enable iscsid

systemctl start iscsid

Before deploying the application, make sure the following conditions are met:

- Servers on which you want to install the components satisfy the hardware and software requirements.

- Ports to be used by the installed instance of KUMA are available.

- KUMA components are addressed using the fully qualified domain name (FQDN) of the host in the hostname.example.com format. Before you install the application, make sure that the correct host FQDN is returned in the Static hostname field. To do so, run the following command:

hostnamectl status

- The name of the server on which you are running the installer is not localhost or localhost.<domain>.

- The name of the server on which you are installing KUMA Core does not start with a numeral.

- Time synchronization over Network Time Protocol (NTP) is configured on all servers with KUMA services.
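The host naming conditions above can be checked before running the installer. The following is a minimal sketch, not part of the KUMA distribution kit: the function name check_kuma_hostname and its optional second argument core are hypothetical, and the check only looks at the shape of the name, not at whether DNS resolves it.

```shell
#!/bin/sh
# Hypothetical helper (not shipped with KUMA): checks a host name against
# the naming conditions listed above.
# Usage: check_kuma_hostname <name> [core]
check_kuma_hostname() {
    local name="$1" role="${2:-}"
    # The installer host must not be named localhost or localhost.<domain>.
    case "$name" in
        localhost|localhost.*)
            echo "localhost is not allowed: $name"
            return 1 ;;
    esac
    # An FQDN in the hostname.example.com format contains at least one dot.
    case "$name" in
        *.*) ;;
        *)
            echo "not an FQDN: $name"
            return 1 ;;
    esac
    # The KUMA Core host name must not start with a numeral.
    if [ "$role" = "core" ]; then
        case "$name" in
            [0-9]*)
                echo "Core host name starts with a numeral: $name"
                return 1 ;;
        esac
    fi
    echo "ok: $name"
}
```

On a target host, the static host name could be passed in with, for example, check_kuma_hostname "$(hostnamectl --static)" core.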

Installation requirements for Oracle Linux, Astra Linux, Ubuntu 22.04 LTS, and RED OS 7.3.4 and 8

| | Oracle Linux | Astra Linux | Ubuntu 22.04 LTS | RED OS 7.3.4 and 8 |
|---|---|---|---|---|
| Python version | 3.6 to 3.11. Versions 3.12 and later are not supported. | 3.6 to 3.11. Versions 3.12 and later are not supported. | 3.6 to 3.11. Versions 3.12 and later are not supported. | 3.6 to 3.11. Versions 3.12 and later are not supported. |
| SELinux module | Disabled | Disabled | Disabled | Disabled |
| Package manager | pip3 | pip3 | pip3 | pip3 |
| Basic packages | See also: Upgrading from Oracle Linux 8.x to Oracle Linux 9.x.<br>To install the packages, run the following commands:<br>pip3 install netaddr<br>yum install firewalld<br>yum install compat-openssl11 | To install the packages, run the following command:<br>apt install python3-apt curl libcurl4 | python3-apt, curl, libcurl4, openssl 1.1.1, acl<br>To install the packages, run the following command:<br>apt install python3-apt curl libcurl4 acl<br>You can download the openssl1.1.1 package from the official website of Ubuntu and install it using the following command:<br>dpkg -i libssl1.1_1.1.1f-1ubuntu2_amd64.deb | To install the packages, run the following commands:<br>pip3 install netaddr<br>dnf install firewalld<br>dnf install compat-openssl11 |
| Dependent packages | No value. | netaddr, python3-cffi-backend<br>To install the packages, run the following command:<br>apt install python3-netaddr python3-cffi-backend<br>If you plan to query Oracle DB databases from KUMA, you need to install the Astra Linux libaio1 package. | netaddr, python3-cffi-backend<br>To install the packages, run the following command:<br>apt install python3-netaddr python3-cffi-backend | No value. |
| Packages that must be installed on a device with the KUMA Core for correct generation and downloading of reports | nss, gtk2, atk, libnss3.so, libatk-1.0.so.0, libxkbcommon, libdrm, at-spi2-atk, mesa-libgbm, alsa-lib, cups-libs, libXcomposite, libXdamage, libXrandr<br>To install the packages, run the following command:<br>yum install nss gtk2 atk libnss3.so libatk-1.0.so.0 libxkbcommon libdrm at-spi2-atk mesa-libgbm alsa-lib cups-libs libXcomposite libXdamage libXrandr | libgtk2.0.0, libnss3, libatk-adaptor, libdrm-common, libgbm1, libxkbcommon0, libasound2<br>To install the packages, run the following command:<br>apt install libgtk2.0.0 libnss3 libatk-adaptor libdrm-common libgbm1 libxkbcommon0 libasound2 | libatk1.0-0, libgtk2.0-0, libatk-bridge2.0-0, libcups2, libxcomposite-dev, libxdamage1, libxrandr2, libgbm-dev, libxkbcommon-x11-0, libpangocairo-1.0-0, libasound2<br>To install the packages, run the following command:<br>apt install libatk1.0-0 libgtk2.0-0 libatk-bridge2.0-0 libcups2 libxcomposite-dev libxdamage1 libxrandr2 libgbm-dev libxkbcommon-x11-0 libpangocairo-1.0-0 libasound2 | nss, gtk2, atk, libnss3.so, libatk-1.0.so.0, libxkbcommon, libdrm, at-spi2-atk, mesa-libgbm, alsa-lib, cups-libs, libXcomposite, libXdamage, libXrandr<br>To install the packages, run the following command:<br>dnf install nss gtk2 atk libnss3.so libatk-1.0.so.0 libxkbcommon libdrm at-spi2-atk mesa-libgbm alsa-lib cups-libs libXcomposite libXdamage libXrandr |
| User permissions required to install the application | No value. | You need to assign the required permissions to the user that will be installing the application:<br>sudo pdpl-user -i 63 <user that will be installing the application> | No value. | No value. |
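The Python version requirement from the table above (3.6 to 3.11, with 3.12 and later not supported) can be verified with a short script before installation. This is only a sketch under that stated range: the function name python_version_supported is hypothetical and it expects a version string of the form major.minor or major.minor.patch.

```shell
#!/bin/sh
# Hypothetical helper: succeeds only for Python versions 3.6 through 3.11.
python_version_supported() {
    local major minor rest
    # Split "3.10.12" into major=3 and minor=10; the patch level is ignored.
    IFS=. read -r major minor rest <<EOF
$1
EOF
    [ "$major" -eq 3 ] && [ "$minor" -ge 6 ] && [ "$minor" -le 11 ]
}
```

For example, the version of the interpreter on a host could be passed in as python_version_supported "$(python3 -c 'import sys; print("%d.%d" % sys.version_info[:2])')".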

[Topic 231034]

Upgrading from Oracle Linux 8.x to Oracle Linux 9.x

To upgrade from Oracle Linux 8.x to Oracle Linux 9.x:

- Run the following commands to disable KUMA services on the hosts where the services are installed:

sudo systemctl disable kuma-collector-<service ID>.service

sudo systemctl disable kuma-correlator-<service ID>.service

sudo systemctl disable kuma-storage-<service ID>.service

sudo systemctl disable kuma-grafana.service

sudo systemctl disable kuma-mongodb.service

sudo systemctl disable kuma-victoria-metrics.service

sudo systemctl disable kuma-vmalert.service

sudo systemctl disable kuma-core.service

- Upgrade the OS on every host.

- After the upgrade is complete, run the following command to install the compat-openssl11 package on the host where you want to deploy the KUMA Core outside of the cluster:

yum install compat-openssl11

- Run the following commands to enable the services on the hosts where the services are installed:

sudo systemctl enable kuma-core.service

sudo systemctl enable kuma-storage-<service ID>.service

sudo systemctl enable kuma-collector-<service ID>.service

sudo systemctl enable kuma-correlator-<service ID>.service

sudo systemctl enable kuma-grafana.service

sudo systemctl enable kuma-mongodb.service

sudo systemctl enable kuma-victoria-metrics.service

sudo systemctl enable kuma-vmalert.service

- Restart the hosts.

The upgrade is complete.

[Topic 267523]

Ports used by KUMA during installation

For the application to run correctly, you need to ensure that the KUMA components are able to interact with other components and applications over the network using the protocols and ports specified during the installation of the KUMA components.

Before installing the Core on a device, make sure that the following ports are available:

- 9090: used by Victoria Metrics.

- 8880: used by VMalert.

- 27017: used by MongoDB.
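One way to confirm that these ports are free is to look for existing listeners in the output of ss -ltn. The sketch below assumes the usual column layout of that command, in which the fourth column is the local address and port. The function name busy_ports is hypothetical. It prints every requested port that already has a listener and prints nothing if all of them are available.

```shell
#!/bin/sh
# Hypothetical helper: reads `ss -ltn` output on stdin and prints each of the
# ports given as arguments that already has a listening socket.
busy_ports() {
    local listing port
    listing="$(cat)"
    for port in "$@"; do
        # The local address column ends with ":<port>",
        # for example 0.0.0.0:9090 or [::]:9090.
        if printf '%s\n' "$listing" |
            awk -v p=":$port" '$4 ~ (p "$") { found = 1 } END { exit !found }'
        then
            echo "$port"
        fi
    done
}
```

On the future Core host, it could be run as ss -ltn | busy_ports 9090 8880 27017.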

The table below lists the default ports. The installer automatically opens the ports during KUMA installation.

Network ports used for the interaction of KUMA components

| Protocol | Port | Direction | Purpose of the connection |
|---|---|---|---|
| HTTPS | 7222 | From the KUMA client to the KUMA Core server. | Reverse proxy to the CyberTrace system. |
| HTTPS | 8123 | Local requests from the storage service to the local node of the ClickHouse cluster. | Writing and getting normalized events in the ClickHouse cluster. |
| HTTPS | 8429 | From the KUMA agent to the KUMA Core server. | Logging KUMA agent performance metrics. |
| HTTPS | 9009 | Between replicas of the ClickHouse cluster. | Internal data communication between replicas of the ClickHouse cluster. |
| TCP | 2181 | From ClickHouse cluster nodes to the ClickHouse keeper replication coordination service. | Getting and writing replication metadata by replicas of ClickHouse servers. |
| TCP | 2182 | From one ClickHouse keeper replication coordination service to another. | Internal communication between replication coordination services to reach a quorum. |
| TCP | 7210 | From all KUMA components to the KUMA Core server. | Getting the KUMA configuration from the KUMA Core server. |
| TCP | 7220 | - From the KUMA client to the server with the KUMA Core component.<br>- From storage hosts to the KUMA Core server during installation or upgrade. | - User access to the KUMA web interface.<br>- Interaction between the storage hosts and the KUMA Core during installation or upgrade. You can close this port after completing the installation or upgrade. |
| TCP | 7221 and other ports used for service installation as the value of --api.port <port> | From KUMA Core to KUMA services. | Administration of services from the KUMA web interface. |
| TCP | 7223 | To the KUMA Core server. | Default port for API requests. |
| TCP | 8001 | From Victoria Metrics to the ClickHouse server. | Getting ClickHouse server operation metrics. |
| TCP | 9000 | - Outgoing and incoming connections between servers of the ClickHouse cluster.<br>- From the local client.sh client to the local cluster node. | Port of the ClickHouse native protocol (also called ClickHouse TCP).<br>Used by ClickHouse applications and processes, such as clickhouse-server, clickhouse-client, and native ClickHouse tools. Used for inter-server communication for distributed queries. Also used for writing and getting data in the ClickHouse cluster. |

Ports used by predefined OOTB resources

The installer automatically opens these ports during KUMA installation.

Ports used by predefined OOTB resources:

- 7230/tcp

- 7231/tcp

- 7232/tcp

- 7233/tcp

- 7234/tcp

- 7235/tcp

- 5140/tcp

- 5140/udp

- 5141/tcp

- 5144/udp

KUMA Core traffic in a high availability configuration

The table below lists the initiator (source) of the connection and the destination. The port number of the initiator can be dynamic. Return traffic within the established connection must not be blocked.

KUMA Core traffic in a high availability configuration

| Source | Destination | Destination port | Type |
|---|---|---|---|
| External KUMA services | Load balancer | 7209 | TCP |
| External KUMA services | Load balancer | 7210 | TCP |
| External KUMA services | Load balancer | 7220 | TCP |
| External KUMA services | Load balancer | 7222 | TCP |
| External KUMA services | Load balancer | 7223 | TCP |
| KUMA agents | Load balancer | 8429 | TCP |
| Worker node | Load balancer | 6443 | TCP |
| Worker node | Load balancer | 8132 | TCP |
| Control node | Load balancer | 6443 | TCP |
| Control node | Load balancer | 8132 | TCP |
| Control node | Load balancer | 9443 | TCP |
| Worker node | External KUMA services | Depending on the settings specified when creating the service. | TCP |
| Load balancer | Worker node | 7209 | TCP |
| Load balancer | Worker node | 7210 | TCP |
| Load balancer | Worker node | 7220 | TCP |
| Load balancer | Worker node | 7222 | TCP |
| Load balancer | Worker node | 7223 | TCP |
| Load balancer | Worker node | 8429 | TCP |
| External KUMA services | Worker node | 7209 | TCP |
| External KUMA services | Worker node | 7210 | TCP |
| External KUMA services | Worker node | 7220 | TCP |
| External KUMA services | Worker node | 7222 | TCP |
| External KUMA services | Worker node | 7223 | TCP |
| KUMA agents | Worker node | 8429 | TCP |
| Worker node | Worker node | 179 | TCP |
| Worker node | Worker node | 9500 | TCP |
| Worker node | Worker node | 10250 | TCP |
| Worker node | Worker node | 51820 | UDP |
| Worker node | Worker node | 51821 | UDP |
| Control node | Worker node | 10250 | TCP |
| Load balancer | Control node | 6443 | TCP |
| Load balancer | Control node | 8132 | TCP |
| Load balancer | Control node | 9443 | TCP |
| Worker node | Control node | 6443 | TCP |
| Worker node | Control node | 8132 | TCP |
| Worker node | Control node | 10250 | TCP |
| Control node | Control node | 2380 | TCP |
| Control node | Control node | 6443 | TCP |
| Control node | Control node | 9443 | TCP |
| Control node | Control node | 10250 | TCP |
| Cluster management console (CLI) | Load balancer | 6443 | TCP |
| Cluster management console (CLI) | Control node | 6443 | TCP |

[Topic 217770]

Downloading CA certificates

In the KUMA web interface, you can download the following CA certificates:

- REST API CA certificate

This certificate is used to authenticate the API server serving the KUMA public API. You can also use this certificate when importing data from MaxPatrol reports.

You can also change this certificate if you want to use your company's certificate and key instead of the self-signed certificate of the web console.

- Microservice CA certificate

This certificate is used for authentication when connecting log sources to passive collectors using TLS, but without specifying your own certificate.

To download a CA certificate:

- Open the KUMA web interface.

- In the lower left corner of the window, click the name of the user account, and in the menu, click the REST API CA certificate or Microservice CA certificate button, depending on the certificate that you want to download.

The certificate is saved to the download directory configured in your browser.

[Topic 294030]

Reissuing internal CA certificates

The storage location of the self-signed CA certificate and the certificate reissue mechanism have been changed.

The certificate is stored in the database. The previous method of reissuing internal certificates by deleting certificates from the file system of the Core and restarting the Core is no longer allowed. The old method will cause the Core to fail to start. Do not connect new services to the Core until the certificate is successfully reissued.

After reissuing the internal CA certificates in the Settings → General → Reissue internal CA certificates section of the KUMA web interface, you must stop the services, delete the old certificates from the service directories, and manually restart all services.

The Reissue internal CA certificates option is available only to a user with the General Administrator role.

The process of reissuing certificates for an individual service remains the same: in the KUMA web interface, in the Resources → Active services section, select the service; in the context menu, select Reset certificate, and delete the old certificate from the service installation directory. KUMA automatically generates a new certificate. You do not need to restart running services; the new certificate is applied automatically. A stopped service must be restarted to have the certificate applied.

To reissue internal CA certificates:

- In the KUMA web interface, go to the Settings → General section, click Reissue internal CA certificates, and read the displayed warning. If you decide to proceed with reissuing certificates, click Yes.

As a result, the CA certificates for KUMA services and the CA certificate for ClickHouse are reissued. Next, you must stop the services, delete old certificates from the service installation directories, restart the Core, and restart the stopped services to apply the reissued certificates.

- Connect to the hosts where the collector, correlator, and event router services are deployed.

- Stop all services with the following command:

sudo systemctl stop kuma-<collector/correlator/eventRouter>-<service ID>.service

- Delete the internal.cert and internal.key certificate files from the "/opt/kaspersky/kuma/<service type>/<service ID>/certificates" directories with the following commands:

sudo rm -f /opt/kaspersky/kuma/<service type>/<service ID>/certificates/internal.cert

sudo rm -f /opt/kaspersky/kuma/<service type>/<service ID>/certificates/internal.key

- Connect to the hosts where storage services are deployed.

- Stop all storage services with the following command:

sudo systemctl stop kuma-storage-<service ID>.service

- Delete the internal.cert and internal.key certificate files from the "/opt/kaspersky/kuma/storage/<service ID>/certificates" directories with the following commands:

sudo rm -f /opt/kaspersky/kuma/storage/<service ID>/certificates/internal.cert

sudo rm -f /opt/kaspersky/kuma/storage/<service ID>/certificates/internal.key

- Delete all ClickHouse certificates from the "/opt/kaspersky/kuma/clickhouse/certificates" directory.

sudo rm -f /opt/kaspersky/kuma/clickhouse/certificates/internal.cert

sudo rm -f /opt/kaspersky/kuma/clickhouse/certificates/internal.key

- Connect to the hosts where agent services are deployed.

- Stop the services of Windows agents and Linux agents.

- Delete the internal.cert and internal.key certificate files from the working directories of the agents.

- Restart the Core to apply the new CA certificates.

- For an "all-in-one" or distributed installation of KUMA, run the following command:

sudo systemctl restart kuma-core-00000000-0000-0000-0000-000000000000.service

- For KUMA in a high availability configuration, to restart the Core, run the following command on the primary controller:

sudo k0s kubectl rollout restart deployment/core-deployment -n kuma

You do not need to restart victoria-metrics.

The Core must be restarted using the command because restarting the Core in the KUMA interface affects only the Core container and not the entire pod.

- Restart all services that were stopped as part of the procedure.

sudo systemctl start kuma-<collector/correlator/eventRouter/storage>-<service ID>.service

- Restart victoria-metrics.

sudo systemctl start kuma-victoria-metrics.service

Internal CA certificates are reissued and applied.
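When many services are deployed on one host, the two certificate paths for each service can be generated rather than typed by hand. The following sketch only builds the paths from the directory layout given in the procedure above. The function name kuma_internal_cert_files is hypothetical, and the service type and service ID are values that you supply.

```shell
#!/bin/sh
# Hypothetical helper: prints the internal certificate and key paths of one
# service, following the /opt/kaspersky/kuma/<service type>/<service ID>
# layout described in the procedure above. It does not delete anything.
kuma_internal_cert_files() {
    local service_type="$1" service_id="$2"
    local dir="/opt/kaspersky/kuma/${service_type}/${service_id}/certificates"
    printf '%s\n' "${dir}/internal.cert" "${dir}/internal.key"
}
```

Review the printed paths first. After the service is stopped, they could be passed on to the deletion command, for example with kuma_internal_cert_files collector <service ID> | xargs sudo rm -f.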

[Topic 275543]

Modifying the self-signed web console certificate

You can use your company's certificate and key instead of the self-signed certificate of the web console. For example, if you want to replace the self-signed CA certificate of the Core with a certificate issued by your corporate CA, you must provide an external.cert and an unencrypted external.key in PEM format.

The following example shows how to replace a self-signed CA certificate of the Core with your corporate certificate in PFX format. You can use the instructions as an example and adapt the steps according to your needs.

To replace the certificate of the KUMA web console with an external certificate:

- If you are using a certificate and key in a PFX container, use OpenSSL to convert the PFX file to a certificate and an unencrypted key in PEM format:

openssl pkcs12 -in kumaWebIssuedByCorporateCA.pfx -nokeys -out external.cert

openssl pkcs12 -in kumaWebIssuedByCorporateCA.pfx -nocerts -nodes -out external.key

Enter the password of the PFX key when prompted (Enter Import Password).

The commands create the external.cert certificate and the external.key key in PEM format.

- In the KUMA web interface, go to the Settings → Common → Core settings section. Under External TLS pair, click Upload certificate and Upload key, and upload the external.cert file and the unencrypted external.key file in PEM format.

- Restart KUMA:

systemctl restart kuma-core

- Refresh the web page or restart the browser that you are using to manage the KUMA web interface.

The self-signed certificate of the web console is replaced with your company's certificate and key.

[Topic 217747]

Synchronizing time on servers

To configure time synchronization on servers:

- Install chrony:

sudo apt install chrony

- Configure the synchronization of system time with an NTP server:

- Make sure the virtual machine has internet access.

If the virtual machine has internet access, proceed to starting the system time synchronization service.

If the virtual machine does not have internet access, edit the /etc/chrony.conf file to replace 2.pool.ntp.org with the name or IP address of your corporate NTP server.

- Start the system time synchronization service:

sudo systemctl enable --now chronyd

- Wait a few seconds and run the following command:

sudo timedatectl | grep 'System clock synchronized'

If the system time is synchronized correctly, the output will contain "System clock synchronized: yes".

Synchronization is configured.
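The final check can be scripted so that it returns an exit code instead of requiring you to read the output. This is a minimal sketch that looks only for the line quoted above in text supplied on standard input. The function name clock_is_synchronized is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if the timedatectl output supplied on stdin
# reports that the system clock is synchronized.
clock_is_synchronized() {
    grep -q 'System clock synchronized: yes'
}
```

For example: timedatectl | clock_is_synchronized && echo "time is synchronized".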

[Topic 255123]

About the inventory file

You can install, update, or remove KUMA components by changing to the directory with the extracted kuma-ansible-installer and using the Ansible tool and a prepared inventory file. You can specify KUMA configuration settings in the inventory file; the installer then uses these settings when deploying, updating, and removing the application. The inventory file must conform to the YAML format.

You can create an inventory file based on the templates included in the distribution kit. The following templates are provided:

- single.inventory.yml.template can be used when installing KUMA on a single server. This template contains the minimum set of settings optimized for installation on a single device without using a Kubernetes cluster.

- distributed.inventory.yml.template can be used for the initial distributed installation of KUMA without using a Kubernetes cluster, for expanding an all-in-one installation to a distributed installation, and for updating KUMA.

- expand.inventory.yml.template can be used in some reconfiguration scenarios, such as adding collector and correlator servers, expanding an existing storage cluster, or adding a new storage cluster. If you use this inventory file to modify the configuration, the installer does not stop services in the entire infrastructure. If you reuse the inventory file, the installer can stop only services on hosts that are listed in the expand.inventory.yml file.

- k0s.inventory.yml.template can be used to install or migrate KUMA to a Kubernetes cluster.

We recommend saving a backup copy of the inventory file that you used to install the application. You can use it to add components to the system or remove KUMA.
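Creating an inventory file from a template amounts to copying the template under a name without the .template suffix and then editing the copy. The sketch below shows one cautious way to do that. The function name new_inventory_from_template is hypothetical, and refusing to overwrite an existing inventory file is a design choice of this example, not a behavior of the installer.

```shell
#!/bin/sh
# Hypothetical helper: copies <name>.yml.template to <name>.yml and prints the
# new file name. It refuses to overwrite an inventory file that already exists.
new_inventory_from_template() {
    local template="$1"
    local inventory="${template%.template}"
    if [ ! -f "$template" ]; then
        echo "template not found: $template" >&2
        return 1
    fi
    if [ -e "$inventory" ]; then
        echo "inventory file already exists: $inventory" >&2
        return 1
    fi
    cp "$template" "$inventory" && echo "$inventory"
}
```

Run from the directory with the extracted installer, new_inventory_from_template single.inventory.yml.template would create single.inventory.yml. Because an existing file is never overwritten, the inventory file that you used for installation stays intact.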

[Topic 255188]

KUMA settings in the inventory file

The inventory file may include the following blocks:

For each host, you must specify the FQDN in the <host name>.<domain> format and, if necessary, an IPv4 or IPv6 address. The KUMA Core domain name and its subdomains may not start with a numeral.

Example:

hosts:
  hostname.example.com:
    ip: 0.0.0.0

The 'all' block

In this block, you can specify the variables that apply to all hosts listed in the inventory file, including the implicitly specified localhost on which the installation is started. Variables can be overridden at the level of host groups or individual hosts.

Example of overriding variables in the inventory file

all:
  vars:
    ansible_connection: ssh
    deploy_to_k8s: False
    need_transfer: False
    airgap: True
    deploy_example_services: True
kuma:
  vars:
    ansible_become: true
    ansible_user: i.ivanov
    ansible_become_method: su
    ansible_ssh_private_key_file: ~/.ssh/id_rsa
  children:
    kuma_core:
      vars:
        ansible_user: p.petrov
        ansible_become_method: sudo

The table below lists all possible variables in the vars section and their descriptions.

List of possible variables in the 'vars' section

Variable

|

Description

|

ansible_connection

|

Method used to connect to target machines.

Possible values:

- ssh to connect to remote hosts over SSH.

- local to establish no connection with remote hosts.

|

ansible_user

|

User name used to connect to target machines and install components.

If root login is blocked on the target machines, choose a user that has the right to establish SSH connections and elevate privileges using su or sudo.

|

ansible_become

|

This variable specifies if you want to elevate the privileges of the user that is used to install KUMA components.

Possible values:

- true. You must specify true if ansible_user is not root.

- false.

|

ansible_become_method

|

Method for elevating the privileges of the user that is used to install KUMA components.

You must specify su or sudo if ansible_user is not root.

|

ansible_ssh_private_key_file

|

Path to the private key in the /<path>/.ssh/id_rsa format. You must specify this variable if you want to use a key file other than the default key file (~/.ssh/id_rsa).

|

deploy_to_k8s

|

This variable specifies whether you want to deploy KUMA components in a Kubernetes cluster.

Possible values:

- The false value is specified in the single.inventory.yml and distributed.inventory.yml templates.

- The true value is specified in the k0s.inventory.yml template.

If you do not specify this variable, it defaults to false.

|

need_transfer

|

This variable specifies whether you want to migrate KUMA Core to a new Kubernetes cluster.

You need to specify this variable only if deploy_to_k8s is true.

Possible values:

If you do not specify this variable, it defaults to false.

|

no_firewall_actions

|

This variable specifies whether the installer must perform the steps to configure the firewall on the hosts.

Possible values:

- true means that at startup, the installer does not perform the steps to configure the firewall on the hosts.

- false means that at startup, the installer performs the steps to configure the firewall on the hosts. This is the value that is specified in all inventory file templates.

If you do not specify this variable, it defaults to false.

|

generate_etc_hosts

|

This variable specifies whether the machines must be registered in the DNS zone of your organization.

The installer automatically adds the IP addresses of the machines from the inventory file to the /etc/hosts files on the machines on which KUMA components are installed. The specified IP addresses must be unique.

Possible values:

- false

- true

If you do not specify this variable, it defaults to false.

|

deploy_example_services

|

This variable specifies whether predefined services are created during the installation of KUMA.

You need to specify this variable if you want to create demo services independently of the single/distributed/k0s inventory file.

Possible values:

- false means predefined services are not created when installing KUMA. This is the value that is specified in all inventory file templates.

- true means predefined services are created when installing KUMA.

If you do not specify this variable, it defaults to false.

|

low_resources

|

This variable specifies whether KUMA is being installed in an environment with limited computational resources.

This variable is not specified in any of the inventory file templates.

Possible values:

- false means KUMA is being installed for production use. In this case, the installer checks the requirements of the worker nodes (CPU, RAM, and free disk space) in accordance with the hardware and software requirements. If the requirements are not satisfied, the installation is aborted with an error message.

- true means that KUMA is being installed in an environment with limited computational resources. In this case, the minimum size of the KUMA Core installation directory on the host is 4 GB. All other computational resource limitations are ignored.

If you do not specify this variable, it defaults to false.

|
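As a minimal sketch of how the connection variables in the table combine when root login over SSH is blocked, the fragment below uses a non-root account with sudo elevation and a non-default key file. The user name kuma-admin and the key path are hypothetical placeholders, and placing these variables under kuma: vars: simply follows the layout of the example inventory files in this guide; it is an assumption, not a confirmed requirement.

```yaml
# Sketch only: kuma-admin and the key path are assumed placeholder values.
kuma:
  vars:
    ansible_connection: ssh
    ansible_user: kuma-admin        # hypothetical non-root account
    ansible_become: true            # must be true because ansible_user is not root
    ansible_become_method: sudo     # su or sudo
    ansible_ssh_private_key_file: /home/kuma-admin/.ssh/id_rsa
```

If the default key file (~/.ssh/id_rsa) of the connecting user is used, the ansible_ssh_private_key_file line can be left out.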

The 'kuma' block

In this block, you can specify the settings of KUMA components deployed outside of the Kubernetes cluster. The kuma block can contain the following sections:

- vars contains variables that apply to all hosts specified in the kuma block.

- children contains groups of settings for components:

  - kuma_core contains settings of the KUMA Core. You can specify only one host and the following MongoDB database log rotation settings for the host:

    - mongo_log_archives_number is the number of previous logs that you want to keep when rotating the MongoDB database log.

    - mongo_log_file_size is the size of the MongoDB database log, in gigabytes, at which rotation begins. If the MongoDB database log never exceeds the specified size, no rotation occurs.

    - mongo_log_frequency_rotation is the interval for checking the size of the MongoDB database log for rotation purposes. Possible values:

      - hourly means the size of the MongoDB database log is checked every hour.

      - daily means the size of the MongoDB database log is checked every day.

      - weekly means the size of the MongoDB database log is checked every week.

    The MongoDB database log is stored in the /opt/kaspersky/kuma/mongodb/log directory.

    - raft_node_addr is the FQDN on which you want raft to listen for signals from other nodes. This value must be specified in the <host FQDN>:<port> format. If this setting is not specified explicitly, <host FQDN> defaults to the FQDN of the host on which the KUMA Core is deployed, and <port> defaults to 7209. You can specify an address of your choosing to adapt the KUMA Core to the configuration of your infrastructure.

  - kuma_collector contains settings of KUMA collectors. You can specify multiple hosts.

  - kuma_correlator contains settings of KUMA correlators. You can specify multiple hosts.

  - kuma_storage contains settings of KUMA storage nodes. You can specify multiple hosts as well as shard, replica, and keeper IDs for hosts using the following settings:

    - shard is the shard ID.

    - replica is the replica ID.

    - keeper is the keeper ID.

The specified shard, replica, and keeper IDs are used only if you are deploying demo services as part of a fresh KUMA installation. In other cases, the shard, replica, and keeper IDs that you specified in the KUMA web interface when creating a resource set for the storage are used.
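The kuma_core settings described above could be written as in the following sketch. The host name and the values are illustrative, and listing raft_node_addr as a host-level setting next to the log rotation settings is an assumption based on how the settings are grouped in this section.

```yaml
# Sketch only: host name and values are placeholders, not recommendations.
kuma:
  children:
    kuma_core:
      hosts:
        kuma-core-1.example.com:
          mongo_log_archives_number: 14        # keep 14 rotated logs
          mongo_log_frequency_rotation: weekly # hourly, daily, or weekly
          mongo_log_file_size: 1G              # rotation starts at this size
          # Assumed placement; format is <host FQDN>:<port>, default port 7209.
          raft_node_addr: "kuma-core-1.example.com:7209"
```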

The 'kuma_k0s' block

In this block, you can specify the settings of the Kubernetes cluster that ensures high availability of KUMA. This block is specified only in an inventory file based on k0s.inventory.yml.template.

For test and demo installations in environments with limited computational resources, you must also set low_resources: true in the all block. In this case, the minimum size of the KUMA Core installation directory is reduced to 4 GB and the limitations of other computational resources are ignored.

For each host in the kuma_k0s block, a unique FQDN and IP address must be specified in the ansible_host variable, except for the host in the kuma_lb section. For the host in the kuma_lb section, only the FQDN must be specified. Hosts must be unique within a group.

For a demo installation, you may combine a controller with a worker node. Such a configuration does not provide high availability of the KUMA Core and is only intended for demonstrating the functionality or for testing the software environment.
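A demo layout of this kind might look like the sketch below, which combines the primary controller and the worker role on one host and relaxes the resource checks. The host name and IP address are hypothetical, and the other groups of the kuma_k0s block (for example, kuma_lb) are omitted for brevity rather than because they are known to be optional.

```yaml
# Sketch only: demo/test layout, no high availability of the KUMA Core.
all:
  vars:
    deploy_to_k8s: true
    low_resources: true   # skips the production resource checks
kuma_k0s:
  children:
    kuma_control_plane_master_worker:
      hosts:
        kuma-cpw-1.example.com:       # hypothetical host name
          ansible_host: 10.0.0.2      # hypothetical IP address
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"
```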

The minimal configuration that ensures high availability is 3 controllers, 2 worker nodes, and 1 nginx load balancer. In production, we recommend using dedicated worker nodes and controllers. If a cluster controller also carries workload and hosts the pod with the KUMA Core, access to the KUMA Core is completely lost when that controller goes down.

The kuma_k0s block can contain the following sections:

- vars contains variables that apply to all hosts specified in the kuma_k0s block.

- children contains settings of the Kubernetes cluster that provides high availability of KUMA.

The following table lists possible variables in the vars section and their descriptions.

List of possible variables in the vars section

|

|

|

Group of variables

|

Description

|

kuma_lb

|

FQDN of the load balancer. You can install the nginx load balancer or a third-party TCP load balancer.

If you are installing the nginx load balancer, you can set kuma_managed_lb=true to automatically configure the nginx load balancer when installing KUMA, open the necessary network ports on the nginx load balancer host (6443, 8132, 9443, 7209, 7210, 7220, 7222, 7223, 7226, 8429), and restart to apply the changes.

If you are installing a third-party TCP load balancer, you must manually configure it before installing KUMA.

|

kuma_control_plane_master

|

The host that acts as the primary controller of the cluster.

|

Groups for specifying the primary controller. You only need to specify a host for one group.

|

kuma_control_plane_master_worker

|

A host that combines the role of the primary controller and a worker node of the cluster. For each cluster controller that is combined with a worker node, in the inventory file, you must specify extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true".

|

kuma_control_plane

|

Hosts that act as controllers in the cluster.

|

Groups for specifying secondary controllers.

|

kuma_control_plane_worker

|

Hosts that combine the roles of controller and worker node in the cluster. For each cluster controller that is combined with a worker node, in the inventory file, you must specify extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true".

|

kuma_worker

|

Worker nodes of the cluster. For each worker node, in the inventory file, you must specify extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true".

|

ipAutodetectionMethod

|

If multiple network interfaces are being used on the worker nodes of the cluster at the same time, the ipAutodetectionMethod variable lets you specify a name mask of the network interface to be used for communication between the worker nodes in the cluster.

For example, if you want to use only network interfaces named ethN (where N is the number of the network interface) for communication between worker nodes of the cluster, you can specify the variable as follows:

kuma_k0s:
  vars:
    ip_autodetection_method: "interface=eth.*"

This makes the cluster use a network interface with a name that matches the eth.* mask.

If the network interface name on each worker node is the same, for example eth0, you can specify the variable without a mask:

kuma_k0s:
  vars:
    ip_autodetection_method: "interface=eth0"

For more information, please refer to the Calico Open Source documentation.

|
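The minimal high availability layout described in this block (3 controllers, 2 worker nodes, 1 nginx load balancer) might be laid out as in the following sketch. All host names and IP addresses are hypothetical (the addresses mirror the ranges used in the nginx example in this guide), and writing kuma_managed_lb as a host-level variable of the load balancer is an assumption.

```yaml
# Sketch only: host names, IP addresses, and the placement of
# kuma_managed_lb are assumptions for illustration.
kuma_k0s:
  children:
    kuma_lb:
      hosts:
        kuma-lb.example.com:              # FQDN only, no ansible_host
          kuma_managed_lb: true           # let the installer configure nginx
    kuma_control_plane_master:
      hosts:
        kuma-cp-1.example.com:
          ansible_host: 10.0.0.2
    kuma_control_plane:
      hosts:
        kuma-cp-2.example.com:
          ansible_host: 10.0.0.3
        kuma-cp-3.example.com:
          ansible_host: 10.0.0.4
    kuma_worker:
      hosts:
        kuma-worker-1.example.com:
          ansible_host: 10.0.1.2
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"
        kuma-worker-2.example.com:
          ansible_host: 10.0.1.3
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"
```

Only one of the two primary controller groups (kuma_control_plane_master or kuma_control_plane_master_worker) needs a host, so the combined groups are left out here.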

[Topic 244406][Topic 217908]

Preparing the single.inventory.yml inventory file

KUMA components are installed, updated, and removed from the directory containing the extracted installer. This is done with the Ansible tool and a user-created YML inventory file that lists the hosts of KUMA components and other settings. If you want to install all KUMA components on the same server, you must specify the same host for all components in the inventory file.

To create an inventory file for installation on a single server:

- Copy the kuma-ansible-installer-<version>.tar.gz installer archive to the server and extract it using the following command (about 2 GB of disk space is required):

sudo tar -xpf kuma-ansible-installer-<version>.tar.gz

- Go to the KUMA installer directory by executing the following command:

cd kuma-ansible-installer

- Copy the single.inventory.yml.template and create an inventory file named single.inventory.yml:

cp single.inventory.yml.template single.inventory.yml

- Edit the settings in the single.inventory.yml inventory file.

If you want predefined services to be created during the installation, set deploy_example_services to true.

deploy_example_services: true

The predefined services will appear only as a result of the initial installation of KUMA. If you are upgrading the system using the same inventory file, the predefined services are not re-created.

- Replace all kuma.example.com strings in the inventory file with the name of the host on which you want to install KUMA components.

The inventory file is created. Now you can use it to install KUMA on a single server.

We recommend backing up the inventory file that you used to install the program. You can use it to add components to the system or remove KUMA.

Example inventory file for installation on a single server

all:
  vars:
    deploy_to_k8s: false
    need_transfer: false
    generate_etc_hosts: false
    deploy_example_services: true
    no_firewall_actions: false
kuma:
  vars:
    ansible_connection: ssh
    ansible_user: root
  children:
    kuma_core:
      hosts:
        kuma1.example.com:
          mongo_log_archives_number: 14
          mongo_log_frequency_rotation: daily
          mongo_log_file_size: 1G
    kuma_collector:
      hosts:
        kuma1.example.com
    kuma_correlator:
      hosts:
        kuma1.example.com
    kuma_storage:
      hosts:
        kuma1.example.com:
          shard: 1
          replica: 1
          keeper: 1

[Topic 222158]

Installing the program on a single server

You can install all KUMA components on a single server using the Ansible tool and the single.inventory.yml inventory file.

To install KUMA on a single server:

- Download the kuma-ansible-installer-<build number>.tar.gz KUMA distribution kit to the server and extract it. The archive is extracted into the kuma-ansible-installer directory.

- Go to the directory with the extracted installer.

- Depending on the type of license activation that you are planning to use, do one of the following:

- If you want to activate your license with a file, place the file with the license key in <installer directory>/roles/kuma/files/.

The key file must be named license.key.

sudo cp <key file>.key <installer directory>/roles/kuma/files/license.key

- If you want to activate with a license code, go to the next step of the instructions.

Activation using a license code is available starting with KUMA 3.4. For earlier versions of KUMA, you must activate the license with a file.

- Run the following command to start the component installation with your prepared single.inventory.yml inventory file:

sudo ./install.sh single.inventory.yml

- Accept the terms of the End User License Agreement.

If you do not accept the terms and conditions of the End User License Agreement, the application cannot be installed.

Depending on the type of license activation, running the installer has one of the following results:

- If you want to activate the license using a file and have placed the file with the license key in "<installer directory>/roles/kuma/files/", running the installer with the "single.inventory.yml" inventory file installs KUMA Core, all services specified in the inventory file, and OOTB resources. If deploy_example_services: true is set in the inventory file, demo services are installed.

- If you want to activate with a license code or provide a license file later, running the installer with the "single.inventory.yml" inventory file installs only KUMA Core.

To install the services, specify the license code on the command line. Then run the postinstall.sh installer with the "single.inventory.yml" inventory file.

sudo ./postinstall.sh single.inventory.yml

This creates the specified services. You can select the resources that you want to import from the repository.

- After the installation is complete, log in to the KUMA web interface: enter the address of the KUMA web interface in the address bar of your browser, then enter your credentials on the login page.

The address of the KUMA web interface is https://<FQDN of the host where KUMA is installed>:7220.

Default login credentials:

- login: admin

- password: mustB3Ch@ng3d!

After logging in for the first time, change the password of the admin account.

All KUMA components are installed and you are logged in to the web interface.

We recommend saving a backup copy of the inventory file that you used to install the application. You can use this inventory file to add components to the system or remove KUMA.

You can expand the installation to a distributed installation.

[Topic 222159][Topic 217917]

Preparing the control machine

To prepare the control machine for installing KUMA:

- Ensure that hardware, software, and installation requirements of the application are met.

- Generate an SSH key for authentication on the SSH servers of the target machines:

sudo ssh-keygen -f /root/.ssh/id_rsa -N "" -C kuma-ansible-installer

If SSH root access is blocked on the control machine, generate an SSH key for authentication on the SSH servers of the target machines for a user from the sudo group:

If the user that you want to use does not have sudo rights, add the user to the sudo group:

usermod -aG sudo user

ssh-keygen -f /home/<name of the user from the sudo group>/.ssh/id_rsa -N "" -C kuma-ansible-installer

As a result, the key is generated and saved in the user's home directory. To make the key available during installation, you must specify the full path to the key in the inventory file, in the ansible_ssh_private_key_file setting.

- Make sure that the control machine has network access to all the target machines by host name and copy the SSH key to each target machine:

sudo ssh-copy-id -i /root/.ssh/id_rsa root@<host name of the target machine>

If SSH root access is blocked on the control machine and you want to use the SSH key from the home directory of the user from the sudo group, make sure that the control machine has network access to all target machines by host name and copy the SSH key to each target machine:

ssh-copy-id -i /home/<name of the user in the sudo group>/.ssh/id_rsa <name of the user in the sudo group>@<host name of the target machine>

- Copy the kuma-ansible-installer-<version>.tar.gz installer archive to the control machine and extract it using the following command (approximately 2 GB of disk space is required):

sudo tar -xpf kuma-ansible-installer-<version>.tar.gz

The control machine is prepared for installing KUMA.

[Topic 222083]

Preparing the target machine

To prepare the target machine for the installation of KUMA components:

- Ensure that hardware, software, and installation requirements are met.

- Specify the host name. We recommend specifying an FQDN, for example, kuma1.example.com.

Do not change the KUMA host name after installation: this will make it impossible to verify the authenticity of certificates and will disrupt the network communication between the application components.

- Register the target machine in your organization's DNS zone to allow host names to be resolved to IP addresses.

If your organization does not use a DNS server, you can use the /etc/hosts file for name resolution. The content of the files can be automatically generated for each target machine when installing KUMA.

- To get the hostname that you must specify when installing KUMA, run the following command and record the result:

hostname -f

The control machine must be able to access the target machine using this name.

The target machine is ready for the installation of KUMA components.
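If name resolution relies on /etc/hosts rather than DNS, the inventory might be prepared along the lines of the sketch below. The ip host variable appears in the distributed example inventory of this guide; that the installer takes the /etc/hosts addresses from this field is an assumption, and the addresses shown (from the 192.0.2.0/24 documentation range) are placeholders.

```yaml
# Sketch only: assumes the per-host ip value feeds the generated /etc/hosts.
all:
  vars:
    generate_etc_hosts: true
kuma:
  children:
    kuma_core:
      hosts:
        kuma-core-1.example.com:
          ip: 192.0.2.10     # placeholder; each address must be unique
    kuma_collector:
      hosts:
        kuma-collector-1.example.com:
          ip: 192.0.2.11     # placeholder
```

The host names used as keys would be the values returned by hostname -f on the respective target machines.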

[Topic 217955]

Preparing the distributed.inventory.yml inventory file

To create the distributed.inventory.yml inventory file:

- Go to the KUMA installer folder by executing the following command:

cd kuma-ansible-installer

- Create an inventory file named distributed.inventory.yml by copying distributed.inventory.yml.template:

cp distributed.inventory.yml.template distributed.inventory.yml

- Edit the settings in the distributed.inventory.yml inventory file.

We recommend backing up the inventory file that you used to install the program. You can use it to add components to the system or remove KUMA.

Example inventory file for distributed installation

all:
  vars:
    deploy_to_k8s: false
    need_transfer: false
    generate_etc_hosts: false
    deploy_example_services: false
    no_firewall_actions: false
kuma:
  vars:
    ansible_connection: ssh
    ansible_user: root
  children:
    kuma_core:
      hosts:
        kuma-core-1.example.com:
          ip: 0.0.0.0
          mongo_log_archives_number: 14
          mongo_log_frequency_rotation: daily
          mongo_log_file_size: 1G
    kuma_collector:
      hosts:
        kuma-collector-1.example.com:
          ip: 0.0.0.0
    kuma_correlator:
      hosts:
        kuma-correlator-1.example.com:
          ip: 0.0.0.0
    kuma_storage:
      hosts:
        kuma-storage-cluster1-server1.example.com:
          ip: 0.0.0.0
          shard: 1
          replica: 1
          keeper: 0
        kuma-storage-cluster1-server2.example.com:
          ip: 0.0.0.0
          shard: 1
          replica: 2
          keeper: 0
        kuma-storage-cluster1-server3.example.com:
          ip: 0.0.0.0
          shard: 2
          replica: 1
          keeper: 0
        kuma-storage-cluster1-server4.example.com:
          ip: 0.0.0.0
          shard: 2
          replica: 2
          keeper: 0
        kuma-storage-cluster1-server5.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 1
        kuma-storage-cluster1-server6.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 2
        kuma-storage-cluster1-server7.example.com:
          ip: 0.0.0.0
          shard: 0
          replica: 0
          keeper: 3

[Topic 222085]

Installing the program in a distributed configuration

KUMA is installed using the Ansible tool and a YML inventory file. The installation is performed from the control machine, and all of the KUMA components are installed on target machines.

To install KUMA:

- On the control machine, go to the directory containing the extracted installer.

cd kuma-ansible-installer

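- Depending on the type of license activation that you plan to use, do one of the following:

- If you want to activate your license with a file, place the file with the license key in <installer directory>/roles/kuma/files/.

The key file must be named license.key.

sudo cp <key file>.key <installer directory>/roles/kuma/files/license.key

- If you want to activate with a license code, go to the next step of the instructions.

Activation using a license code is available starting with KUMA 3.4. For earlier versions of KUMA, you must activate the license with a file.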

- From the directory with the extracted installer, start the installation of components using the prepared inventory file, distributed.inventory.yml:

sudo ./install.sh distributed.inventory.yml

- Accept the terms and conditions of the End User License Agreement.

If you do not accept the terms and conditions of the End User License Agreement, the application cannot be installed.

Depending on the type of license activation, the installer produces one of the following results:

- If you want to activate the license using a file and have placed the file with the license key in "<installer directory>/roles/kuma/files/", running the installer with the "distributed.inventory.yml" inventory file installs KUMA Core, all services specified in the inventory file, and OOTB resources.

- If you want to activate with a license code or provide a license file later, running the installer with the "distributed.inventory.yml" inventory file installs only KUMA Core.

To install the services, specify the license code on the command line. Then run the postinstall.sh installer with the "distributed.inventory.yml" inventory file.

sudo ./postinstall.sh distributed.inventory.yml

This creates the specified services. You can select the resources that you want to import from the repository.

- After the installation is complete, log in to the KUMA web interface: enter the address of the KUMA web interface in the address bar of your browser, then enter your credentials on the login page.

The address of the KUMA web interface is https://<FQDN of the host where KUMA is installed>:7220.

Default login credentials:

- login: admin

- password: mustB3Ch@ng3d!

After logging in for the first time, change the password of the admin account.

All KUMA components are installed and you are logged in to the web interface.

We recommend saving a backup copy of the inventory file that you used to install the application. You can use this inventory file to add components to the system or remove KUMA.

[Topic 217914]

Distributed installation in a high availability configuration

The high availability configuration of KUMA involves deploying the KUMA Core on a Kubernetes cluster and using an external TCP traffic balancer.

To create a high availability KUMA installation, use the kuma-ansible-installer-ha-<build number>.tar.gz installer and prepare the k0s.inventory.yml inventory file by specifying the configuration of your cluster. For a new installation in a high availability configuration, OOTB resources are always imported. You can also perform an installation with deployment of demo services. To do this, set "deploy_example_services: true" in the inventory file.
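For orientation, the all block of a k0s.inventory.yml file for a fresh high availability installation with demo services might contain the values sketched below. Only the variables discussed in this guide are shown; the rest of the file is omitted, and the need_transfer value assumes that no existing KUMA Core is being migrated.

```yaml
# Sketch only: fragment of the all block, other sections omitted.
all:
  vars:
    deploy_to_k8s: true              # value used by the k0s template
    need_transfer: false             # assumed: fresh installation, no migration
    deploy_example_services: true    # also deploy the demo services
```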

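You can deploy KUMA Core on a Kubernetes cluster in the following ways:

- Install KUMA on a Kubernetes cluster from scratch.

- Migrate the Core of an existing KUMA installation to a Kubernetes cluster.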

Minimum configuration

Kubernetes has 2 node roles:

- Controllers (control-plane). Nodes with this role manage the cluster, store metadata, and balance the workload.

- Workers (worker). Nodes with this role bear the workload by hosting KUMA processes.

To deploy KUMA in a high availability configuration, you need:

- 3 dedicated controllers

- 2 worker nodes

- 1 TCP balancer

You must not use the balancer as the control machine for running the KUMA installer.

To ensure the adequate performance of the KUMA Core in Kubernetes, you must allocate 3 dedicated nodes that have only the controller role. This will provide high availability for the Kubernetes cluster itself and will ensure that the workload (KUMA processes and other processes) cannot affect the tasks involved in managing the Kubernetes cluster. If you are using virtualization tools, make sure that the nodes are hosted on different physical servers and that these physical servers are not being used as worker nodes.

For a demo installation of KUMA, you may combine the controller and worker roles. However, if you are expanding an installation to a distributed installation, you must reinstall the entire Kubernetes cluster and allocate 3 dedicated nodes with the controller role and at least 2 nodes with the worker role. KUMA cannot be upgraded to later versions if any of the nodes combine the controller and worker roles.

[Topic 244396]

Additional requirements for deploying KUMA Core in Kubernetes

If you plan to protect KUMA's network infrastructure using Kaspersky Endpoint Security for Linux, first install KUMA in the Kubernetes cluster and only then deploy Kaspersky Endpoint Security for Linux. When updating or removing KUMA, you must first stop Kaspersky Endpoint Security for Linux using the following command:

systemctl stop kesl

When you install KUMA in a high availability configuration, the following requirements must be met:

- General application installation requirements.

- The hosts that you plan to use for Kubernetes cluster nodes must not use IP addresses from the following Kubernetes ranges:

- serviceCIDR: 10.96.0.0/12

- podCIDR: 10.244.0.0/16

Traffic to proxy servers must be excluded for the IP addresses from these ranges.

- Each host must have a unique ID (/etc/machine-id).

- The firewalld or ufw firewall management tool must be installed and enabled on the hosts for adding rules to iptables.

- The nginx load balancer must be installed and configured (for details, please refer to the nginx load balancer documentation). You can install the nginx load balancer using one of the following commands:

- sudo yum install nginx (for Oracle Linux)

- sudo apt install nginx-full (for Astra Linux)

- sudo apt install nginx libnginx-mod-stream (for Ubuntu)

- sudo yum install nginx nginx-all-modules (for RED OS)

If you want the nginx load balancer to be configured automatically during the KUMA installation, install the nginx load balancer and allow SSH access to it in the same way as for the Kubernetes cluster hosts.

Example of an automatically created nginx configuration

The installer creates the /etc/nginx/kuma_nginx_lb.conf configuration file. An example of the file contents is provided below. The upstream sections are generated dynamically and contain the IP addresses of the Kubernetes cluster controllers (in the example, 10.0.0.2-4 in the upstream kubeAPI_backend, upstream konnectivity_backend, and upstream controllerJoinAPI_backend sections) and the IP addresses of the worker nodes (in the example, 10.0.1.2-3) for which the inventory file contains the "kaspersky.com/kuma-ingress=true" value in the extra_args variable.

The "include /etc/nginx/kuma_nginx_lb.conf;" line must be added to the end of the /etc/nginx/nginx.conf file to apply the generated configuration file. If you have a large number of active services and users, you may need to increase the limit of open files in the nginx.conf settings.

Configuration file example:

# Ansible managed
#
# LB KUMA cluster
#
stream {
    server {
        listen 6443;
        proxy_pass kubeAPI_backend;
    }
    server {
        listen 8132;
        proxy_pass konnectivity_backend;
    }
    server {
        listen 9443;
        proxy_pass controllerJoinAPI_backend;
    }
    server {
        listen 7209;
        proxy_pass kuma-core-hierarchy_backend;
        proxy_timeout 86400s;
    }
    server {
        listen 7210;
        proxy_pass kuma-core-services_backend;
        proxy_timeout 86400s;
    }
    server {
        listen 7220;
        proxy_pass kuma-core-ui_backend;
        proxy_timeout 86400s;
    }
    server {
        listen 7222;
        proxy_pass kuma-core-cybertrace_backend;
        proxy_timeout 86400s;
    }
    server {
        listen 7223;
        proxy_pass kuma-core-rest_backend;
        proxy_timeout 86400s;
    }
    upstream kubeAPI_backend {
        server 10.0.0.2:6443;
        server 10.0.0.3:6443;
        server 10.0.0.4:6443;
    }
    upstream konnectivity_backend {
        server 10.0.0.2:8132;
        server 10.0.0.3:8132;
        server 10.0.0.4:8132;
    }
    upstream controllerJoinAPI_backend {
        server 10.0.0.2:9443;
        server 10.0.0.3:9443;
        server 10.0.0.4:9443;
    }
    upstream kuma-core-hierarchy_backend {
        server 10.0.1.2:7209;
        server 10.0.1.3:7209;
    }
    upstream kuma-core-services_backend {
        server 10.0.1.2:7210;
        server 10.0.1.3:7210;
    }
    upstream kuma-core-ui_backend {
        server 10.0.1.2:7220;
        server 10.0.1.3:7220;
    }
    upstream kuma-core-cybertrace_backend {
        server 10.0.1.2:7222;
        server 10.0.1.3:7222;
    }
    upstream kuma-core-rest_backend {
        server 10.0.1.2:7223;
        server 10.0.1.3:7223;
    }
}

worker_rlimit_nofile 1000000;

events {
    worker_connections 20000;
}

# worker_rlimit_nofile is the limit on the number of open files (RLIMIT_NOFILE) for workers. This is used to raise the limit without restarting the main process.
# worker_connections is the maximum number of connections that a worker can open simultaneously.

- An access key from the device on which KUMA is installed must be added to the nginx load balancer server.

- On the nginx load balancer server, the SELinux module must be disabled in the operating system.

- The tar and systemctl packages must be installed on the hosts.

During KUMA installation, the hosts are automatically checked to see if they meet the following hardware requirements:

- CPU cores (threads): 12 or more

- RAM: 22,528 MB or more

- Free disk space in the /opt partition: 1000 GB or more.

- For an installation from scratch, the /var/lib partition must have at least 32 GB of free space. If the cluster already has been installed on this node, the size of the required free space is reduced by the size of the /var/lib/k0s directory.

If these conditions are not satisfied, the installation is aborted. For a demo installation, you can disable the check of these conditions by setting low_resources: true in the inventory file.

Additional requirements when installing on the Astra Linux or Ubuntu operating systems

- Installing KUMA in a high availability configuration is supported for Astra Linux Special Edition RUSB.10015-01 (2022-1011SE17MD, update 1.7.2.UU.1). Kernel version 5.15.0.33 or later is required.

- The following packages must be installed on the machines intended for deploying a Kubernetes cluster:

- open-iscsi

- wireguard

- wireguard-tools

To install the packages, run the following command:

sudo apt install open-iscsi wireguard wireguard-tools

Additional requirements when installing on the Oracle Linux, RED OS, or Red Hat Enterprise Linux operating systems

The following packages must be installed on the machines intended for deploying the Kubernetes cluster:

- iscsi-initiator-utils

- wireguard-tools

Before installing the packages on Oracle Linux, you must add the EPEL repository as a source of packages using one of the following commands:

- sudo yum install oracle-epel-release-el8 (for Oracle Linux 8)

- sudo yum install oracle-epel-release-el9 (for Oracle Linux 9)

To install the packages, run the following command:

sudo yum install iscsi-initiator-utils wireguard-tools

[Topic 244399]

Installing KUMA on a Kubernetes cluster from scratch

The distributed installation of KUMA involves several steps:

- Verifying that the hardware, software, and installation requirements for KUMA are satisfied.

- Preparing the control machine.

The control machine is used during the application installation process to extract and run the installer files.

- Preparing the target machines.

The program components are installed on the target machines.

- Preparing the k0s.inventory.yml inventory file.

Create an inventory file with a description of the network structure of program components. The installer uses this inventory file to deploy KUMA.

- Installing the program.

Install the application and log in to the web interface.

- Creating services.

Create the client part of the services in the KUMA web interface and install the server part of the services on the target machines.

Make sure the KUMA installation is complete before you install KUMA services. We recommend installing services in the following order: storage, collectors, correlators, agents.

When deploying several KUMA services on the same host, during installation, you must specify unique ports for each service using the --api.port <port> parameter.

If necessary, you can change the certificate of the KUMA web console to use your company's certificate.

[Topic 269330]

Preparing the control machine

To prepare the control machine for installing KUMA:

- Ensure that hardware, software, and installation requirements of the application are met.

- Generate an SSH key for authentication on the SSH servers of the target machines:

sudo ssh-keygen -f /root/.ssh/id_rsa -N "" -C kuma-ansible-installer

If SSH root access is blocked on the control machine, generate the SSH key for a user from the sudo group instead.

If the user that you want to use does not have sudo rights, first add the user to the sudo group:

usermod -aG sudo user

Then generate the key for that user:

ssh-keygen -f /home/<name of the user from the sudo group>/.ssh/id_rsa -N "" -C kuma-ansible-installer

As a result, the key is generated and saved in the user's home directory. To make the key available during installation, you must specify the full path to the key in the inventory file, in the ansible_ssh_private_key_file setting.

- Make sure that the control machine has network access to all the target machines by host name and copy the SSH key to each target machine:

sudo ssh-copy-id -i /root/.ssh/id_rsa root@<host name of the target machine>

If SSH root access is blocked on the control machine and you want to use the SSH key from the home directory of the user from the sudo group, make sure that the control machine has network access to all target machines by host name and copy that key to each target machine:

ssh-copy-id -i /home/<name of the user from the sudo group>/.ssh/id_rsa <name of the user from the sudo group>@<host name of the target machine>

- Copy the kuma-ansible-installer-ha-<version number>.tar.gz installer archive to the control machine and extract it using the following command:

sudo tar -xpf kuma-ansible-installer-ha-<version number>.tar.gz

The control machine is ready for the KUMA installation.
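After the key has been copied, you may want to confirm that key-based login works for every target machine before you start the installer. The sketch below is one possible check, not part of the KUMA installer: the function name check_ssh_access is hypothetical, and the host names in the usage comment are taken from the examples in this guide. It relies only on the standard OpenSSH options BatchMode and ConnectTimeout.

```shell
# Report which target machines accept key-based SSH logins.
# Arguments: <private key path> <user> <host> [<host> ...]
# Returns a non-zero status if at least one host cannot be reached.
check_ssh_access() {
    local key="$1" user="$2"
    shift 2
    local host failed=0
    for host in "$@"; do
        # BatchMode=yes makes ssh fail instead of prompting for a password,
        # so a missing or rejected key is reported as FAIL.
        if ssh -i "$key" -o BatchMode=yes -o ConnectTimeout=5 \
               "$user@$host" true 2>/dev/null; then
            echo "OK    $host"
        else
            echo "FAIL  $host"
            failed=1
        fi
    done
    return "$failed"
}

# Usage (run as the user that owns the key):
#   check_ssh_access /root/.ssh/id_rsa root kuma-core.example.com kuma-w1.example.com
```

A FAIL line usually means that the key was not copied to that host or that the host name does not resolve from the control machine.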


Preparing the target machine

To prepare the target machine for the installation of KUMA components:

- Ensure that hardware, software, and installation requirements are met.

- Specify the host name. We recommend specifying an FQDN, for example, kuma1.example.com.

Do not change the KUMA host name after installation: this will make it impossible to verify the authenticity of certificates and will disrupt the network communication between the application components.

- Register the target machine in your organization's DNS zone to allow host names to be translated to IP addresses.

The option of using the /etc/hosts file is not available when the Core is deployed in Kubernetes.

- To get the hostname that you must specify when installing KUMA, run the following command and record the result:

hostname -f

The control machine must be able to access the target machine using this name.

The target machine is ready for the installation of KUMA components.
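The host name checks above can be sketched as a small script. This is an illustration under assumptions: the function name is_fqdn is hypothetical, and for the purposes of this check a name counts as an FQDN when it contains at least one dot and no empty labels. The usage comment additionally assumes that getent is available on the target machine, as it is on typical Linux distributions.

```shell
# Succeed if the given name looks like a fully qualified domain name:
# at least one dot, and no leading, trailing, or doubled dots.
is_fqdn() {
    case "$1" in
        .*|*.|*..*) return 1 ;;   # empty labels are rejected
        *.*)        return 0 ;;   # host part plus domain part
        *)          return 1 ;;   # short name without a domain
    esac
}

# Usage on a target machine:
#   name="$(hostname -f)"
#   is_fqdn "$name" && getent hosts "$name"
# The second command prints the address that the name resolves to.
```

Run the name resolution part on the control machine as well, because the control machine must reach the target machine by this name.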


Preparing the k0s.inventory.yml inventory file

To create the k0s.inventory.yml inventory file:

- Go to the KUMA installer folder by executing the following command:

cd kuma-ansible-installer-ha

- Copy the k0s.inventory.yml.template file to create the k0s.inventory.yml inventory file:

cp k0s.inventory.yml.template k0s.inventory.yml

- Edit the inventory file settings in k0s.inventory.yml.

Example inventory file for a distributed installation in a high availability configuration with 3 controllers, 2 worker nodes, and 1 balancer

all:
  vars:
    ansible_connection: ssh
    ansible_user: root
    deploy_to_k8s: true
    need_transfer: false
    generate_etc_hosts: false
    deploy_example_services: false
kuma:
  children:
    kuma_core:
      hosts:
        kuma-core.example.com:
          mongo_log_archives_number: 14
          mongo_log_frequency_rotation: daily
          mongo_log_file_size: 1G
    kuma_collector:
      hosts:
        kuma-collector.example.com:
    kuma_correlator:
      hosts:
        kuma-correlator.example.com:
    kuma_storage:
      hosts:
        kuma-storage-cluster1.server1.example.com:
        kuma-storage-cluster1.server2.example.com:
        kuma-storage-cluster1.server3.example.com:
        kuma-storage-cluster1.server4.example.com:
        kuma-storage-cluster1.server5.example.com:
        kuma-storage-cluster1.server6.example.com:
        kuma-storage-cluster1.server7.example.com:
kuma_k0s:
  children:
    kuma_lb:
      hosts:
        kuma-lb.example.com:
          kuma_managed_lb: true
    kuma_control_plane_master:
      hosts:
        kuma_cpm.example.com:
          ansible_host: 10.0.1.10
    kuma_control_plane_master_worker:
    kuma_control_plane:
      hosts:
        kuma_cp2.example.com:
          ansible_host: 10.0.1.11
        kuma_cp3.example.com:
          ansible_host: 10.0.1.12
    kuma_control_plane_worker:
    kuma_worker:
      hosts:
        kuma-w1.example.com:
          ansible_host: 10.0.2.11
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"
        kuma-w2.example.com:
          ansible_host: 10.0.2.12
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"

For such a configuration, specify the parameters as follows: need_transfer: false, deploy_example_services: false; in the kuma_storage section, list the servers for the storage cluster. After the installation is complete, you can use the KUMA web interface to assign the shard, replica and keeper roles to the servers specified in the inventory.
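Because a wrong value for any of these flags changes what the installer does, a quick check of the edited file can be useful before you launch the installation. The following is a rough sketch based on plain text matching, not a YAML parser; the function name check_k8s_flags is hypothetical, and it assumes that each setting is written on its own line exactly as in the example above.

```shell
# Check that an inventory file sets the three deployment flags as expected.
# Arguments: <inventory file> <expected need_transfer value>
#   need_transfer is "false" for a new installation and "true" when the
#   Core is migrated from an existing distributed installation.
check_k8s_flags() {
    local inventory="$1" need_transfer="$2"
    local setting status=0
    for setting in "deploy_to_k8s: true" \
                   "need_transfer: $need_transfer" \
                   "deploy_example_services: false"; do
        if grep -Eq "^[[:space:]]*${setting}[[:space:]]*\$" "$inventory"; then
            echo "ok: $setting"
        else
            echo "missing or different: $setting"
            status=1
        fi
    done
    return "$status"
}

# Usage for a new installation in a high availability configuration:
#   check_k8s_flags k0s.inventory.yml false
```

The function returns a non-zero status if any of the three settings is absent or has a different value, so it can also be used as a guard in a wrapper script.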

Example inventory file for migrating the Core from a distributed installation to a Kubernetes cluster to ensure high availability

all:
  vars:
    ansible_connection: ssh
    ansible_user: root
    deploy_to_k8s: true
    need_transfer: true
    generate_etc_hosts: false
    deploy_example_services: false
kuma:
  children:
    kuma_core:
      hosts:
        kuma-core.example.com:
          mongo_log_archives_number: 14
          mongo_log_frequency_rotation: daily
          mongo_log_file_size: 1G
    kuma_collector:
      hosts:
        kuma-collector.example.com:
    kuma_correlator:
      hosts:
        kuma-correlator.example.com:
    kuma_storage:
      hosts:
        kuma-storage-cluster1.server1.example.com:
        kuma-storage-cluster1.server2.example.com:
        kuma-storage-cluster1.server3.example.com:
        kuma-storage-cluster1.server4.example.com:
        kuma-storage-cluster1.server5.example.com:
        kuma-storage-cluster1.server6.example.com:
        kuma-storage-cluster1.server7.example.com:
kuma_k0s:
  children:
    kuma_lb:
      hosts:
        kuma-lb.example.com:
          kuma_managed_lb: true
    kuma_control_plane_master:
      hosts:
        kuma_cpm.example.com:
          ansible_host: 10.0.1.10
    kuma_control_plane_master_worker:
    kuma_control_plane:
      hosts:
        kuma_cp2.example.com:
          ansible_host: 10.0.1.11
        kuma_cp3.example.com:
          ansible_host: 10.0.1.12
    kuma_control_plane_worker:
    kuma_worker:
      hosts:
        kuma-w1.example.com:
          ansible_host: 10.0.2.11
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"
        kuma-w2.example.com:
          ansible_host: 10.0.2.12
          extra_args: "--labels=kaspersky.com/kuma-core=true,kaspersky.com/kuma-ingress=true,node.longhorn.io/create-default-disk=true"

The kuma_core, kuma_collector, kuma_correlator, kuma_storage sections of your k0s.inventory.yml inventory file must contain the same hosts that were used in the distributed.inventory.yml file when KUMA was upgraded from version 2.1.3 to version 3.0.3 and then to version 3.2, or when a new installation was performed. In the k0s.inventory.yml inventory file, set deploy_to_k8s: true, need_transfer: true, deploy_example_services: false.
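One way to catch a host that was dropped while preparing the migration inventory is to compare the host names in the two files. The sketch below is a heuristic under stated assumptions: the function names list_inventory_hosts and missing_hosts are hypothetical, and the script treats any line that consists only of a dotted name, optionally followed by a colon, as a host entry. It does not parse YAML and does not distinguish between inventory sections.

```shell
# Print the dotted host names that stand alone on a line of an inventory file.
list_inventory_hosts() {
    sed -n -E \
        's/^[[:space:]]*([A-Za-z0-9_-]+(\.[A-Za-z0-9_-]+)+):?[[:space:]]*$/\1/p' \
        "$1" | sort -u
}

# Print hosts that are present in the first inventory file
# but missing from the second one.
missing_hosts() {
    local old_list new_list
    old_list="$(mktemp)"
    new_list="$(mktemp)"
    list_inventory_hosts "$1" > "$old_list"
    list_inventory_hosts "$2" > "$new_list"
    # comm -23 keeps only the lines that are unique to the first sorted list.
    comm -23 "$old_list" "$new_list"
    rm -f "$old_list" "$new_list"
}

# Usage:
#   missing_hosts distributed.inventory.yml k0s.inventory.yml
```

An empty result means that every host from the distributed inventory also appears somewhere in k0s.inventory.yml; you still need to confirm by eye that each host is listed in the correct section.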

We recommend backing up the inventory file that you used to install the program. You can use it to add components to the system or remove KUMA.


Installing the program in a high availability configuration