Service monitoring

You can use the following functionality to monitor the status of all services except cold storage and agent:

- Viewing Victoria Metrics alerts

Users with the General administrator role can configure thresholds for KUMA services, and if a specified threshold is exceeded, the following changes take place:

- KUMA logs an audit event: VictoriaMetrics alert registered for service.

- KUMA sends a notification email message to the General administrator.

- Services are displayed in the Active services section with a yellow status. If you hover over the status icon, the error message will be displayed.

- View VictoriaMetrics metrics if the user has a role with metrics access rights.

The following examples show how to monitor service status.

- If the collector service has a yellow status in the Active services section and you see the Enrichment errors increasing message, you can:

- Go to Metrics → <service type> → <service name> → Enrichment → Errors section of KUMA for the service with the yellow status, find out which enrichment is causing errors, and view the chart to find out when the problem started and how it evolved.

- The likely cause of the enrichment errors may be DNS server unavailability or CyberTrace enrichment errors, therefore you can check your DNS or CyberTrace connection settings.

- If the collector service has a yellow status in the Active services section and you see the Output Event Loss increasing message, you can:

- Go to the Metrics → <service type> → <service name> → IO → Output Event Loss section of KUMA for the service with the yellow status and view the chart to find out when the problem started and how it evolved.

- The likely cause of the enrichment errors may be a buffer overflow or unavailability of destination, therefore you can check the availability and the connection of the destination or find out why the buffer capacity is exceeded.

Configuring service monitoring

To configure the services:

- In the KUMA web console, go to the Settings → Service monitoring section.

- Specify the values of monitoring parameters for the services.

Service monitoring does not apply to cold storage.

If you specify an invalid value that does not fit the range or format, the value is reset to the previously configured value.

- Click Save.

After saving the parameters, KUMA registers an audit event: Monitoring thresholds changed for the service.

KUMA monitors the status of services in accordance with the specified parameters.

In the Active services section, you can filter services by statuses or enter a word from the error text, for example, "QPS" or "buffer", in the search field and press ENTER. This results in a list of services with errors. Special characters ", },{, are not allowed in the search string and will produce irrelevant results.

Disabling service monitoring

To disable service monitoring:

- In the KUMA web console, go to the Settings → Service monitoring section.

- If you want to disable service monitoring only for collectors, in the Service monitoring. Thresholds setting window, under Collectors, select the Disable connector errors check box.

This disables only the analysis of the Connector errors metric for collectors.

- If you want to disable monitoring for all services, in the Service monitoring. Thresholds setting window, select the Disable check box.

KUMA service monitoring is disabled, and services do not get the yellow status.

Viewing KUMA metrics

To monitor the performance of its components, the event stream, and the correlation context, KUMA collects and stores a large number of parameters. The VictoriaMetrics time series database is used to collect, store and analyze the parameters. The collected metrics are visualized using Grafana. Dashboards that visualize key performance parameters of various KUMA components can be found in the KUMA → Metrics section.

The KUMA Core service configures VictoriaMetrics and Grafana automatically, no user action is required.

The collected metrics are visualized using the Grafana solution. The RPM package of the 'kuma-core' service generates the Grafana configuration and creates a separate dashboard for visualizing the metrics of each service. Graphs in the Metrics section appear with a delay of approximately 1.5 minutes.

For full information about the metrics, you can refer to the Metrics section of the KUMA web interface. Selecting this section opens the Grafana portal that is deployed as part of Core installation and is updated automatically. If the Metrics section shows "core:<port number>", this means that KUMA is deployed in a high availability configuration and the metrics were received from the host on which the KUMA Core was installed. In other configurations, the name of the host from which KUMA receives metrics is displayed.

Collector metrics

Metric name |

Description |

|---|---|

IO—metrics related to the service input and output. |

|

Processing EPS |

The number of events processed per second. |

Output EPS |

The number of events per second sent to the destination. |

Output Latency |

The time in milliseconds that passed while sending an event packet and receiving a response from the destination. The median value is displayed. |

Output Errors |

The number of errors occurring per second while event packets were sent to the destination. Network errors and errors writing to the disk buffer of the destination are displayed separately. |

Output Event Loss |

The number of events lost per second. Events can be lost due to network errors or errors writing the disk buffer of the destination. Events are also lost if the destination responds with an error code, for example, in case of an invalid request. |

Output Disk Buffer SIze |

The size of the disk buffer of the collector associated with the destination, in bytes. If a zero value is displayed, no event packets have been placed in the collector's disk buffer and the service is operating correctly. |

Write Network BPS |

The number of bytes received into the network per second. |

Connector errors |

The number of errors in the connector logs. |

Normalization—metrics related to the normalizers. |

|

Raw & Normalized event size |

The size of the raw event and size of the normalized event. The median value is displayed. |

Errors |

The number of normalization errors per second. |

Filtration—metrics related to filters. |

|

EPS |

The number of events per second matching the filter conditions and sent for processing. The collector only processes events that match the filtering criteria if the user has added the filter to the configuration of the collector service. |

Aggregation—metrics related to the aggregation rules. |

|

EPS |

The number of events received and generated by the aggregation rule per second. This metric helps determine the effectiveness of aggregation rules. |

Buckets |

The number of buckets in the aggregation rule. |

Enrichment—metrics related to enrichment rules. |

|

Cache RPS |

The number of requests per second to the local cache. |

Source RPS |

The number of requests per second to an enrichment source, such as a dictionary. |

Source Latency |

Time in milliseconds passed while sending a request to the enrichment source and receiving a response from it. The median value is displayed. |

Queue |

The size of the enrichment request queue. This metric helps to find bottleneck enrichment rules. |

Errors |

The number of errors per second while sending requests to the enrichment source. |

Correlator metrics

Metric name |

Description |

|---|---|

IO—metrics related to the service input and output. |

|

Processing EPS |

The number of events processed per second. |

Output EPS |

The number of events per second sent to the destination. |

Output Latency |

The time in milliseconds that passed while sending an event packet and receiving a response from the destination. The median value is displayed. |

Output Errors |

The number of errors occurring per second while event packets were sent to the destination. Network errors and errors writing to the disk buffer of the destination are displayed separately. |

Output Event Loss |

The number of events lost per second. Events can be lost due to network errors or errors writing the disk buffer of the destination. Events are also lost if the destination responds with an error code, for example, in case of an invalid request. |

Output Disk Buffer SIze |

The size of the disk buffer of the collector associated with the destination, in bytes. If a zero value is displayed, no event packets have been placed in the collector's disk buffer and the service is operating correctly. |

Correlation—metrics related to correlation rules. |

|

EPS |

The number of correlation events per second generated by the correlation rule. |

Buckets |

The number of buckers in a correlation rule of the standard type. |

Rate Limiter Hits |

The number of times the correlation rule exceeded the rate limit per second. |

Active Lists OPS |

The number of operations requests per second sent to the active list, and the operations themselves. |

Active Lists Records |

The number of records in the active list. |

Active Lists On-Disk Size |

The size of the active list on the disk, in bytes. |

Enrichment—metrics related to enrichment rules. |

|

Cache RPS |

The number of requests per second to the local cache. |

Source RPS |

The number of requests per second to an enrichment source, such as a dictionary. |

Source Latency |

Time in milliseconds passed while sending a request to the enrichment source and receiving a response from it. The median value is displayed. |

Queue |

The size of the enrichment request queue. This metric helps to find bottleneck enrichment rules. |

Errors |

The number of errors per second while sending requests to the enrichment source. |

Response—metrics associated with response rules. |

|

RPS |

The number of times a response rule was activated per second. |

Storage metrics

Metric name |

Description |

|---|---|

Storage / IO — metrics related to the status of ClickHouse cluster nodes. |

|

Output EPS |

Number of events per second that were inserted into the ClickHouse node or into the disk buffer of the node. |

Output Latency |

Latency of inserting a batch either into the ClickHouse node or into the disk buffer of the node. |

Output Errors |

Number of errors per second when inserting a batch into the ClickHouse node or into the disk buffer of the node. |

Output Disk Buffer SIze |

Size of the disk buffer of the ClickHouse node. |

Output Event Loss |

Number of events that were permanently lost on each ClickHouse node. |

Batch size |

The size of the batch of events to be inserted into the ClickHouse node. |

Insert interval 5min Q1 |

Duration of the first quartile of the event insertion interval for the last 5 minutes. |

ClickHouse / General—metrics related to the general settings of the ClickHouse cluster. |

|

Active Queries |

The number of active queries sent to the ClickHouse cluster. This metric is displayed for each ClickHouse instance. |

QPS |

The number of queries per second sent to the ClickHouse cluster. |

Failed QPS |

The number of failed queries per second sent to the ClickHouse cluster. |

Allocated memory |

The amount of RAM, in gigabytes, allocated to the ClickHouse process. |

ClickHouse / Insert—metrics related to inserting events into a ClickHouse instance. |

|

Insert EPS |

The number of events per second inserted into the ClickHouse instance. |

Insert QPS |

The number of ClickHouse instance insert queries per second sent to the ClickHouse cluster. If the Insert QPS metric demonstrates a growing queue of queries and the metric is greater than 1, we recommend also looking at the Batch size metric value to adjust the storage buffering settings in the settings of the storage service configuration. Example: The Insert QPS metric is greater than 1 and equals 8. The Batch size metric is 1.2 GB (in bytes). In this case, you can find the buffer size by multiplying Insert QPS by Batch size: 8 * 1.2 = 9.6 GB. Round the resulting value of 9.6 and specify it in bytes (for example, 10000000000 bytes) as the Buffer size setting on the Advanced settings tab of the storage service configuration. Also specify a Buffer flush interval of 2 seconds. |

Failed Insert QPS |

The number of failed ClickHouse instance insert queries per second sent to the ClickHouse cluster. |

Delayed Insert QPS |

The number of delayed ClickHouse instance insert queries per second sent to the ClickHouse cluster. Queries were delayed by the ClickHouse node due to exceeding the soft limit on active merges. |

Rejected Insert QPS |

The number of rejected ClickHouse instance insert queries per second sent to the ClickHouse cluster. Queries were rejected by the ClickHouse node due to exceeding the hard limit on active merges. |

Active Merges |

The number of active merges. |

Distribution Queue |

The number of temporary files with events that could not be inserted into the ClickHouse instance because it was unavailable. These events cannot be found using search. |

ClickHouse / Select—metrics related to event selections in the ClickHouse instance. |

|

Select QPS |

The number of ClickHouse instance event select queries per second sent to the ClickHouse cluster. |

Failed Select QPS |

The number of failed ClickHouse instance event select queries per second sent to the ClickHouse cluster. |

ClickHouse / Replication—metrics related to replicas of ClickHouse nodes. |

|

Active Zookeeper Connections |

The number of active connections to the Zookeeper cluster nodes. In normal operation, this number should be equal to the number of nodes in the Zookeeper cluster. |

Read-only Replicas |

The number of read-only replicas of ClickHouse nodes. In normal operation, no such replicas of ClickHouse nodes must exist. |

Active Replication Fetches |

The number of active processes of downloading data from the ClickHouse node during data replication. |

Active Replication Sends |

The number of active processes of sending data to the ClickHouse node during data replication. |

Active Replication Consistency Checks |

The number of active data consistency checks on replicas of ClickHouse nodes during data replication. |

ClickHouse / Networking—metrics related to the network of the ClickHouse cluster. |

|

Active HTTP Connections |

The number of active connections to the HTTP server of the ClickHouse cluster. |

Active TCP Connections |

The number of active connections to the TCP server of the ClickHouse cluster. |

Active Interserver Connections |

The number of active service connections between ClickHouse nodes. |

KUMA Core metrics

Metric name |

Description |

|---|---|

Raft—metrics related to reading and updating the state of the KUMA Core. |

|

Lookup RPS |

Number of lookup procedure requests per second sent to the KUMA Core, and the procedures themselves. |

Lookup Latency |

Time in milliseconds spent running the lookup procedures, and the procedures themselves. The time is displayed for the 99th percentile of lookup procedures. One percent of lookup procedures may take longer to run. |

Propose RPS |

Number of Raft (SQLite) propose procedure requests per second sent to the KUMA, and the procedures themselves. |

Propose Latency |

Time in milliseconds spent running the Raft (SQLite) propose procedures, and the procedures themselves. The time is displayed for the 99th percentile of propose procedures. One percent of propose procedures may take longer to run. |

Data mining—metrics related to data collection and analysis |

|

Executing Rules |

Number of running schedulers for executing data collection and analysis requests. |

Queued Rules |

Number of schedulers for executing queued data collection and analysis requests. |

Execution Errors |

Number of errors that occurred when running the data collection and analysis scheduler. |

Execution Latency |

How long takes a scheduler to execute requests. |

Tasks—metrics related to monitoring the running of tasks on the KUMA Core |

|

Active tasks |

Number of tasks run per unit time. |

Task Execution latency |

Duration of running tasks in seconds. |

Errors |

Number of errors when running tasks. |

API—metrics related to API requests. |

|

RPS |

Number of API requests made to the KUMA Core per second. |

Latency |

Time in milliseconds spent processing a single API request to the KUMA Core. The median value is displayed. |

Errors |

Number of errors per second while sending API requests to the KUMA Core. |

Notification Feed—metrics related to user activity. |

|

Subscriptions |

Number of clients connected to the KUMA Core via SSE to receive server messages in real time. This number is normally equal to the number of clients that are using the KUMA web interface. |

Errors |

The number of errors per second while sending notifications to users. |

Schedulers—metrics related to KUMA Core tasks. |

|

Active |

The number of repeating active system tasks. The tasks created by the user are ignored. |

Latency |

The time in milliseconds spent running the task. The median value is displayed. |

Errors |

The number of errors that occurred per second while performing tasks. |

Alerts Queue |

Number of alerts in the queue for insertion into the database. |

KUMA agent metrics

Metric name |

Description |

|---|---|

IO—metrics related to the service input and output. |

|

Processing EPS |

The number of events processed per second. |

Output EPS |

The number of events per second sent to the destination. |

Output Latency |

The time in milliseconds that passed while sending an event packet and receiving a response from the destination. The median value is displayed. |

Output Errors |

The number of errors occurring per second while event packets were sent to the destination. Network errors and errors writing to the disk buffer of the destination are displayed separately. |

Output Event Loss |

The number of events lost per second. Events can be lost due to network errors or errors writing the disk buffer of the destination. Events are also lost if the destination responds with an error code, for example, in case of an invalid request. |

Output Disk Buffer SIze |

The size of the disk buffer of the collector associated with the destination, in bytes. If a zero value is displayed, no event packets have been placed in the collector's disk buffer and the service is operating correctly. |

Write Network BPS |

The number of bytes received into the network per second. |

Event routers metrics

Metric name |

Description |

|---|---|

IO—metrics related to the service input and output. |

|

Processing EPS |

The number of events processed per second. |

Output EPS |

The number of events per second sent to the destination. |

Output Latency |

The time in milliseconds that passed while sending an event packet and receiving a response from the destination. The median value is displayed. |

Output Errors |

The number of errors occurring per second while event packets were sent to the destination. Network errors and errors writing to the disk buffer of the destination are displayed separately. |

Output Event Loss |

The number of events lost per second. Events can be lost due to network errors or errors writing the disk buffer of the destination. Events are also lost if the destination responds with an error code, for example, in case of an invalid request. |

Output Disk Buffer SIze |

The size of the disk buffer of the collector associated with the destination, in bytes. If a zero value is displayed, no event packets have been placed in the collector's disk buffer and the service is operating correctly. |

Write Network BPS |

The number of bytes received into the network per second. |

Connector Errors |

The number of errors in the connector log. |

General metrics common for all services

Metric name |

Description |

|---|---|

Process—General process metrics. |

|

Memory |

RAM usage (RSS) in megabytes. |

DISK BPS |

The number of bytes read from or written to the disk per second. |

Network BPS |

The number of bytes received/transmitted over the network per second. |

Network Packet Loss |

The number of network packets lost per second. |

GC Latency |

The time, in milliseconds, spent executing a GO garbage collection cycle The median value is displayed. |

Goroutines |

The number of active goroutines. This number is different from the operating system's thread count. |

OS—metrics related to the operating system. |

|

Load |

Average load. |

CPU |

CPU load as a percentage. |

Memory |

RAM usage (RSS) as a percentage. |

Disk |

Disk space usage as a percentage. |

Metrics storage period

KUMA operation data is saved for 3 months by default. This storage period can be changed.

To change the storage period for KUMA metrics:

- Log in to the OS of the server where the KUMA Core is installed.

- In the file /etc/systemd/system/multi-user.target.wants/kuma-victoria-metrics.service, in the ExecStart parameter, edit the

--retentionPeriod=<metrics storage period, in months>flag by inserting the necessary period. For example,--retentionPeriod=4means that the metrics will be stored for 4 months. - Restart KUMA by running the following commands in sequence:

systemctl daemon-reloadsystemctl restart kuma-victoria-metrics

The storage period for metrics has been changed.

Page topKUMA metric alert triggering conditions

If the value of a KUMA metric for a service exceeds the threshold of the corresponding parameter configured in the Service monitoring section of KUMA, VictoriaMetrics sends an alert, and an error message is displayed in the status of that service.

Alerts are received from VictoriaMetrics at the following intervals:

- VictoriaMetrics collects information from KUMA services every 15 seconds.

- VictoriaMetrics updates alerts for KUMA services every minute.

- The KUMA Core service collects information from VictoriaMetrics every 15 seconds.

Thus, the total delay before a service status is updated is less than 2–3 minutes.

If you disabled the receipt of alerts from VictoriaMetrics, some KUMA services may still be displayed with a yellow status. This can happen in the following cases:

- For a storage service:

- If an alert was received in response to an API request in the /status parameter from ClickHouse

- If cold storage of the Storage service is not being monitored

- For a collector service: If an alert was received in response to an API request in the /status parameter.

- For a correlator service: If a response rule exists that requires the Advanced Responses module, but this module is not covered by the current license, or the license that covers this module has expired.

The table below provides information on which error messages may appear in the service status when an alert is received from VictoriaMetrics, and which metrics and parameters they are based on and in what way. For details on KUMA metrics that can trigger VictoriaMetrics alerts, see Viewing KUMA metrics.

For example, if the Active services table for a service displays a yellow status and the High distribution queue error message (the "Error message" column in the table below), you can view the information in the Enrichment widget, the Distribution Queue metric (the "KUMA metrics" column in the table below).

Description of error messages for KUMA services

Error message |

Configurable alert parameters |

KUMA metric |

Description |

|---|---|---|---|

|

QPS interval/window, minutes QPS Threshold |

Clickhouse / General → Failed QPS |

An error message is displayed if the Failed QPS metric exceeds the specified QPS Threshold value for the duration specified by the QPS interval/window, minutes parameter. For example, if 25 out of 100 requests from VictoriaMetrics to the service were unsuccessful, and the QPS Threshold is 0.2, the alert is calculated as follows: (25 / 100) * 100 > 0.2 * 100 25% > 20% Because the percentage of unsuccessful requests is greater than the specified threshold, an error message is displayed for the service. |

|

Failed insert QPS calculation interval/window, minutes Insert QPS threshold |

Clickhouse / Insert → Failed Insert QPS |

An error message is displayed if the Failed Insert QPS metric exceeds the specified QPS Insert Threshold value for the duration specified by the Failed Insert QPS calculation interval/window, minutes parameter. For example, if 25 out of 100 requests from VictoriaMetrics to the service were unsuccessful, and the QPS Insert Threshold is 0.2, the alert is calculated as follows: (25 / 100) * 100 > 0.2 * 100 25% > 20% Because the percentage of unsuccessful requests is greater than the specified threshold, an error message is displayed for the service. |

|

Distribution queue threshold Distribution queue calculation interval/window, minutes |

Clickhouse / Insert → Distribution Queue |

An error message is displayed if the Distribution Queue metric exceeds the specified Distribution queue threshold value for the duration specified by the Distribution queue calculation interval/window, minutes parameter. |

|

Free space on disk threshold |

OS → Disk |

An error message is displayed if the amount of free disk space (as a percentage) indicated by the Disk metric value is less than the value specified in the Free disk space threshold parameter. For example, an error message is displayed if the partition on which KUMA is installed takes up all the disk space. |

|

Free space on partition threshold |

OS → Disk |

An error message is displayed if the amount of free space (as a percentage) on the disk partition that KUMA is using is less than the value specified in the Free space on partition threshold parameter. For example, an error message is displayed in the following cases:

|

|

Output Event Loss |

IO → Output Event Loss |

An error message is displayed if the Output Event Loss metric has been increasing for one minute. You can enable or disable the display of this error message using the Output Event Loss parameter. |

|

Disk buffer increase interval/window, minutes |

IO → Output Disk Buffer SIze |

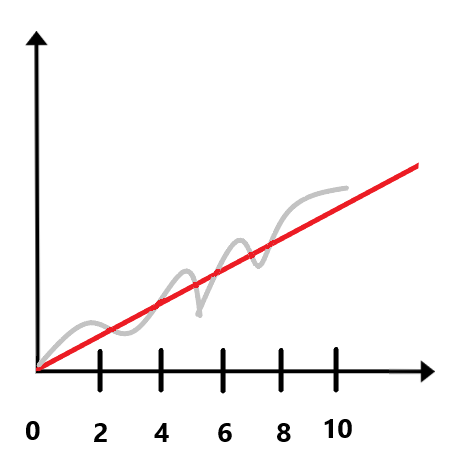

An error message is displayed if the Output Disk Buffer Size metric monotonically increases for 10 minutes with the sampling interval specified by the Disk buffer increase interval/window, minutes parameter. For example, if the Disk buffer increase interval/window, minutes is set to 2 minutes, an error message is displayed if the disk buffer size has monotonically increased for 10 minutes with a sampling interval of 2 minutes (see the figure below).

|

|

Growing enrichment queue interval/window, minutes |

Enrichment → Queue |

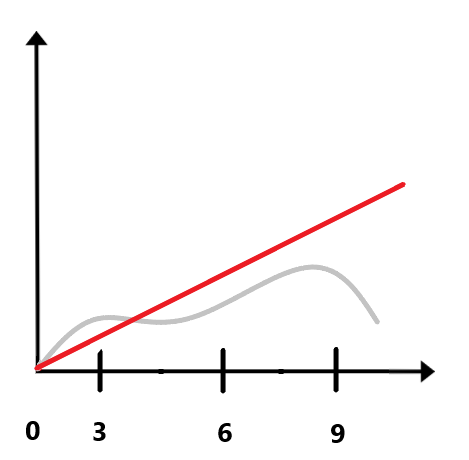

An error message is displayed if the Queue metric monotonically increases for 10 minutes with the sampling interval specified by the Growing enrichment queue interval/window, minutes parameter. For example, if the value of the Growing enrichment queue interval/window, minutes is 3, an error message is displayed if the enrichment queue has monotonically increased every 10 minutes with a sampling interval of 3 minutes. In the case shown in the figure below, the error message is not displayed because at the ninth minute the value of the metric decreased instead of increasing monotonically.

|

|

Enrichment errors |

Enrichment → Errors |

An error message is displayed if the Errors metric has been increasing for one minute. You can enable or disable the display of this error message using the Enrichment errors parameter. |

|

Disable connector errors |

IO → Connector Errors |

An error message is displayed if the Connector Errors metric has been increasing between consecutive polls of the metric by VictoriaMetrics for one minute. You can enable or disable the display of this error message using the Disable connector errors parameter. |