Creating a storage

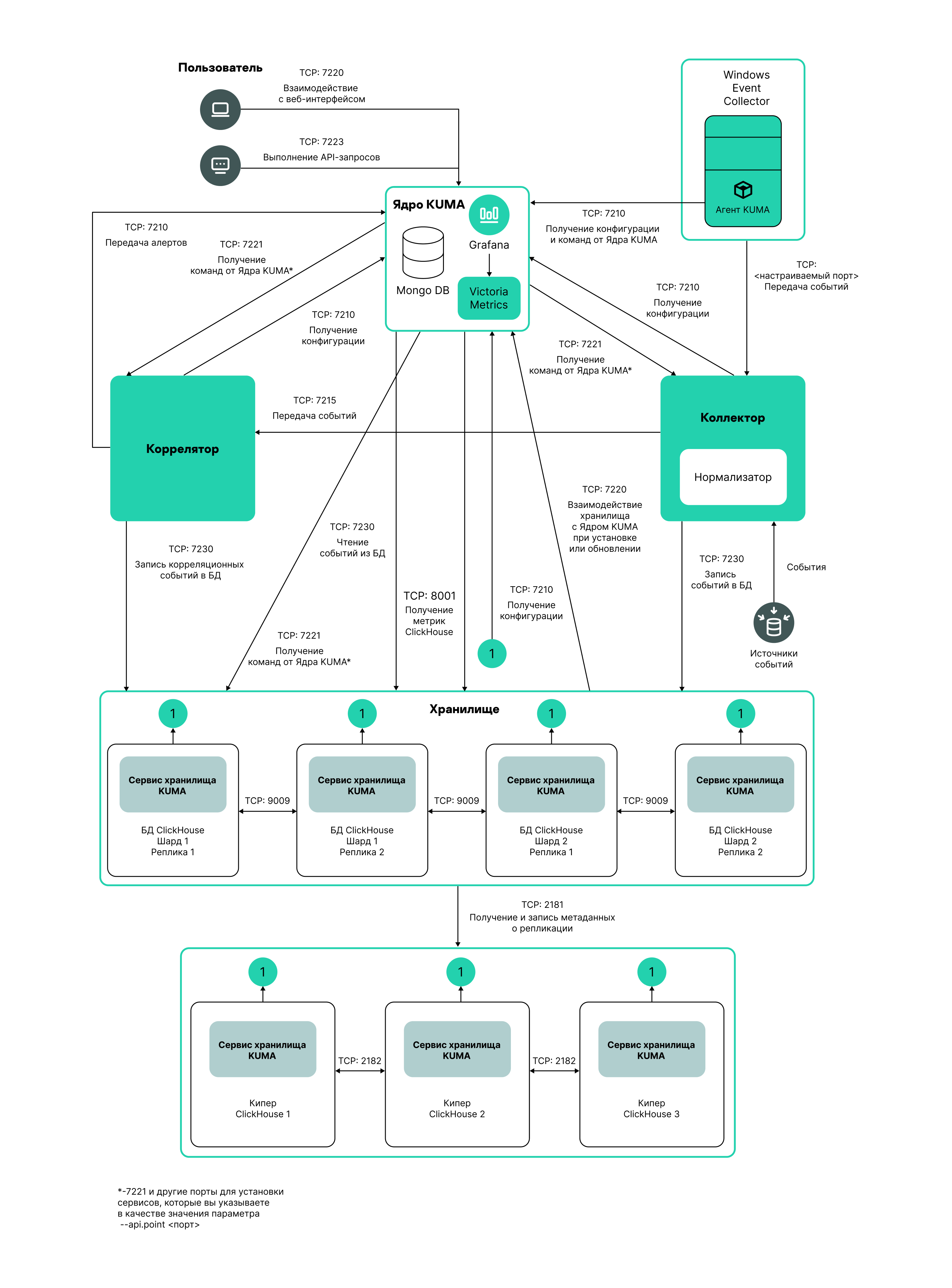

A storage consists of two parts: one part is created inside the KUMA Console, and the other part is installed on network infrastructure servers intended for storing events. The server part of a KUMA storage consists of ClickHouse nodes collected into a cluster. ClickHouse clusters can be supplemented with cold storage disks.

For each ClickHouse cluster, a separate storage must be installed.

Prior to storage creation, carefully plan the cluster structure and deploy the necessary network infrastructure. When choosing a ClickHouse cluster configuration, consider the specific event storage requirements of your organization.

It is recommended to use ext4 as the file system.

A storage is created in several steps:

- Creating a set of resources for a storage in the KUMA Console

- Creating a storage service in the KUMA Console

- Installing storage nodes in the network infrastructure

When creating storage cluster nodes, verify the network connectivity of the system and open the ports used by the components.

If the storage settings are changed, the service must be restarted.

ClickHouse cluster structure

A ClickHouse cluster is a logical group of devices that possess all accumulated normalized KUMA events. It consists of one or more logical shards.

A shard is a logical group of devices that possess a specific portion of all normalized events accumulated in the cluster. It consists of one or more replicas. Increasing the number of shards lets you do the following:

- Accumulate more events by increasing the total number of servers and disk space.

- Absorb a larger stream of events by distributing the load associated with an influx of new events.

- Reduce the time taken to search for events by distributing search zones among multiple devices.

A replica is a device that is a member of a logical shard and possesses a single copy of that shard's data. If multiple replicas exist, it means multiple copies exist (the data is replicated). Increasing the number of replicas lets you do the following:

- Improve high availability.

- Distribute the total load related to data searches among multiple machines (although it's best to increase the number of shards for this purpose).

A keeper is a device that participates in coordination of data replication at the whole cluster level. At least one device per cluster must have this role. The recommended number of the devices with this role is 3. The number of devices involved in coordinating replication must be an odd number. The keeper and replica roles can be combined in one machine.
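The recommendation of an odd number of keepers can be illustrated with a little arithmetic. The sketch below assumes that replication coordination needs a strict majority of keepers to stay reachable, which is typical for quorum-based coordination but is not stated in this section. The function name is hypothetical.

```python
def keeper_fault_tolerance(keeper_count: int) -> int:
    """Return how many keepers may be lost while a strict majority remains.

    Assumption: coordination requires more than half of the keepers.
    """
    if keeper_count < 1:
        raise ValueError("a cluster needs at least one keeper")
    majority = keeper_count // 2 + 1  # smallest group that is more than half
    return keeper_count - majority


if __name__ == "__main__":
    for count in range(1, 6):
        tolerated = keeper_fault_tolerance(count)
        print(f"{count} keeper(s): tolerates {tolerated} failure(s)")
```

Under this assumption, going from three keepers to four does not increase the number of failures the cluster can survive, which is one way to read the odd-number requirement.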

ClickHouse cluster node settings

Prior to storage creation, carefully plan the cluster structure and deploy the necessary network infrastructure. When choosing a ClickHouse cluster configuration, consider the specific event storage requirements of your organization.

When creating ClickHouse cluster nodes, verify the network connectivity of the system and open the ports used by the components.

For each node of the ClickHouse cluster, you need to specify the following settings:

- Fully qualified domain name (FQDN): a unique address to access the node. Specify the entire FQDN, for example, kuma-storage.example.com.

- Shard, replica, and keeper IDs: the combination of these settings determines the position of the node in the ClickHouse cluster structure and the node role.

Node roles

The roles of the nodes depend on the specified settings:

- shard, replica, keeper—the node participates in the accumulation and search of normalized KUMA events and helps coordinate data replication at the cluster-wide level.

- shard, replica—the node participates in the accumulation and search of normalized KUMA events.

- keeper—the node does not accumulate normalized events, but helps coordinate data replication at the cluster-wide level. Dedicated keepers must be specified at the beginning of the list in the Resources → Storages → <Storage> → Basic settings → ClickHouse cluster nodes section.

ID requirements:

- If multiple shards are created in the same cluster, the shard IDs must be unique within this cluster.

- If multiple replicas are created in the same shard, the replica IDs must be unique within this shard.

- The keeper IDs must be unique within the cluster.

Example of ClickHouse cluster node IDs:

- shard 1, replica 1, keeper 1;

- shard 1, replica 2;

- shard 2, replica 1;

- shard 2, replica 2, keeper 3;

- shard 2, replica 3;

- keeper 2.
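The ID requirements above can be checked before the values are entered in the KUMA Console. The following is a minimal sketch, not a KUMA tool: the `Node` type, the `validate_nodes` function, and the use of `None` for "role not assigned" are all assumptions made for illustration. It also applies the rule from the cluster structure description that the number of keepers must be odd.

```python
from collections import Counter
from typing import List, NamedTuple, Optional


class Node(NamedTuple):
    """One cluster node; None means the node does not have that role."""
    shard: Optional[int]
    replica: Optional[int]
    keeper: Optional[int] = None


def validate_nodes(nodes: List[Node]) -> List[str]:
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    # Replica IDs must be unique within a shard. Two shards that share an ID
    # look like one shard in this flat list, so they surface here as well.
    pairs = Counter((n.shard, n.replica) for n in nodes if n.shard is not None)
    for (shard, replica), count in pairs.items():
        if count > 1:
            problems.append(f"replica {replica} is repeated in shard {shard}")
    # Keeper IDs must be unique within the cluster.
    keepers = [n.keeper for n in nodes if n.keeper is not None]
    for keeper, count in Counter(keepers).items():
        if count > 1:
            problems.append(f"keeper {keeper} is repeated in the cluster")
    # At least one keeper is required, and the total must be odd.
    if not keepers:
        problems.append("at least one keeper is required")
    elif len(keepers) % 2 == 0:
        problems.append("the number of keepers must be odd")
    return problems


# The example node IDs listed above.
EXAMPLE_NODES = [
    Node(1, 1, 1),
    Node(1, 2),
    Node(2, 1),
    Node(2, 2, 3),
    Node(2, 3),
    Node(None, None, 2),  # dedicated keeper
]

if __name__ == "__main__":
    print(validate_nodes(EXAMPLE_NODES))
```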

Cold storage of events

In KUMA, you can configure the migration of legacy data from a ClickHouse cluster to cold storage. Cold storage can be implemented using local disks mounted in the operating system or the Hadoop Distributed File System (HDFS). Cold storage is enabled when at least one cold storage disk is specified. If you use several storages, on each node with data, mount a cold storage disk or HDFS disk in the directory that you specified in the storage configuration settings.

If a cold storage disk is not configured and the server runs out of disk space in hot storage, the storage service is stopped. If both hot storage and cold storage are configured, and space runs out on the cold storage disk, the KUMA storage service is also stopped. We recommend avoiding such situations by adding custom event storage conditions in hot storage.

Cold storage disks can be added or removed. If you have added multiple cold storage disks, data is written to them in a round-robin manner. If data to be written to disk would take up more space than is available on that disk, this data and all subsequent data is written round-robin to the next cold storage disks. If you added only two cold storage disks, the data is written to the drive that has free space left.

After changing the cold storage settings, the storage service must be restarted. If the service does not start, the reason is specified in the storage log.

If the cold storage disk specified in the storage settings has become unavailable (for example, out of order), this may lead to errors in the operation of the storage service. In this case, recreate a disk with the same path (for local disks) or the same address (for HDFS disks) and then delete it from the storage settings.

Rules for moving the data to the cold storage disks

You can configure the storage conditions for events in hot storage of the ClickHouse cluster by setting a limit based on retention time or on maximum storage size. When cold storage is used, every 15 minutes and after each Core restart, KUMA checks if the specified storage conditions are satisfied:

- KUMA gets the partitions for the storage being checked and groups the partitions by cold storage disks and spaces.

- For each space, KUMA checks whether the specified storage condition is satisfied.

- If the condition is satisfied (for example, if the space contains events that exceed their retention time, or if the size of the storage has reached or exceeded the limit specified in the condition), KUMA transfers all partitions with the oldest date to cold storage disks or deletes these partitions if no cold storage disk is configured or if it is configured incorrectly. This action is repeated while the configured storage condition remains satisfied in the space; for example, if after deleting partitions for a date, the storage size still exceeds the maximum size specified in the condition.

KUMA generates audit events when data transfer starts and ends, or when data is removed.

- If retention time is configured in KUMA, whenever partitions are transferred to cold storage disks, it is checked whether the configured conditions are satisfied on the disk. If events are found on the disk that have been stored for longer than the Event retention time, which is counted from the moment the events were received in KUMA, the solution deletes these events or all partitions for the oldest date.

KUMA generates audit events when it deletes data.

If the ClickHouse cluster disks are 95% full, the biggest partitions are automatically moved to the cold storage disks. This can happen more often than once per hour.

During data transfer, the storage service remains operational, and its status stays green in the Resources → Active services section of the KUMA web console. When you hover over the status icon, a message is displayed about the data transfer. When a cold storage disk is removed, the storage service has the yellow status.
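The repeated "oldest date first" check described in the rules above can be modeled in a few lines. This is only an illustrative simulation of a size-based condition for a single space, not KUMA's implementation. The function name, the dictionary layout (one total size per date), and the action labels are assumptions.

```python
from datetime import date
from typing import Dict, List, Tuple


def enforce_size_limit(
    partitions: Dict[date, int],
    max_size: int,
    cold_disk_configured: bool,
) -> List[Tuple[date, str]]:
    """Simulate the size check for one space.

    partitions maps a partition date to the total size stored for that date.
    While the total has reached or exceeded max_size, the oldest date is
    removed from hot storage: moved if a cold disk exists, deleted otherwise.
    """
    hot = dict(partitions)  # work on a copy; the caller's data is untouched
    action = "move to cold storage" if cold_disk_configured else "delete"
    actions = []
    while hot and sum(hot.values()) >= max_size:
        oldest = min(hot)
        del hot[oldest]
        actions.append((oldest, action))
    return actions


if __name__ == "__main__":
    sizes = {date(2024, 1, 1): 40, date(2024, 1, 2): 30, date(2024, 1, 3): 20}
    for day, what in enforce_size_limit(sizes, 60, cold_disk_configured=True):
        print(day.isoformat(), what)
```

In this example the total of 90 reaches the limit of 60, so the data for the oldest date is moved; the remaining 50 is below the limit, and the loop stops.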

Special considerations for storing and accessing events

- When using HDFS disks for cold storage, protect your data in one of the following ways:

- Configure a separate physical interface in the VLAN, where only HDFS disks and the ClickHouse cluster are located.

- Configure network segmentation and traffic filtering rules that exclude direct access to the HDFS disk or interception of traffic to the disk from ClickHouse.

- Events located in the ClickHouse cluster and on the cold storage disks are equally available in the KUMA web console. For example, when you search for events or view events related to alerts.

- You can disable the storage of events or audit events on cold storage disks. To do so, specify the following in storage settings:

- If you do not want to store events on cold storage disks, do one of the following:

- If in the Storage condition options field, you have a gigabyte or percentage based storage condition selected, in the Event retention time field, specify 0.

- If in the Storage condition options field, you have a storage condition in days, in the Event retention time field, specify the same number of days as in the Storage condition options field.

- If you do not want to store audit events on cold storage disks, in the Cold storage period for audit events field, specify 0 (days).

Special considerations for using HDFS disks

- Before connecting HDFS disks, create directories for each node of the ClickHouse cluster on them in the following format: <HDFS disk host>/<shard ID>/<replica ID>. For example, if a cluster consists of two nodes containing two replicas of the same shard, the following directories must be created:

- hdfs://hdfs-example-1:9000/clickhouse/1/1/

- hdfs://hdfs-example-1:9000/clickhouse/1/2/

Events from the ClickHouse cluster nodes are migrated to the directories with names containing the IDs of their shard and replica. If you change these node settings without creating a corresponding directory on the HDFS disk, events may be lost during migration.

- HDFS disks added to storage operate in the JBOD mode. This means that if one of the disks fails, access to the storage will be lost. When using HDFS, take high availability into account and configure RAID, as well as storage of data from different replicas on different devices.

- The speed of event recording to HDFS is usually lower than the speed of event recording to local disks. The speed of accessing events in HDFS, as a rule, is significantly lower than the speed of accessing events on local disks. When using local disks and HDFS disks at the same time, the data is written to them in turn.

- HDFS is used only as distributed file data storage of ClickHouse. Compression mechanisms of ClickHouse, not HDFS, are used to compress data.

- The ClickHouse server must have write access to the corresponding HDFS storage.
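The directory naming rule for HDFS disks (<HDFS disk host>/<shard ID>/<replica ID>) is easy to get wrong by hand on larger clusters. The sketch below only computes the list of directories that would need to exist; it does not talk to HDFS. The function name and the (shard ID, replica ID) tuple input are assumptions for illustration.

```python
from typing import Iterable, List, Tuple


def hdfs_directories(hdfs_host: str, nodes: Iterable[Tuple[int, int]]) -> List[str]:
    """Build one directory path per (shard ID, replica ID) pair.

    hdfs_host is the value used for the disk, for example
    hdfs://hdfs-example-1:9000/clickhouse/ (a trailing slash is optional).
    """
    base = hdfs_host.rstrip("/")
    # sorted(set(...)) removes duplicates and orders by shard, then replica
    return [f"{base}/{shard}/{replica}/" for shard, replica in sorted(set(nodes))]


if __name__ == "__main__":
    # Two replicas of the same shard, as in the example above.
    for path in hdfs_directories("hdfs://hdfs-example-1:9000/clickhouse/", [(1, 1), (1, 2)]):
        print(path)
```

The resulting paths can then be created with your usual HDFS tooling before the disks are connected.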

Removing cold storage disks

Before physically disconnecting cold storage disks, remove these disks from the storage settings.

To remove a disk from the storage settings:

- In the KUMA Console, under Resources → Storages, select the relevant storage.

This opens the storage.

- In the window, in the Disks for cold storage section, in the required disk's group of settings, click Delete disk.

Data from the removed disk is automatically migrated to other cold storage disks or, if there are no such disks, to the ClickHouse cluster. While data is being migrated, the status icon of the storage turns yellow and an hourglass icon is displayed. Audit events are generated when data transfer starts and ends.

- After event migration is complete, the disk is automatically removed from the storage settings. It can now be safely disconnected.

Removed disks can still contain events. If you want to delete them, you can manually delete the data partitions using the DROP PARTITION command.

If the cold storage disk specified in the storage settings has become unavailable (for example, out of order), this may lead to errors in the operation of the storage service. In this case, create a disk with the same path (for local disks) or the same address (for HDFS disks) and then delete it from the storage settings.

Detaching, archiving, and attaching partitions

If you want to optimize disk space and speed up queries in KUMA, you can detach data partitions in ClickHouse, archive partitions, or move partitions to a drive. If necessary, you can later reattach the partitions you need and perform data processing.

Detaching partitions

To detach partitions:

- Determine the shard whose replicas you want to detach the partition from. The partition must be detached on all replicas of that shard.

- Get the partition ID using the following command:

sudo /opt/kaspersky/kuma/clickhouse/bin/client.sh -d kuma --multiline --query "SELECT partition, name FROM system.parts;" | grep 20231130

In this example, the command returns the partition ID for November 30, 2023.

- On each replica of the shard, detach the partition using the following command and specifying the partition ID:

sudo /opt/kaspersky/kuma/clickhouse/bin/client.sh -d kuma --multiline --query "ALTER TABLE events_local_v2 DETACH PARTITION ID '<partition ID>'"

As a result, the partition is detached on all replicas of the shard. Now you can move the data directory to a drive or archive the partition.

Archiving partitions

To archive detached partitions:

- Find the detached partition in the disk subsystem of the server:

sudo find /opt/kaspersky/kuma/clickhouse/data/ -name <ID of the detached partition>\*

- Change to the 'detached' directory that contains the detached partition, and while in the 'detached' directory, perform the archival. Because cd is a shell builtin, it cannot be run through sudo; if your user cannot enter the directory, run both commands from a root shell instead.

cd <path to the 'detached' directory containing the detached partition>

sudo zip -9 -r detached.zip *

For example:

cd /opt/kaspersky/kuma/clickhouse/data/store/d5b/d5bdd8d8-e1eb-4968-95bd-d8d8e1eb3968/detached/

sudo zip -9 -r detached.zip *

The partition is archived.

Attaching partitions

To attach archived partitions to KUMA:

- Increase the Retention period value.

KUMA deletes data based on the date specified in the Timestamp field, which records the time when the event is received, and based on the Retention period value that you set for the storage.

Before restoring archived data, make sure that the Retention period value overlaps the date in the Timestamp field. If this is not the case, the archived data will be deleted within 1 hour.

- Place the archive partition in the 'detached' section of your storage and unpack the archive:

sudo unzip detached.zip -d <path to the 'detached' directory>

For example:

sudo unzip detached.zip -d /opt/kaspersky/kuma/clickhouse/data/store/d5b/d5bdd8d8-e1eb-4968-95bd-d8d8e1eb3968/detached/

- Run the command to attach the partition:

sudo /opt/kaspersky/kuma/clickhouse/bin/client.sh -d kuma --multiline --query "ALTER TABLE events_local_v2 ATTACH PARTITION ID '<partition ID>'"

Repeat the steps of unpacking the archive and attaching the partition on each replica of the shard.

As a result, the archived partition is attached and its events are again available for search.
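Because the detach and attach commands must be repeated on every replica of the shard, it can help to generate them rather than retype them. The sketch below only builds the command line shown in this section and prints it; it does not execute anything. The function name and the quote check are assumptions added for illustration, while the client path, database, and table name are taken from the commands above.

```python
import shlex
from typing import List

CLIENT = "/opt/kaspersky/kuma/clickhouse/bin/client.sh"
TABLE = "events_local_v2"


def partition_command(action: str, partition_id: str) -> List[str]:
    """Return the argument list for detaching or attaching one partition."""
    if action not in ("DETACH", "ATTACH"):
        raise ValueError("action must be DETACH or ATTACH")
    # Refuse IDs that would break out of the quoted SQL literal.
    if not partition_id or "'" in partition_id or "\\" in partition_id:
        raise ValueError("unexpected characters in partition ID")
    query = f"ALTER TABLE {TABLE} {action} PARTITION ID '{partition_id}'"
    return ["sudo", CLIENT, "-d", "kuma", "--multiline", "--query", query]


if __name__ == "__main__":
    # shlex.join quotes the query so the line can be pasted into a shell.
    print(shlex.join(partition_command("DETACH", "20231130")))
    print(shlex.join(partition_command("ATTACH", "20231130")))
```

Run the printed command on each replica of the shard, as the procedures above require.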

Creating a set of resources for a storage

In the KUMA Console, a storage service is created based on the set of resources for the storage.

To create a set of resources for a storage in the KUMA Console:

- In the KUMA Console, under Resources → Storages, click Add storage.

This opens the Create storage window.

- On the Basic settings tab, in the Storage name field, enter a unique name for the service you are creating. The name must contain 1 to 128 Unicode characters.

- In the Tenant drop-down list, select the tenant that will own the storage.

- In the Tags drop-down list, select the tags for the resource set that you are creating.

The list includes all available tags created in the tenant of the resource and in the Shared tenant. You can find a tag in the list by typing its name in the field. If the tag you entered does not exist, you can press Enter or click Add to create it.

- You can optionally add up to 256 Unicode characters describing the service in the Description field.

- In the Storage condition options field, select an event storage condition in the ClickHouse cluster for the storage, which, when satisfied, will cause events to be transferred to cold storage disks or deleted if cold storage is not configured or is configured incorrectly. The condition is applied to the default space and to events from deleted spaces.

By default, ClickHouse moves events to cold storage disks or deletes them if more than 97% of the storage is full. KUMA also applies an additional 365 days storage condition when creating a storage. You can configure custom storage conditions for more stable performance of the storage.

To set the storage condition, do one of the following:

- If you want to limit the storage period for events, select Days from the drop-down list, and in the field, specify the maximum event storage period (in days) in the ClickHouse hot storage cluster.

After the specified period, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value is 1. The default value is 365.

- If you want to limit the maximum storage size, select GB from the drop-down list, and in the field, specify the maximum storage size in gigabytes.

When the storage reaches the specified size, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value and default value is 1.

- If you want to limit the storage size to a percentage of disk space that is available to the storage (according to VictoriaMetrics), select Percentage from the drop-down list, and in the field, specify the maximum storage size as a percentage of the available disk space. In this case, the condition can also be triggered when the disk space available to the storage is decreased.

When the storage reaches the specified percentage of disk space available to it, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. Possible values: 1 to 95. The default value is 80. If you want to use percentages for all storage spaces, the sum total of percentages in the conditions of all spaces may not exceed 95, but we recommend specifying a limit of at most 90% for the entire storage or for individual spaces.

We do not recommend specifying small percentage values because this increases the probability of data loss in the storage.

For [OOTV] Storage, the default event storage period is 2 days. If you want to use this storage, you can change the event storage condition for it, if necessary.

- If you want to use an additional storage condition, click Add storage condition and specify an additional storage condition as described in step 6.

The maximum number of conditions is two, and you can combine only conditions of the following types:

- Days and storage size in GB

- Days and storage size as a percentage

If you want to delete a storage condition, click the X icon next to this condition.

- In the Audit retention period field, specify the period, in days, to store audit events. The minimum value and default value is 365.

- If cold storage is required, specify the event storage term:

- Event retention time specifies the total KUMA event storage duration in days, counting from the moment when the event is received. When the specified period expires, events are automatically deleted from the cold storage disk. The default value is 0.

The event retention time is calculated as the sum of the event retention time in the ClickHouse hot storage cluster until the condition specified in the Storage condition options setting is triggered, and the event retention time on the cold storage disk. After one of the storage conditions is triggered, the data partition for the earliest date is moved to the cold storage disk, and there it remains until the event retention time in KUMA expires.

Depending on the specified storage condition, the resulting retention time is as follows:

- If you specified a storage condition in days, the Event retention time must be strictly greater than the number of days specified in the storage condition. You can calculate the cold storage duration for events as the Event retention time minus the number of days specified in the Storage condition options setting.

If you do not want to store events on the cold storage disk, you can specify the same number of days in the Event retention time field as in the storage condition.

- If you specified the storage condition in terms of disk size (absolute or percentage), the minimum value of the Event retention time is 1. The cold storage duration for events is calculated as Event retention time minus the number of days from the receipt of the event to triggering of the condition and the disk partition filling up, but until the condition is triggered, calculating an exact duration is impossible. In this case, we recommend specifying a relatively large value for Event retention time to avoid events being deleted.

If you do not want to store events on the cold storage disk, you can set Event retention time to 0.

- Audit cold retention period—the number of days to store audit events. The minimum value is 0.

The Event retention time and Audit cold retention period settings become available only after at least one cold storage disk has been added.

- If you want to change ClickHouse settings, in the ClickHouse configuration override field, paste the lines with settings from the ClickHouse configuration XML file /opt/kaspersky/kuma/clickhouse/cfg/config.xml. Specifying the root elements <yandex>, </yandex> is not required. Settings passed in this field are used instead of the default settings.

Example:

<merge_tree>

<parts_to_delay_insert>600</parts_to_delay_insert>

<parts_to_throw_insert>1100</parts_to_throw_insert>

</merge_tree>

- Use the Debug toggle switch to specify whether resource logging must be enabled. If you want to only log errors for all KUMA components, disable debugging. If you want to get detailed information in the logs, enable debugging.

- If necessary, in the ClickHouse cluster nodes section, add ClickHouse cluster nodes to the storage.

There can be multiple nodes. You can add nodes by clicking the Add node button or remove nodes by clicking the X icon of the relevant node.

Available settings:

- In the FQDN field, enter the fully qualified domain name of the node that you want to add. For example, kuma-storage-cluster1-server1.example.com.

- In the Shard ID, Replica ID, and Keeper ID fields, specify the role of the node in the ClickHouse cluster. The shard and keeper IDs must be unique within the cluster, the replica ID must be unique within the shard. The following example shows how to populate the ClickHouse cluster nodes section for a storage with dedicated keepers in a distributed installation. You can adapt the example to suit your needs.

Example:

ClickHouse cluster nodes

| FQDN | Shard ID | Replica ID | Keeper ID |
| --- | --- | --- | --- |
| kuma-storage-cluster1-server1.example.com | 0 | 0 | 1 |
| kuma-storage-cluster1-server2.example.com | 0 | 0 | 2 |
| kuma-storage-cluster1-server3.example.com | 0 | 0 | 3 |
| kuma-storage-cluster1-server4.example.com | 1 | 1 | 0 |
| kuma-storage-cluster1-server5.example.com | 1 | 2 | 0 |
| kuma-storage-cluster1-server6.example.com | 2 | 1 | 0 |
| kuma-storage-cluster1-server7.example.com | 2 | 2 | 0 |

- If necessary, in the Spaces section, add spaces to the storage to distribute the stored events.

There can be multiple spaces. You can add spaces by clicking the Add space button or remove spaces by clicking the X icon of the relevant space.

Available settings:

- In the Name field, specify a name for the space containing 1 to 128 Unicode characters.

- In the Storage condition options field, select an event storage condition in the ClickHouse cluster for the space, which, when satisfied, will cause events to be transferred to cold storage disks or deleted if cold storage is not configured or is configured incorrectly. KUMA applies the 365 days storage condition when a space is added.

To set the storage condition for a space, do one of the following:

- If you want to limit the storage period for events, select Days from the drop-down list, and in the field, specify the maximum event storage period (in days) in the ClickHouse hot storage cluster.

After the specified period, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value is 1. The default value is 365.

- If you want to limit the maximum storage space size, select GB from the drop-down list, and in the field, specify the maximum space size in gigabytes.

When the space reaches the specified size, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. The minimum value and default value is 1.

- If you want to limit the space size to a percentage of disk space that is available to the storage (according to VictoriaMetrics), select Percentage from the drop-down list, and in the field, specify the maximum space size as a percentage of the size of the disk available to the storage. In this case, the condition can also be triggered when the disk space available to the storage is decreased.

When the space reaches the specified percentage of disk space available to the storage, events are automatically transferred to cold storage disks or deleted from the ClickHouse cluster, starting with the partitions with the oldest date. Possible values: 1 to 95. The default value is 80. If you want to use percentages for all storage spaces, the sum total of percentages in the conditions of all spaces may not exceed 95, but we recommend specifying a limit of at most 90% for the entire storage or for individual spaces.

We do not recommend specifying small percentage values because this increases the probability of data loss in the storage.

When using size as the storage condition, you must ensure that the total size of all spaces specified in the storage conditions does not exceed the physical size of the storage, otherwise an error will be displayed when starting the service.

In storage conditions with a size limitation, use the same units of measure for all spaces of a storage (only gigabytes or only percentage values). Otherwise, if the condition is specified as a percentage for one space, and in gigabytes for another space, the storage may overflow due to mismatch of values, leading to data loss.

- If you want to make a space inactive if it is outdated and no longer relevant, select the Read only check box.

This prevents events from going into that space. To make the space active again, clear the Read only check box. This check box is cleared by default.

- If necessary, in the Event retention time field, specify the total KUMA event storage duration in days, counting from the moment when the event is received. When the specified period expires, events are automatically deleted from the cold storage disk. The default value is 0.

The event retention time is calculated as the sum of the event retention time in the ClickHouse hot storage cluster until the condition specified in the Storage condition options setting is triggered, and the event retention time on the cold storage disk. After one of the storage conditions is triggered, the data partition for the earliest date is moved to the cold storage disk, and there it remains until the event retention time in KUMA expires.

Depending on the specified storage condition, the resulting retention time is as follows:

- If you specified a storage condition in days, the Event retention time must be strictly greater than the number of days specified in the storage condition. The cold storage duration for events is calculated as the Event retention time minus the number of days specified in the Storage condition options setting.

If you do not want to store events from this space on the cold storage disk, you can specify the same number of days in the Event retention time field as in the storage condition.

- If you specified the storage condition in terms of disk size (absolute or percentage), the minimum value of the Event retention time is 1. The cold storage duration for events is calculated as Event retention time minus the number of days from the receipt of the event to triggering of the condition and the disk partition filling up, but until the condition is triggered, calculating an exact duration is impossible. In this case, we recommend specifying a relatively large value for Event retention time to avoid events being deleted.

If you do not want to store events from this space on the cold storage disk, you can set Event retention time to 0.

The Event retention time setting becomes available only after adding at least one cold storage disk.

- In the Filter settings section, you can specify conditions to identify events that will be put into this space. In the Filter drop-down list, select an existing filter, or select Create new to create a new filter.

After the service is created, you can view and delete spaces in the storage resource settings.

There is no need to create a separate space for audit events. Events of this type (Type=4) are automatically placed in a separate Audit space with a storage term of at least 365 days. This space cannot be edited or deleted from the KUMA Console.

- If necessary, in the Disks for cold storage section, add to the storage the disks where you want to transfer events from the ClickHouse cluster for long-term storage.

There can be multiple disks. You can add disks by clicking the Add disk button and remove them by clicking the Delete disk button.

Available settings:

- In the FQDN drop-down list, select the type of domain name of the disk you are connecting:

- Local—for the disks mounted in the operating system as directories.

- HDFS—for the disks of the Hadoop Distributed File System.

- In the Name field, specify the disk name. The name must contain 1 to 128 Unicode characters.

- If you select the Local domain name type for the disk, specify the absolute directory path of the mounted local disk in the Path field. The path must begin and end with a "/" character.

- If you select the HDFS domain name type for the disk, specify the path to HDFS in the Host field. For example, hdfs://hdfs1:9000/clickhouse/.
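Before entering the disk settings in the KUMA Console, the values can be checked against the rules listed above. The following is a minimal sketch, assuming a POSIX shell. The `validate_disk` function and the sample disk names and paths are hypothetical, and the requirement that the Host value starts with `hdfs://` is an assumption based only on the example above.

```shell
# Hypothetical helper, not a KUMA command.
# Usage: validate_disk <Local|HDFS> <disk name> <Path or Host value>
validate_disk() {
    disk_type=$1
    disk_name=$2
    location=$3

    # The name must contain 1 to 128 characters.
    # Note: ${#var} counts characters only in shells that are multibyte-aware;
    # some shells count bytes instead, so non-ASCII names may be over-counted.
    name_len=${#disk_name}
    if [ "$name_len" -lt 1 ] || [ "$name_len" -gt 128 ]; then
        echo "invalid name: expected 1 to 128 characters, got $name_len"
        return 1
    fi

    case "$disk_type" in
        Local)
            # The absolute path must begin and end with "/".
            case "$location" in
                /|/*/) ;;
                *) echo "invalid path: must begin and end with /"; return 1 ;;
            esac
            ;;
        HDFS)
            # Assumption: the Host value is an hdfs:// URL, as in the example.
            case "$location" in
                hdfs://?*) ;;
                *) echo "invalid host: expected an hdfs:// URL"; return 1 ;;
            esac
            ;;
        *)
            echo "unknown disk type: $disk_type"
            return 1
            ;;
    esac
    echo "ok"
}

validate_disk Local cold1 /mnt/cold1/
validate_disk HDFS cold2 hdfs://hdfs1:9000/clickhouse/
```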

- Go to the Advanced settings tab and fill in the following fields:

- In the Buffer size field, enter the buffer size in bytes at which events must be sent to the database. The default value is 64 MB. No maximum value is configured. If the virtual machine has less free RAM than the specified Buffer size, KUMA sets the limit to 128 MB.

- In the Buffer flush interval field, enter the time in seconds for which KUMA waits for the buffer to fill up. If the buffer is not full, but the specified time has passed, KUMA sends events to the database. The default value is 1 second.

- In the Disk buffer size limit field, enter a value in bytes. The disk buffer is used to temporarily store events that could not be sent for further processing or storage. If the disk space allocated for the disk buffer is exhausted, events are rotated as follows: new events replace the oldest events written to the buffer. The default value is 10 GB.

- Use the Disk buffer toggle switch to enable or disable the disk buffer. By default, the disk buffer is enabled.

- Use the Write to local database table toggle switch to enable or disable writing to the local database table. Writing is disabled by default.

If enabled, data is written only on the host on which the storage is located. We recommend using this functionality only if you have configured balancing on the collector and/or correlator: at step 6 (Routing), in the Advanced settings section, the URL selection policy field must be set to Round robin.

If you disable writing, the data is distributed across the shards of the cluster.

- If necessary, use the Debug toggle switch to enable logging of service operations.

- You can use the Create dump periodically toggle switch at the request of Technical Support to generate resource (CPU, RAM, etc.) utilization reports in the form of dumps.

- In the Dump settings field, you can specify the settings to be used when creating dumps. The specifics of filling in this field must be provided by Technical Support.

The set of resources for the storage is created and is displayed under Resources → Storages. Now you can create a storage service.
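The interaction between the Buffer size and Buffer flush interval settings on the Advanced settings tab can be illustrated with a short sketch. It only models the rule stated above (events are sent when the buffer is full or when the interval has elapsed) and does not reflect how KUMA implements it. The `should_flush` function is hypothetical, and 64 MB is assumed to mean 64 × 2^20 bytes.

```shell
# Default values from the Advanced settings tab.
BUFFER_SIZE=$(( 64 * 1024 * 1024 ))   # assumption: 64 MB taken as 64 * 2^20 bytes
FLUSH_INTERVAL=1                      # seconds

# Hypothetical helper, not a KUMA command.
# Usage: should_flush <buffered bytes> <seconds since the last flush>
should_flush() {
    buffered_bytes=$1
    elapsed_seconds=$2
    # Events are sent when the buffer is full or when the interval has passed.
    if [ "$buffered_bytes" -ge "$BUFFER_SIZE" ] || [ "$elapsed_seconds" -ge "$FLUSH_INTERVAL" ]; then
        echo "flush"
    else
        echo "wait"
    fi
}

should_flush 70000000 0   # prints flush: the buffer is full
should_flush 1024 1       # prints flush: the interval has elapsed
should_flush 1024 0       # prints wait
```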

Creating a storage service in the KUMA Console

After the set of resources for a storage has been created, you can proceed to create a storage service in KUMA.

To create a storage service in the KUMA Console:

- In the KUMA Console, under Resources → Active services, click Add service.

- In the Choose a service window that opens, select the set of resources that you just created for the storage and click Create service.

The storage service is created in the KUMA Console and is displayed under Resources → Active services. Now the storage service must be installed on each node of the ClickHouse cluster by using the service ID.

Installing a storage in the KUMA network infrastructure

To install a storage:

- Log in to the server where you want to install the service.

- Execute the following command:

sudo /opt/kaspersky/kuma/kuma storage --core https://<KUMA Core server FQDN>:<port used by KUMA Core for internal communication (port 7210 by default)> --id <service ID copied from the KUMA web console> --install

Example:

sudo /opt/kaspersky/kuma/kuma storage --core https://kuma.example.com:7210 --id XXXXX --install

When deploying several KUMA services on the same host, during the installation process you must specify unique ports for each component using the --api.port <port> parameter. The following setting values are used by default: --api.port 7221.

- Repeat steps 1–2 for each storage node.

Only one storage service can be installed on a host.

The storage is installed.
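When the same command has to be run on several nodes, it can help to assemble it from variables and review it before execution. The sketch below only prints the command and does not run it. The `build_install_cmd` function and the variable names are hypothetical, the values are the placeholders from the example above, and placing `--api.port` before `--install` is an assumption.

```shell
# Placeholder values; replace them with your own.
CORE_FQDN="kuma.example.com"
CORE_PORT=7210        # default port used by KUMA Core for internal communication
SERVICE_ID="XXXXX"    # service ID copied from the KUMA Console
API_PORT=7221         # default value; must be unique for each KUMA service on the host

# Hypothetical helper: prints the installation command without executing it.
build_install_cmd() {
    printf 'sudo /opt/kaspersky/kuma/kuma storage --core https://%s:%s --id %s --api.port %s --install\n' \
        "$CORE_FQDN" "$CORE_PORT" "$SERVICE_ID" "$API_PORT"
}

build_install_cmd
```

Printing the command first makes it easy to confirm the service ID and the port values before running it on each storage node.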
